— April 14, 2019

With artificial intelligence ruling the airwaves, there’s more than a little talk around what all the advancements could mean for professionals. This is especially true in an industry like recruiting, where processes have traditionally been very human-focused.

AI offers answers to the numerous challenges recruiters and hiring managers encounter while providing job seekers and applicants with a smoother and more enjoyable candidate experience. Unfortunately, though the technology introduces solutions to common hiring problems, AI is often misunderstood, especially by the very people who stand to gain the most: hiring teams.

These misunderstandings have left many HR and recruiting professionals fearful of AI’s implications. Has technology reached an unmatchable level that leaves jobs vulnerable and people replaceable? Fortunately, much of the fear around AI stems from how difficult AI is to define.

We want to break down those misconceptions by explaining 3 of the facets of modern AI, especially how each pertains to those in talent acquisition and the candidates they hope to hire. We will also uncover how those facets ease the hiring process and the specific tasks that overwhelm and frustrate recruiters, hiring managers and job seekers.

THE 3 FACETS OF AI TO UNDERSTAND

AI is complex, which isn’t all that surprising when you consider the fact that it is built to mirror human response and knowledge. There are many moving parts, but machine learning, natural language processing and computer vision are some of the most influential in AI for talent acquisition.

Machine Learning is a computer’s ability to learn without being explicitly programmed to do so. When data enters the system, the computer can read the information, detect patterns and make changes or perform tasks based on the input.

Natural Language Processing is a computer’s ability to understand human speech and text without the mediator of a programming language. In other words, a person can speak or type a message to a computer in their native language and the computer will understand and respond accordingly.

Computer Vision, similar to and sometimes confused with machine vision, is a computer’s ability to process data from a digitized image and take action based on the information gathered.

Notice how each of these advanced abilities lends itself to the development and success of AI technology. With each, an AI program can communicate with humans on a level closer to how humans interact with one another. Unfortunately, these concepts are the very reason talent acquisition professionals sometimes fear the advancement of AI in recruiting and hiring.

Traditionally, these processes have been dependent on human interaction because it is so difficult to judge the more abstract understanding of personality and culture, both in organizations and potential hires. However, if computers can gather information on a person based on visual and textual/spoken output, does that mean there’s no need for actual human intervention? Let’s dive deeper into these concepts and how each affects talent acquisition.

MACHINE LEARNING

A computer with machine learning can learn and act without being explicitly programmed to do so. Often, people believe this means a computer is in a sense given life, because it can use information to form conclusions without humans leading the way. However, that is a bit of a misguided understanding of AI. Machine learning is far more linear and only as accurate as the user trains it to be. Though the computer may act on its own with great accuracy, it’s still a computer in need of transactional support from a human.

“What is highly manual today (think about an analyst combing thousand line spreadsheets), becomes automatic tomorrow (an easy button) through technology.”

– Rob Thomas (@robdthomas), General Manager at IBM & Jean-Francois Puget (@JFPuget), IBM Distinguished Engineer

Deep Learning vs. Machine Learning

Machine learning requires a great deal of human intervention. If the programmer guiding a computer with machine learning capabilities doesn’t present very specific details, the computer won’t be able to accurately understand the input, negatively affecting the results. Supplying those details is called feature extraction, and it places a lot of responsibility on the programmer’s guidance.

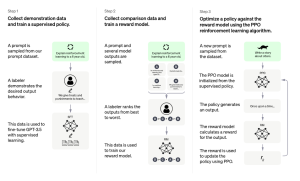

Deep learning, similar to machine learning, can detect patterns and uses feature sets to make decisions. The difference is how it obtains those feature sets. Instead of receiving constant input from humans, it uses training data from humans to build its own understanding. It still relies on patterns and doesn’t “see” minute details, but it learns from feedback on its own errors to create a more accurate predictive model that becomes more complex with each pass.

HOW MACHINE LEARNING WORKS

Think of it this way: if you present a computer with a detailed description of two fruits, say a strawberry and a raspberry, it will be able to discern between the two without much intervention. Because you have provided it with a feature set, or in this example, a range of weights, textures, colors and other characteristics, it will be able to understand the patterns of each so long as they are the only fruits it is asked to separate. Now, if you present it with another fruit it hasn’t yet been exposed to, it will struggle with accuracy. A blackberry will probably wind up with raspberries, kiwis might be strawberries and there’s no telling where an apple will be sent.
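To make the fruit example concrete, here is a minimal sketch of a classifier that only knows two labels. The feature values (weight in grams, redness from 0 to 1) and the nearest-average approach are assumptions chosen for illustration, not a description of any particular product.

```python
from math import dist

# Hypothetical training data: (weight in grams, redness from 0 to 1).
# The numbers are invented for illustration only.
TRAINING = {
    "strawberry": [(12.0, 0.90), (15.0, 0.85), (18.0, 0.95)],
    "raspberry": [(4.0, 0.80), (5.0, 0.75), (3.5, 0.85)],
}


def centroid(points):
    """Average each feature across the labeled examples of one fruit."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))


CENTROIDS = {label: centroid(points) for label, points in TRAINING.items()}


def classify(sample):
    """Return the known label whose average features are closest to the sample."""
    return min(CENTROIDS, key=lambda label: dist(sample, CENTROIDS[label]))


print(classify((14.0, 0.90)))   # a typical strawberry
print(classify((4.5, 0.10)))    # a blackberry: dark, but as light as a raspberry
print(classify((180.0, 0.70)))  # an apple: still forced into a known label
```

Because the sketch has only ever seen two fruits, every new sample is assigned to one of them. It has no way to answer "neither," which is why the blackberry and the apple end up mislabeled.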

The 3 main types of machine learning:

- Supervised Learning: data sets are labeled so that patterns are detected and used to label additional incoming data

- Unsupervised Learning: data sets aren’t labeled and are sorted according to similarities or differences between one another

- Reinforcement Learning: data sets aren’t labeled and the computer instead learns from trial and error feedback
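As a rough illustration of the unsupervised case, the sketch below sorts unlabeled numbers into two groups purely by similarity. It is a simplified one-dimensional version of k-means with two groups; the function name `two_means` and the sample weights are hypothetical.

```python
def two_means(values, rounds=10):
    """Split unlabeled numbers into two groups by similarity (1-D k-means, k=2)."""
    low, high = min(values), max(values)  # start the two group centers far apart
    if low == high:
        return (list(values), [])  # nothing to separate
    groups = ([], [])
    for _ in range(rounds):
        groups = ([], [])
        for v in values:
            # Assign each value to whichever center it is closer to.
            groups[0 if abs(v - low) <= abs(v - high) else 1].append(v)
        # Move each center to the average of its group.
        low = sum(groups[0]) / len(groups[0])
        high = sum(groups[1]) / len(groups[1])
    return groups


# Hypothetical fruit weights in grams, with no labels attached.
light, heavy = two_means([3.5, 15.0, 4.0, 18.0, 5.0, 12.0])
print(light)
print(heavy)
```

No one tells the program which weights belong to which fruit. It only discovers that the numbers fall into a lighter group and a heavier group; naming those groups is still left to a person.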

NATURAL LANGUAGE PROCESSING

A computer with natural language processing (NLP) can understand and generate text and speech without the intervention of programming languages. Currently, the most common way to instruct a computer system is through a programming language (think: Java, Python, Ruby, etc.). Computers with NLP understand human language in its natural state. The program does backend work to interpret the interaction and develop a response, and that response is translated back into natural human language for delivery.

Neural Networking

Sometimes deep learning is referred to as deep neural learning or deep neural networking. Computer programs that use neural networking can learn new concepts and build advanced learning algorithms more quickly and efficiently than programs built by humans.

COMMONLY USED NLP

NLP is a form of AI that is currently based in machine learning, and some of its common examples are speech recognition and text translation. One of the older, most widely known and used forms of NLP is spam detection in email. The system uses text found in the email and its subject line to decide if the contents are malicious or junk. Another commonly used, more modern example is the speech recognition capability seen in the ever humorous sage, Siri, who can understand most voice commands and can provide information as well as control integrated apps and home appliances. Siri isn’t the only voice assistant; Google, Alexa and Cortana are other notable NLP technologies.

Common NLP tasks in software:

- Syntactic Analysis/Grammar Parsing

- Part-of-Speech Tagging

- Tokenization

- Named Entity Recognition
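To give a feel for two of the tasks listed above, here is a toy sketch of tokenization followed by a very naive tagging pass. The tiny word list `TOY_LEXICON` and the rule that treats unknown capitalized words as proper nouns are assumptions for illustration; real NLP systems learn these decisions from large amounts of text.

```python
import re

# Hypothetical mini-dictionary mapping known words to a part of speech.
TOY_LEXICON = {"show": "VERB", "the": "DET", "weather": "NOUN", "in": "ADP"}


def tokenize(text):
    """Split text into word tokens and standalone punctuation tokens."""
    return re.findall(r"[A-Za-z0-9]+(?:'[A-Za-z]+)?|[^\sA-Za-z0-9]", text)


def tag(tokens):
    """Attach a rough label to each token using the toy lexicon."""
    tagged = []
    for token in tokens:
        if not token[0].isalnum():
            label = "PUNCT"
        elif token.lower() in TOY_LEXICON:
            label = TOY_LEXICON[token.lower()]
        elif token[0].isupper():
            label = "PROPN"  # unknown capitalized word: a candidate named entity
        else:
            label = "X"  # unknown word
        tagged.append((token, label))
    return tagged


tokens = tokenize("Siri, show the weather in Austin.")
print(tokens)
print(tag(tokens))
```

Even this small example shows why the tasks build on each other: the text has to be split into tokens before any of them can be labeled, and names such as "Austin" only stand out once the ordinary words around them are recognized.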

It’s this type of advanced AI technology that allows chatbots to exist. From customer service to online purchasing, chatbots allow users to communicate with systems without advanced knowledge of computer language or another human/programmer.

“As artificial intelligence finds its way into more and more of our devices and tasks, it becomes critically important for us to be able to communicate with computers in the language we’re familiar with. We can always ask programmers to write more programs, but we can’t ask consumers to learn to write code just to ask Siri for the weather.”

-Siraj Raval (@sirajraval), Data Scientist

44% of executives believe the most important benefit of AI will be its ability to automate communications that provide data.

COMPUTER VISION

As it sounds, computer vision is the ability of a computer to “see.” Human sight is a complicated matter with multiple processes taking place in less than a second. Though we understand the pieces that lead to seeing in humans, replicating even one of those pieces in machines is a complex process that we’ve been researching for decades.

“For humans, it’s very simple to understand the contents of an image. We see a picture of a dog and we know it’s a dog. We see a picture of a cat and our brain is able to make the connection that we are seeing a cat in the photo. But for computer, it’s not that easy. All a computer “sees” is a matrix of pixels (i.e. the Red, Green, and Blue pixel intensities) of an image. A computer has no idea how to take these pixel intensities and derive any semantic meaning from the image.”

-Adrian Rosebrock (@PyImageSearch), Author & Computer Vision Educator
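The "matrix of pixels" in the quote above can be pictured with a few lines of code. The 2x2 image below is made up for illustration, and `average_color` is a hypothetical helper; the point is only that the computer starts with numbers, not with the idea of a dog or a cat.

```python
# A hypothetical 2x2 image: each pixel is a (red, green, blue) tuple from 0 to 255.
image = [
    [(255, 0, 0), (250, 10, 5)],
    [(0, 0, 255), (20, 30, 240)],
]


def average_color(pixels):
    """Average the red, green and blue intensities over every pixel."""
    flat = [px for row in pixels for px in row]
    return tuple(sum(px[channel] for px in flat) // len(flat) for channel in range(3))


print(average_color(image))
```

The program can summarize the numbers easily, but nothing in the result says what the picture shows. Bridging that gap between pixel intensities and meaning is the hard part of computer vision.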

Researchers have had the most success with reinventing the processes performed by the eye, creating sensors and image processors that can do the same work and, in some cases, perform those tasks better. These “eyes” come in the form of modern cameras that can record images quickly and accurately. The disconnect is that although a machine can capture images, it doesn’t actually recognize the subject matter involved. In other words, we have been successful in granting computers sight, but enabling an understanding of that sight is another story.

TODAY’S COMPUTER VISION

Similar to machine learning AI, it’s possible to build a system that sees a berry and accurately differentiates between raspberries and blackberries. However, when presented with an apple, that system will need new training for recognition and accurate categorization.

The future of computer vision will take the work of engineers, computer scientists, programmers, neuroscientists and a whole lot of other experts to advance, but that doesn’t mean we haven’t established some breakthroughs. Google’s self-driving vehicles understand signs and react appropriately. A team at MIT trained a system to recognize sounds and scenes using video and without the use of any hand-annotated data for training. The first step in the process used computer vision technologies to categorize the images using objects in the videos. The resulting technology, a sound-recognition system, could categorize sounds with 92% accuracy on a data set of 10 sound categories and 74% accuracy on a data set of 50. Interestingly, the MIT team found humans could identify sounds in those data sets with 96% accuracy and 81% accuracy, respectively.

Today’s AI vs. AGI

When we hear AI, we think of robots capable of performing tasks with the accuracy and experience of a human. In that understanding of AI, we expect the system not only to understand patterns, but to have a vast bank of knowledge and, more drastically, to see and react to vague human interactions, like emotions and cultural mannerisms. This technology is called artificial general intelligence, or AGI. Computers of this caliber do not yet exist, and our current technology is merely scratching the surface.

IS AI GREATER THAN HUMANS IN TA?

Today’s smartphones feature something known as predictive text within their keyboard functions. The predictive text feature helps phone users type messages more quickly by suggesting words as they type. The more a smartphone owner uses the keyboard, the more the predictive text feature learns about their habits and vocabulary, and it even begins to understand personal references (think friends’ names and location-specific terms). These are added to the user’s dictionary and soon become suggested items during conversations.
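A heavily simplified sketch of the idea: count which word tends to follow which, then suggest the most frequent followers. The class name `PredictiveText` and the sample messages are hypothetical, and real keyboards use far more sophisticated models than this word-pair counter.

```python
from collections import Counter, defaultdict


class PredictiveText:
    """Toy next-word suggester built from counts of adjacent word pairs."""

    def __init__(self):
        self.following = defaultdict(Counter)

    def learn(self, message):
        """Record, for each word in the message, which word came next."""
        words = message.lower().split()
        for current, upcoming in zip(words, words[1:]):
            self.following[current][upcoming] += 1

    def suggest(self, word, limit=3):
        """Return the words that most often followed the given word so far."""
        counts = self.following.get(word.lower(), Counter())
        return [candidate for candidate, _ in counts.most_common(limit)]


keyboard = PredictiveText()
for text in ["see you at the office", "meet me at the gym", "lunch at the office tomorrow"]:
    keyboard.learn(text)

print(keyboard.suggest("the"))
print(keyboard.suggest("at"))
```

The suggestions improve only because the user keeps typing. The program never understands the conversation; it just reflects the patterns it has been fed.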

However, though a majority of people use this piece of tech on a daily basis, it hasn’t replaced all human interaction. In fact, there are more than a few horror stories about how autocorrect, a part of predictive text, has negatively affected interactions between people. The same can be said for AI in talent acquisition. The use of this technology simply can’t replace the communication and experience of humans, but it can provide a way for teams to perform tasks more accurately and effectively.

AI AND TA PROFESSIONALS WORK BETTER TOGETHER

Artificial intelligence applied to the hiring process eases administrative tasks and organization with better accuracy than previously used technologies. AI provides a more intuitive solution to sourcing and applicant tracking systems, allowing recruiters and hiring managers to focus their attention on the more abstract qualities of a candidate.

For example, using chatbots to communicate with job seekers not only helps applicants move through the application process but also ensures the candidate experience is positive throughout. The candidate can ask questions every step of the way and receive follow-up without a recruiter intervening. Chatbots capitalize on that communication by applying the interactions to the ranking and scoring process. In fact, one of the most useful tools of AI in talent acquisition is its ability to rank and score candidate resumes and cover letters and to support predictive analysis. The right AI technology can use basic keyword search and advanced semantic search to help match candidates to open positions in the organization.
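As a rough picture of the basic keyword side of that matching, the sketch below scores each resume by the share of required keywords it mentions and ranks candidates by that score. The function names, keywords and resume snippets are all hypothetical, and this is not how any specific recruiting product works; semantic search in particular goes well beyond exact word matches.

```python
import re


def keyword_score(resume_text, required_keywords):
    """Return the fraction of required keywords that appear in the resume text."""
    words = set(re.findall(r"[a-z0-9+#]+", resume_text.lower()))
    hits = [kw for kw in required_keywords if kw.lower() in words]
    return len(hits) / len(required_keywords)


def rank(resumes, required_keywords):
    """Order candidate names from the highest keyword score to the lowest."""
    return sorted(
        resumes,
        key=lambda name: keyword_score(resumes[name], required_keywords),
        reverse=True,
    )


# Hypothetical job requirements and resume snippets.
keywords = ["python", "sql", "recruiting"]
resumes = {
    "ana": "Python developer with SQL and reporting experience",
    "ben": "Recruiting coordinator, scheduling",
    "cy": "Python, SQL, recruiting analytics",
}

print(rank(resumes, keywords))
```

A score like this can sort a large stack of applications quickly, but it says nothing about personality or culture fit, which is where recruiters and hiring managers still do the judging.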

The advancements in AI are reaching new heights, but there’s still a great deal of work to be done. Talent acquisition AI has amazing potential to lower time-to-hire, improve matching techniques and decrease negative candidate experiences without costing more of the hiring team’s time and resources.

That’s exactly what Karen was built to do. Karen is a cognitive recruiting assistant with the goal of bringing a better candidate experience to applicants while solving the issues that commonly cause a disconnect between employers and job seekers. Unlike other systems, Karen is trained with IBM Watson’s AI model, a proven leader. Karen can not only view thousands of resumes and cover letters but also rank and score the qualifications, all while providing a chat service to applicants. Karen is advancing how the hiring process is performed.

This article was originally published here.

Business & Finance Articles on Business 2 Community
