Did you know that only 1 out of every 8 A/B tests manages to produce significant results?

And that’s at a professional level! Imagine how the statistics must look for people just getting started.

There are a number of reasons why your A/B tests aren’t giving you the results that you’re looking for. It could be the specific test you’re running, but then again, it might go a little deeper than that.

This article will break down the top 7 reasons your A/B tests aren’t working, and how you can get back on track today.

A/B Testing Mistake #1 – You only copy other people’s tests

We’ve refrained from writing about A/B testing examples on the Wishpond blog for a while now. They can make for an entertaining read, but without context on why a test succeeded, learning that someone got a 27% conversion boost from changing their button color won’t do your business any good.

Of course, articles on A/B testing examples can be a great way to spark your imagination, but they should always be taken with a grain of salt and considered in relation to:

- The offer

- The design of the page

- The audience viewing the test

- The audience’s relationship with the business at hand

For example, take a look at this test from Salesforce, who found success using a directional cue pointing at their form.

After reading this, I thought “I should add a directional cue pointing at my form!”

So I pulled my design skills out of the garage and came up with this:

And the result?

A popup that converted 18% worse than the original.

Key Takeaway:

Just because something worked for someone else doesn’t mean that it will work for you. Take the time to consider why something worked rather than jumping the gun to implement new tests based on what you’ve heard about online. An arrow isn’t necessarily better than not having an arrow. A background image isn’t necessarily better than no background image.

It’s all about context, and nobody knows your context better than you.

Side note: Don’t try to be a hero. Go find your designer and let them do their job (I swear, I thought yellow arrows were good for conversions!).

A/B Testing Mistake #2 – Testing too many variables at once

They say less is more. Well, that’s definitely true of A/B testing.

By testing fewer elements at a time, you’ll get…

- More clarity about what caused a specific change

- A more controlled experiment

- Less volatility within your overall conversions / revenue stream

It might be tempting to test multiple elements on a page at a time. There’s nothing wrong with this, and there’s actually a name for it: multivariate testing.

But along with the potential upside of a massive increase in conversions, you also run the risk of a massive decrease. This is especially troubling for businesses that rely on their landing pages and websites for a significant portion of their business.

If you want to run a true A/B test then you need to focus on testing one element at a time.

Here’s an example of what not to do in an A/B test:

Original

Variation

At first glance you might think that the only difference between these two ads is their background color. But on closer examination you can see that the headline, subheadline, and CTA have changed as well.

Now imagine that the variation wins this test. When creating a new ad, would you know which CTA to use? How about which headline or subheadline? Maybe it was the yellow background that made the difference?

While you might get a bigger change in conversions by changing multiple elements at once, testing more than one thing at a time makes for sloppy tests: you can’t attribute the result to any single change.

Key Takeaway:

Stick to one change per test. Your team will thank you later.

A/B Testing Mistake #3 – Too little traffic, too small changes

How small a change you can test and still reach statistical significance depends on how much traffic your site gets.

One mistake that marketers make is testing very minor changes on low-traffic campaigns. For instance, A/B testing “Create Your Package” against “Create My Package” as a CTA for a landing page with only a couple hundred visitors per month might take a year to reach statistical significance.
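To get a feel for why small changes and low traffic don’t mix, here is a minimal sketch that estimates how many visitors each variation needs before a difference can be detected. It uses the standard normal-approximation sample size formula for comparing two conversion rates. The 5% baseline conversion rate, the lift sizes, the 80% power setting, and the 300 visitors per month are all illustrative assumptions, not figures from the tests in this article, and the function names are made up for this example.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_variation(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed per variation (two-sided test, normal approximation)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)  # conversion rate we hope the variation reaches
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for 95% confidence
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


def months_to_finish(n_per_variation, monthly_visitors, variations=2):
    """Months of traffic needed if visitors are split evenly across variations."""
    return n_per_variation * variations / monthly_visitors


# Assumed numbers: 5% baseline conversion rate, 300 visitors per month.
small_change = visitors_per_variation(baseline_rate=0.05, relative_lift=0.10)
big_change = visitors_per_variation(baseline_rate=0.05, relative_lift=0.50)

for label, n in (("10% lift", small_change), ("50% lift", big_change)):
    months = months_to_finish(n, monthly_visitors=300)
    print(f"{label}: {n} visitors per variation, about {months:.0f} months")
```

Under these assumptions, detecting the small lift needs roughly twenty times as many visitors as detecting the big one. The exact figures will shift with your own baseline and traffic, so treat the output as a rough planning aid rather than a guarantee.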

Take a look at this A/B test that ran for a few months on a low traffic popup.

Original:

Variation:

Do you notice the difference? If you look closely, there’s a small arrow to the right of the CTA text in the variation. While the variation converted slightly better, a minor change like this can take many months to produce a conclusive result.

Generally speaking, it makes more sense to test minor page elements (like subtle directional cues, small copy changes, etc.) on high-traffic campaigns so you can get feedback quickly. Then apply what you learn to your lower-traffic campaigns.

Key Takeaway:

Test big changes on low traffic campaigns (while still keeping it one change per test).

A/B Testing Mistake #4 – Not reaching statistical significance

Even if you’re making big changes on high traffic campaigns, you still need to ensure that you’re reaching statistical significance before calling it a day.

That means not calling a winner before the test has reached a confidence level of about 95%.

It might be tempting to call your tests early, especially if your variation is winning. That can be dangerous, though: variations have been known to make sudden comebacks even after trailing by over 80%.

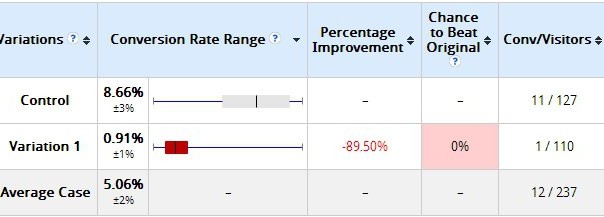

Take a look at this example from Peep Laja of ConversionXL, where he walks through how a variation that was losing by over 89% came back to beat the original by over 25% at a 95% confidence level.

Losing Variation at the early stages of testing:

The variation that ended up winning:

Key Takeaway:

Have patience when testing. Early results won’t always signal a definitive winner. Keep your tests alive until they reach at least a 95% confidence level with at least 100 conversions per variation before calling them.
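If your testing tool doesn’t report a confidence level, the rule of thumb above can be sketched in a few lines. The example below uses a pooled two-proportion z-test and treats “confidence” as 1 minus the two-sided p-value, which is one common convention among A/B testing tools but not the only one. The function names and the sample numbers are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def ab_confidence(conv_a, visitors_a, conv_b, visitors_b):
    """Return (relative lift of B over A, confidence) from a pooled two-proportion z-test."""
    if min(visitors_a, visitors_b) <= 0 or conv_a <= 0:
        raise ValueError("need visitors in both variations and at least one conversion in A")
    rate_a = conv_a / visitors_a
    rate_b = conv_b / visitors_b
    pooled = (conv_a + conv_b) / (visitors_a + visitors_b)
    if pooled >= 1:
        raise ValueError("every visitor converted, so the test cannot be evaluated")
    std_err = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided
    return (rate_b - rate_a) / rate_a, 1 - p_value


def ready_to_call(conv_a, visitors_a, conv_b, visitors_b,
                  min_confidence=0.95, min_conversions=100):
    """Apply the rule of thumb: enough conversions per variation AND enough confidence."""
    _, confidence = ab_confidence(conv_a, visitors_a, conv_b, visitors_b)
    enough_data = min(conv_a, conv_b) >= min_conversions
    return enough_data and confidence >= min_confidence


# Hypothetical early snapshot: B looks far ahead, but there is too little data to call it.
print(ready_to_call(conv_a=10, visitors_a=100, conv_b=20, visitors_b=100))
# Hypothetical later snapshot with at least 100 conversions per variation.
print(ready_to_call(conv_a=100, visitors_a=1000, conv_b=150, visitors_b=1000))
```

Keeping the two checks separate makes it harder to stop a test the moment the confidence number briefly crosses 95% on a handful of conversions.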

A/B Testing Mistake #5 – You’re driving the wrong traffic

There are two main components of an A/B test: the variations you’re testing and the users who are viewing them.

Most marketers get so bogged down with their actual test that they forget to consider the other side: the people behind the test.

In an article posted on Search Engine Journal, columnist Jacob Baadsgaard of Disruptive Advertising explains how he ran a Facebook PPC campaign driving traffic to his new post, “How to Spice Up Your Love Life With AdWords.”

Despite the spicy title, the article wasn’t about relationships at all. Rather, it was an interesting breakdown of how to use AdWords in a novel way.

To his surprise, once the campaign was underway, traffic flowed as usual for a campaign of this sort, but his conversion rate was far worse than usual.

Upon further investigation, he discovered that the ad and the term “spice up your love life” were resonating with women aged 55+.

Because this demographic was uninterested in AdWords marketing, none of them were converting and his campaign was turning into a flop.

After his investigation, he excluded women aged 50+ from the campaign and his conversion rates went back to normal.

Key Takeaway:

Know where your traffic is coming from and what those visitors are looking for. Don’t be so quick to call it quits on an A/B test, especially if you haven’t considered who you’re testing on.

A/B Testing Mistake #6 – Not running tests for a full week

Even if you have a high traffic site with the right audience reaching a 95% confidence level, your A/B testing data could still be flawed.

Why?

Because you need to take into account weekly trends that result from browsing habits. Does your site get a spike of traffic on the weekends? Are there fluctuations in the types of people that visit your site based on your weekly publishing schedule?

All these aspects need to be considered when running an A/B test.

Take a look at this analytics snapshot of an ecommerce business’s weekly traffic breakdown:

Notice how their Sunday traffic is half of what their Friday traffic is? And do you notice how their conversion rate on Saturday is almost 2% less than what it is on Thursday?

Key Takeaway:

Run your tests for a full week to ensure that you’re taking into account day-to-day variations across the entire week.
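One simple way to build this into your planning is to round any estimated test length up to whole weeks, so every day of the week is represented equally. The sketch below shows that rounding plus a check on a planned date range. The helper names and the dates are hypothetical.

```python
from datetime import date
from math import ceil


def full_week_duration(days_needed):
    """Round a planned test length up to a whole number of weeks (minimum one week)."""
    return max(1, ceil(days_needed / 7)) * 7


def covers_whole_weeks(start, end):
    """True if the inclusive date range contains each weekday the same number of times."""
    total_days = (end - start).days + 1
    return total_days >= 7 and total_days % 7 == 0


print(full_week_duration(10))  # a 10-day estimate becomes a 14-day test
print(covers_whole_weeks(date(2024, 3, 4), date(2024, 3, 17)))  # Monday through the second Sunday
```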

A/B Testing Mistake #7 – You’re not proving your high-impact tests completely

There’s no better feeling than having one of your A/B tests destroy the original so that you can go on to crown yourself “King of Conversion.”

But before your inauguration, you might want to double check your findings to ensure that the results weren’t just a fluke and that you’ve actually drilled down to some meaningful results.

This is especially true for tests that surprise you with sudden landslide victories at a high level of statistical significance. These are the tests you might be tempted to call quickly and move on from. But even if you’ve wrapped up a test with 95% confidence, there’s still a 5% chance that the result is a false positive.

Be careful not to jump to conclusions (in these cases particularly) if the results are something that you’ll be applying globally across your entire site or as part of your widespread optimization process.

Key Takeaway:

High-impact test results should be proven beyond a shadow of a doubt by running the test again. Make absolutely sure there’s not an unidentified variable before implementing the change across your entire site. If you’re running tests to 95% significance, there’s only a 1 in 400 chance (5% × 5%) that you’ll get a false positive twice.
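The 1 in 400 figure comes from multiplying the two false positive chances together. Here is that arithmetic as a small sketch, assuming the two runs are independent and each has a 5% false positive rate. The function name is made up for this example.

```python
from fractions import Fraction


def chance_of_repeat_false_positive(alpha, runs):
    """Chance that every one of `runs` independent tests is a false positive."""
    return alpha ** runs


alpha = Fraction(5, 100)  # 95% significance leaves a 5% false positive rate
chance = chance_of_repeat_false_positive(alpha, runs=2)
print(f"1 in {1 / chance}")
```

Using exact fractions instead of floats keeps the result at exactly 1/400 rather than a rounded decimal.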

Wrapping up

There you have it: 7 reasons why your A/B tests might not be giving you the results you were looking for.

Remember, A/B testing is a science, so treat it like one. Isolate your tests, analyze your findings, and always question why something works, rather than just accepting it at face value.

As a bonus tip, remember to always record your A/B testing results in a spreadsheet so that your team can refer back to them later. The human brain tends to forget, and there’s nothing worse than re-running the same test again and again 6 months down the line.
