A/B testing is the staple experiment of conversion rate optimisation (CRO) and a vital tool for improving the performance of web pages, page elements, ad campaigns and every aspect of your marketing strategy.

In this article, we explain what A/B testing is, how to do it, how to test ad variations in Google Ads, what to do after an A/B test and what tools are available to help you run them.

What is A/B testing?

A/B testing (often referred to as split testing) runs two versions of a page or page element against each other, splitting traffic between the two variations to determine which one achieves business goals most effectively.

For example, you might test two versions of a landing page to see which one converts more visitors or two different versions of a CTA on the same landing page. Alternatively, you could test two ad variations in Google Ads or Facebook advertising to determine which one achieves the highest CTR or conversion rates post-click.

A/B testing is one of several test formats you might run in a conversion optimisation strategy and it’s important to understand the use cases for each type:

- A/B testing: Run two variations of the same page or element.

- A/B/n testing: Run more than two versions of the same page or element.

- Multivariate (MVT) testing: Test multiple variables at the same time to determine the most effective combination.

- Multi-page (funnel) testing: Test variations across multiple pages to optimise an entire conversion flow (e.g. onboarding or checkout).

With A/B testing and A/B/n testing, you only ever test one variable at a time, for example by comparing versions of the same landing page, CTA or ad.

If you’re generating enough traffic and have the right data handling systems in place, you may also run multivariate tests on more than one variable at the same time, such as the best combination of hero section, CTA and form design to maximise conversions.

Multi-page tests are the most demanding in terms of traffic and data handling but, with the right resources, you can optimise conversion goals across multiple pages.

You can use these experiments to optimise pages and campaigns for specific marketing goals (e.g. increasing conversions) or to test solutions to issues you identify in your analytics, such as unusually high cart abandonment rates.

How do you do A/B testing?

A/B testing is the simplest form of CRO experimentation but this doesn’t mean it’s easy. Most businesses (and marketers) underestimate the challenges of producing reliable outcomes from A/B tests.

You need the right data processes in place and the resources to run tests for long enough to achieve statistical significance. You also have to identify external variables that may skew the outcome of your experiments because false positives could lead to implementing changes that hurt your marketing performance, rather than boost it.

A/B testing is a data-driven strategy so make sure you have the right processes in place before you start.

How to run an A/B test on your website

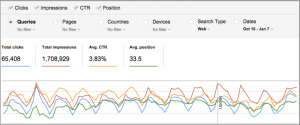

To run an A/B test on your website, you’ll need to identify a page or page element that you want to test, such as a hero section, CTA or web form. You’re not simply picking elements at random, though. Let the data pick pages and elements for you by pinpointing pages with, say, lower-than-average conversion rates.

From here, you can test page variations or identify the page elements you want to test and optimise.
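As a rough illustration of letting the data pick your test candidates, the sketch below flags pages that convert below the site-wide rate. The `PageStats` structure, the `min_visitors` threshold and all figures are hypothetical, so swap in an export from your own analytics tool.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    """Hypothetical per-page totals exported from an analytics tool."""
    url: str
    visitors: int
    conversions: int

    @property
    def conversion_rate(self) -> float:
        return self.conversions / self.visitors if self.visitors else 0.0


def pages_below_average(pages, min_visitors=1000):
    """Return pages converting below the site-wide rate, worst first.

    Pages with fewer than `min_visitors` are ignored because their
    rates are too noisy to compare (the threshold is an assumption).
    """
    eligible = [p for p in pages if p.visitors >= min_visitors]
    total_visitors = sum(p.visitors for p in eligible)
    if total_visitors == 0:
        return []
    site_rate = sum(p.conversions for p in eligible) / total_visitors
    laggards = [p for p in eligible if p.conversion_rate < site_rate]
    return sorted(laggards, key=lambda p: p.conversion_rate)


# Made-up figures, for illustration only.
pages = [
    PageStats("/pricing", 12000, 540),
    PageStats("/demo", 8000, 160),
    PageStats("/signup", 5000, 300),
    PageStats("/blog/post", 400, 2),
]
candidates = pages_below_average(pages)
for page in candidates:
    print(f"{page.url}: {page.conversion_rate:.2%}")
```

With these made-up numbers, only `/demo` falls below the site-wide rate, which makes it the first candidate for a test.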

Step #1: Create your hypothesis

Before you create your first experiment, you should create a hypothesis to guide your test. For example, it’s common for mobile pages to have lower conversion rates than desktop versions and mobile often generates the most traffic – so why not optimise mobile pages to lift conversion rates?

After analysing the mobile and desktop versions of your key landing pages, you might suspect that the responsive layout is pushing CTAs too far down the page on mobile displays and create an A/B test with two variations:

- Control: This is the original page with no changes made.

- Variation: The revised page with CTAs pushed up on mobile displays.

Your hypothesis should be data-driven, too, so you would look at the difference between conversion rates on desktop and mobile, compare traffic volumes and estimate the expected impact of moving CTAs into a more prominent position on mobile displays.

You should come up with a specific number – for example, variation (B) will result in a 3.5% lift in conversion rates.
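Putting a number on your hypothesis also lets you estimate how much traffic the test will need. The sketch below uses a standard normal-approximation formula for comparing two conversion rates. It assumes the 3.5% figure is a relative lift, and the 4% baseline rate, 5% significance level and 80% power are illustrative assumptions rather than recommendations.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed per variant for a two-sided test.

    baseline_rate: control conversion rate, e.g. 0.04 for 4%
    relative_lift: expected relative improvement, e.g. 0.035 for 3.5%
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired power
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Hypothetical baseline of 4% with the 3.5% lift from the hypothesis.
needed = sample_size_per_variant(baseline_rate=0.04, relative_lift=0.035)
print(f"Visitors needed per variant: {needed:,}")
```

Under these assumptions, a small lift on a low baseline calls for a sample in the hundreds of thousands per variant, which is why it helps to estimate the expected impact before you commit to a test.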

Step #2: Set up your A/B test

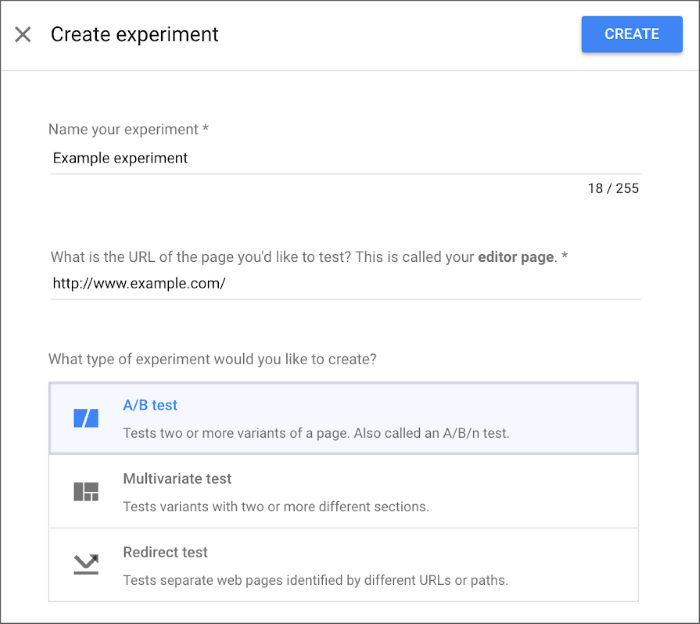

The specifics of this process will vary depending on the software you use to run your test. If you’re using Google Optimize, you can follow these steps to create your A/B test:

- Go to your Optimize Account (Main menu > Accounts)

- Click on your Container name to get to the Experiments page

- Click Create experiment

- Enter an Experiment name (up to 255 characters)

- Enter an Editor page URL (the web page you’d like to test)

- Click A/B test

- Click Create

Once you’ve set up your A/B test, Google Optimize will ask you to create the variant for your experiment. Like most conversion optimisation platforms, Optimize has a visual editor that you can use to edit your control page and create your variant.

Step #3: Create your variant

In Google Optimize, you’ll find a Variants card at the top of the experiment page and you can create a new variant by clicking on the blue + NEW VARIANT button at the bottom-right of the card.

To start making changes, click on the variant row (which will say “0 changes”) to launch the visual editor, which you can use to select elements on the page and make your edits.

- Click on any web page element you wish to edit (e.g. the hero section, text, etc.)

- Use the editor panel to make a change (e.g. change hero height)

- Click Save

- Continue making edits as necessary

- Click Done

If you’re looking to push your CTAs further up the page on mobile, you might reduce the height of your hero section, decrease the size of heading text or restructure some of your content to use space more efficiently, meaning users encounter your CTAs on mobile with less scrolling.

Alternatively, you could write more concise landing page copy that makes the same impact while taking up less vertical space.

Step #4: Set variant weighting

In Google Optimize (and most A/B testing tools), variant weighting is set to 50% by default, meaning each visitor is randomly assigned to one of the two versions with equal probability. Roughly half will see the control when they click through to your page and the rest will see the variant.

You can adjust this setting if you want more of your traffic to see one version. Just be aware that an uneven split means the smaller group collects data more slowly, so you will need to run the test for longer to achieve statistical significance.
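To make the idea of weighting concrete, here is a minimal sketch of weighted random assignment. It is a generic illustration rather than how any particular testing tool works internally, and the function name and weights are assumptions.

```python
import random


def assign_variant(weights, rng=random):
    """Pick a version at random according to its weight.

    weights: mapping of version name to weight, e.g. {"control": 0.5, "variant": 0.5}
    """
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


# Simulate 10,000 visitors with the default 50/50 weighting.
rng = random.Random(42)  # fixed seed so the simulation is repeatable
counts = {"control": 0, "variant": 0}
for _ in range(10_000):
    counts[assign_variant({"control": 0.5, "variant": 0.5}, rng)] += 1
print(counts)
```

Because assignment is random, the two counts land close to 5,000 each rather than exactly on it. Changing the weights to, say, 0.8 and 0.2 shows how slowly the smaller group accumulates visitors.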

Step #5: Apply your targeting settings

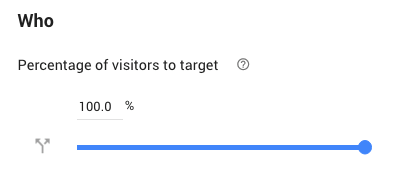

You have to be careful with targeting in A/B tests because you don’t want to introduce any bias into your experiments. However, there are legitimate reasons to target users and the first type of targeting you’ll want to consider is the percentage of visitors to include in your test.

You’re not obliged to include everyone in your experiment and you may prefer to run your test for longer on a smaller share of your traffic rather than include all of it. In Google Optimize, you can simply move the Percentage of visitors to target slider to change this setting.
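The trade-off between traffic share and test length can be estimated with some simple arithmetic. The sketch below is a rough planning aid under stated assumptions: the required sample per variant and daily visitor figures are hypothetical, and rounding up to whole weeks is a common convention (so every day of the week is represented equally), not a rule from any specific tool.

```python
from math import ceil


def estimated_test_days(sample_per_variant, daily_visitors, traffic_share=1.0, variants=2):
    """Estimate how many days a test needs, rounded up to whole weeks.

    sample_per_variant: visitors required in each version
    daily_visitors: average daily visitors to the tested page
    traffic_share: fraction of visitors included in the test (0 to 1)
    """
    visitors_in_test_per_day = daily_visitors * traffic_share
    if visitors_in_test_per_day <= 0:
        raise ValueError("The test needs some traffic to run")
    days = ceil(sample_per_variant * variants / visitors_in_test_per_day)
    return ceil(days / 7) * 7  # assumption: always run full weeks


# Hypothetical page with 4,000 daily visitors needing 20,000 visitors per version.
print(estimated_test_days(20_000, 4_000, traffic_share=1.0))
print(estimated_test_days(20_000, 4_000, traffic_share=0.5))
```

In this example, halving the share of visitors in the test roughly doubles the number of days you need before rounding to full weeks.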

The next targeting options you’ll want to think about are first-time and repeat visitors. It’s generally a good idea to show visitors the same variant if they return to your site again rather than confuse the user experience with designs that keep changing.

The simplest way to reduce this problem is to exclude returning visitors from your experiment, although someone who saw the variant on their first visit will then see the control on later visits. This isn’t a perfect solution but the only other option is to assign a tracking ID to individual users and store the variation they see on their first visit.

This allows you to deliver the same experience on every subsequent visit but this solution is also vulnerable to tracking issues, such as cleared cookies or visits from a different device.
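One way to keep the experience consistent is deterministic hashing: instead of storing the variation as a parameter, you derive it from the tracking ID each time, so the same ID always maps to the same version. This is a different technique from the stored-parameter approach described above, shown here as a minimal sketch. The ID format and experiment name are hypothetical, and it still depends on the visitor keeping the same ID.

```python
import hashlib


def bucket(visitor_id: str, experiment: str) -> float:
    """Map a visitor ID to a stable number in the range [0, 1)."""
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    return int(digest[:8], 16) / 0x100000000


def sticky_variant(visitor_id: str, experiment: str, variant_weight: float = 0.5) -> str:
    """Return the same version for the same visitor on every visit."""
    return "variant" if bucket(visitor_id, experiment) < variant_weight else "control"


# Hypothetical tracking ID and experiment name.
first_visit = sticky_variant("visitor-123", "mobile-cta-position")
return_visit = sticky_variant("visitor-123", "mobile-cta-position")
print(first_visit, return_visit)
```

Including the experiment name in the hash means a visitor who lands in the variant for one test is not automatically placed in the variant for every other test.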

If you want to apply more advanced targeting options to your A/B tests, such as location targeting or behavioural targeting, you can apply any of the following in Google Optimize:

- URL targeting

- Google Analytics Audiences targeting

- Behaviour targeting

- Geotargeting

- Technology targeting

- JavaScript variable targeting

- First-party cookie targeting

- Custom JavaScript targeting

- Query parameter targeting

- Data layer variable targeting

If we run with the example of optimising mobile landing pages, you’ll want to target mobile users specifically and you can do this with Technology targeting > Device category > Mobile in Google Optimize.

Step #6: Run your experiment

With your experiment set up and your variant created, you’re ready to start your A/B test. Now, you need to decide how long to run your test for and the aim here is to ensure you achieve a reliable outcome. If your test results in a false positive, you could implement changes that damage the performance of your website.

By achieving statistical significance in your tests, you can implement changes with confidence and use the results of tests to inform future decisions.
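If you want to check significance yourself, a two-proportion z-test is one common approach for comparing conversion rates. The sketch below is a simplified illustration using only Python’s standard library, and the visitor and conversion counts are made up.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for control A versus variant B."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("Cannot test when no (or all) visitors converted")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Made-up results: 400 of 10,000 control visitors converted vs 460 of 10,000 for the variant.
z, p_value = two_proportion_z_test(400, 10_000, 460, 10_000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
print("Significant at 95%" if p_value < 0.05 else "Not significant yet")
```

A p-value below 0.05 corresponds to the conventional 95% significance level, but checking repeatedly and stopping the moment you cross it inflates the risk of a false positive, so decide your sample size in advance.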

If you’re using Google Optimize, run your test until at least one variant achieves a 95% probability to beat baseline score, which is the platform’s closest equivalent metric to confidence or statistical significance (there are slight differences between all three).
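To get a feel for what a probability-to-beat-baseline figure represents, the sketch below estimates it with a simple Bayesian simulation. This is a generic Beta-Binomial model with a uniform prior, chosen as an assumption for illustration. It is not Google Optimize’s actual model, and the counts are made up.

```python
import random


def probability_to_beat_baseline(conv_a, n_a, conv_b, n_b, draws=20_000, seed=0):
    """Estimate the probability that variant B's true rate exceeds control A's.

    Each conversion rate gets a Beta(1 + conversions, 1 + non-conversions)
    posterior, and we count how often a draw for B beats a draw for A.
    """
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        wins += rate_b > rate_a
    return wins / draws


# Made-up results: 400 of 10,000 for the control vs 460 of 10,000 for the variant.
prob = probability_to_beat_baseline(400, 10_000, 460, 10_000)
print(f"Probability to beat baseline: {prob:.1%}")
```

With these illustrative numbers, the estimate clears the 95% threshold, whereas identical conversion counts for both versions would hover around 50%.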

Keep in mind that your A/B testing software can’t do all of the work on its own. You also need to account for external variables that could produce skewed results – for example, seasonal trends, holiday events, economic trends and anything else that could exaggerate results in either direction.

If Christmas is your busiest time of the year, don’t expect an A/B test launched in mid-December to produce reliable results by the end of January. Likewise, if your sales are heavily affected by the weather, you have to consider meteorological events, such as a summer heatwave or an unusually wet spring.

How to test ad variations in Google Ads

In Google Ads, you can A/B test different versions of the same ad by creating ad variations.

“Ad variations allow you to easily create and test variations of your ads across multiple campaigns or your entire account. For example, you can test how well your ads perform if you were to change your call to action from ‘Buy now’ to ‘Buy today’. Or you can test changing your headline to ‘Call Now for a Free Quote’ across ads in multiple campaigns.”

The functionality of ad variations is essentially the same as running A/B tests on a landing page. You create different versions of the same ad and test them on split segments of your target audience to determine the top performer.

The good news is, ad variations are quick and easy to set up in Google Ads.

Step #1: Create your ad variation

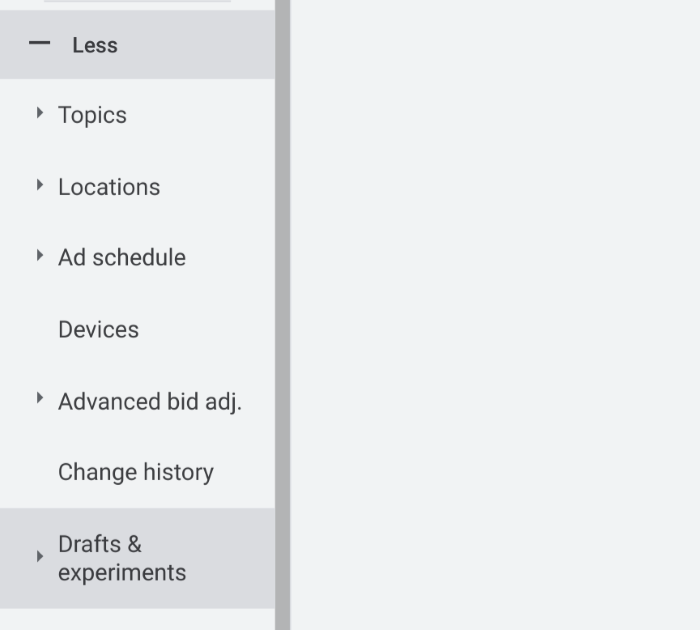

To create an ad variation, sign in to your Google Ads account, click on the + More tab in the left-hand menu and select Drafts & experiments from the expanded menu.

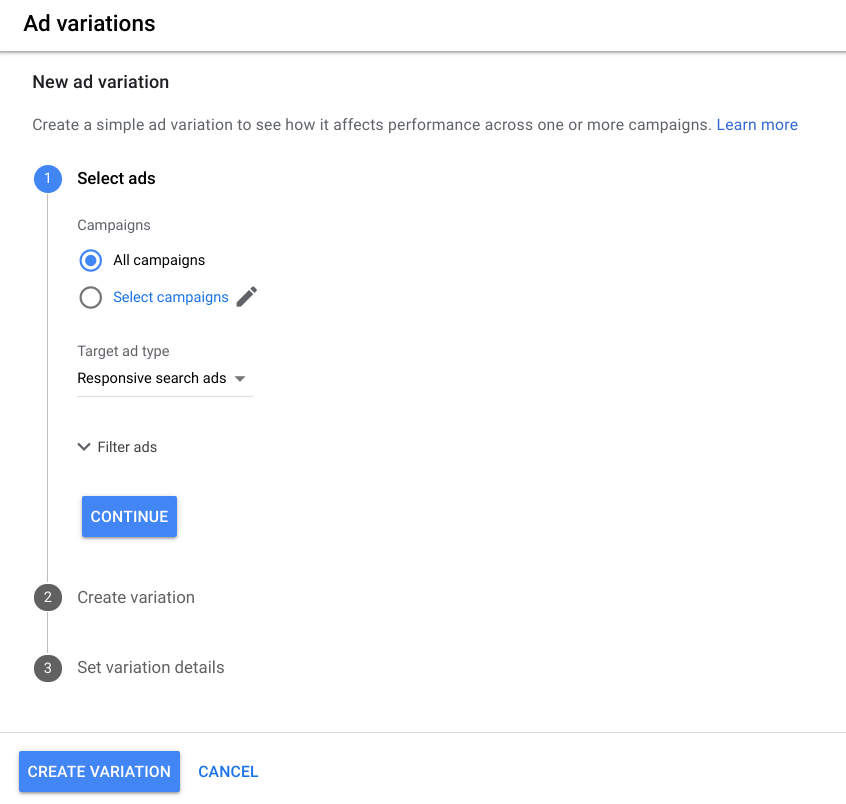

Then, click on the Ad variations tab in the same menu and click the blue + button or the + NEW AD VARIATION button to create your first variations.

On the next screen, you’ll be asked to choose which campaigns you want to create your ad variation for and you can stick with the default All campaigns or manually select campaigns (recommended).

You’ll also be asked to select the ad type you’re creating your variant for and you can choose Responsive search ads or Text ads from the dropdown list.

You can filter which ads your variations are created for by clicking on the Filter ads tab and selecting options from the dropdown list to refine ads by the following:

- Headlines

- Descriptions

- Headlines and descriptions

- Path 1

- Path 2

- Paths

- Final URL

- Final mobile URL

- All URLs

You can apply multiple filters to narrow down the ads with precision.

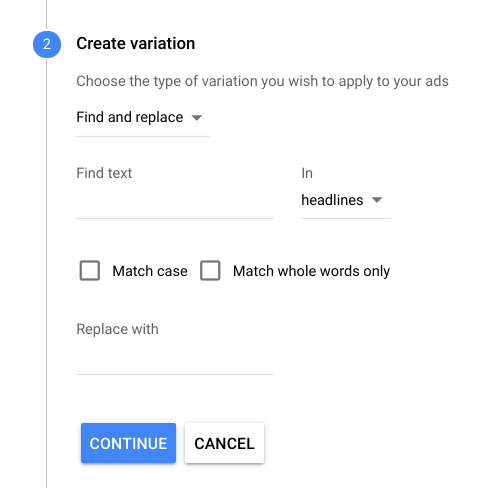

Once you’re finished, click on Continue and the next step prompts you to create your ad variation by specifying which parts of the original ad you want to change.

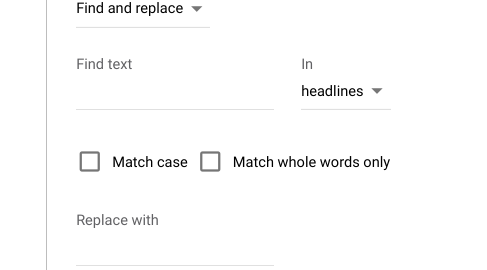

You can use the Find and replace option to pinpoint specific text in your ads and replace it with alternative copy. Simply type the text you want to replace in the Find text box, select the asset it’s located in (headline, descriptions, etc.) and type your replacement text in the Replace with box.

You can also update URLs and update the full text of headlines and descriptions.
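Before you apply a find and replace across many ads, it can help to preview the resulting copy offline. The sketch below does this on a plain list of dictionaries. It does not call the Google Ads API, the ad structure is hypothetical, and the case-sensitive matching is an assumption of this sketch rather than a statement about how Google Ads matches text.

```python
def preview_find_and_replace(ads, find, replace_with, field="headlines"):
    """Return copies of the ads with `find` swapped for `replace_with` in one field.

    The original ads are left untouched so you can compare before and after.
    """
    varied = []
    for ad in ads:
        new_ad = dict(ad)
        new_ad[field] = [text.replace(find, replace_with) for text in ad[field]]
        varied.append(new_ad)
    return varied


# Hypothetical ad copy exported from your account.
ads = [
    {"headlines": ["Buy now and save", "Free delivery"], "descriptions": ["Buy now online."]},
    {"headlines": ["Summer sale"], "descriptions": ["Limited stock."]},
]
variations = preview_find_and_replace(ads, "Buy now", "Buy today")
for original, varied in zip(ads, variations):
    print(original["headlines"], "->", varied["headlines"])
```

Only the selected field changes, so in this example the description that also contains “Buy now” stays as it was, which mirrors the need to choose the asset you want to edit.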

Once you’re done, click on Continue and you’ll be asked to name your variation on the next screen. You’ll also be prompted to set the start and end dates for your variation and set the experiment split, which is set to 50% by default.

Once you’re happy with your settings, click CREATE VARIATION and you’re all set.

What to do after an A/B test

Once your test has gathered enough data and reached statistical significance, it’s time to analyse the results of your experiment. The first question is whether your hypothesis is proven right by the outcome. If your hypothesis was correct, you’ve got a winning A/B test but don’t feel too bad if the results prove your hypothesis to be incorrect.

Even with a losing A/B test result, you may find the variant still has a positive impact, e.g. increasing conversions by 1.8% instead of your target 3.5%.

Alternatively, your variant may perform worse than the control but this isn’t the disaster it may feel like in your first losing A/B test. Every outcome produces data that you can learn from and compile with future data to build historical insights to inform future design choices and optimisation campaigns.
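The three outcomes described above can be expressed as a simple comparison between the observed lift and your target. This is a minimal sketch that treats lift as a relative change in conversion rate, and the rates used are hypothetical.

```python
def relative_lift(control_rate, variant_rate):
    """Relative change in conversion rate from control to variant."""
    return (variant_rate - control_rate) / control_rate


def classify_outcome(control_rate, variant_rate, target_lift):
    """Compare the observed lift with the lift predicted by your hypothesis."""
    lift = relative_lift(control_rate, variant_rate)
    if lift >= target_lift:
        verdict = "hypothesis met"
    elif lift > 0:
        verdict = "positive, below target"
    else:
        verdict = "no improvement"
    return lift, verdict


# Hypothetical result: control converts at 4.000% and the variant at 4.072%.
lift, verdict = classify_outcome(0.04, 0.04072, target_lift=0.035)
print(f"Observed lift: {lift:.1%} ({verdict})")
```

Logging the lift and verdict for every experiment, including the losing ones, gives you the historical record you need to inform future tests.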

You also shouldn’t put too much faith in positive results the first time around. If your variant outperforms the control version, you still want to test the waters with any changes. In an ideal world, you would run the same test again multiple times to confirm the initial verdict but that’s not always viable for a company with limited resources.

Still, any changes implemented after a positive result should be rolled out gradually and monitored closely. For example, if your new mobile landing pages achieved higher conversion rates in testing, you might update one landing page or a small group and monitor performance to check they’re achieving the expected results when they get 100% of your traffic.

What A/B testing tools are there?

There’s no shortage of A/B testing tools on the market these days and the best tool for the job depends on what you want to test. If you want an all-in-one system for running A/B tests and other experiments across your website, then comprehensive tools like VWO and Optimizely aim to provide everything you need in a CRO platform.

You’ll also find plenty of marketing tools that include A/B testing as a feature, even if it’s reserved for their more expensive plans. For example, landing page builder Unbounce includes A/B testing on most of its plans and many email marketing platforms like Campaign Monitor include A/B testing for optimising email campaigns.

Google Optimize is a free experimentation system that includes A/B testing features (and plenty more) as part of its comprehensive optimisation platform. The free version is more than capable of managing A/B tests while the paid version (Optimize 360) offers a complete testing suite for enterprise-level companies.
