What defines quality content, and how can its value be quantified? Columnist Dave Davies reviews studies done on this topic and presents his conclusions.

Those who know me know that I’m primarily a technical SEO. I like on-site content optimization to be sure, but I like what I can measure — and now that keyword densities are mostly gone, I find it slightly less rewarding during the process (though equally rewarding via the outcome).

For this reason, I’ve never been a huge fan of the “quality content is awesome for rankings simply because quality content is awesome for rankings” argument for producing … well … quality content.

Quality content is hard to produce and often expensive, so its benefits need to be justified, especially if the content in question has nothing to do with the conversion path. I want to see measured results. The arguments for quality content are convincing, to be sure — but the pragmatist in me still needs to see hard evidence that quality content matters and directly impacts rankings.

I had two choices on how to obtain this evidence:

- I could set up a large number of very expensive experiments to weight different aspects of content and see what we come up with.

- Or I could do some extensive research and benefit from the expensive experiments others have done. Hmmmmm.

As I like to keep myself abreast of what others are publishing, and after seeing a number of documents around the web recently covering exactly this subject, I decided to save the money and work with the data available — which, I should add, is from a broader spectrum of different angles than I could produce on my own. So let’s look at what quality content does to rankings.

What is quality content?

The first thing we need to define is quality content itself. This is a difficult task, as quality content can range from 5,000-word white papers on highly technical areas to evergreen content that is easy but time-consuming to produce, to the perfect 30-second video put on the right product at the right time. Some quality content takes months to produce, some only minutes.

Quality content cannot be defined by a fixed set of criteria. Rather, it is putting the content your visitors want or need in front of them at the right time. Quality is defined by the simple principle of exceeding your visitors’ expectations of what they will find when they get to your web resource. That’s it.

Now, let’s look at what quality content actually does for your rankings.

Larry Kim on machine learning and its impact on ranking content

Anyone in the PPC industry knows Larry Kim, the founder and CTO of WordStream, but the guy knows his stuff when it comes to organic as well. And we share a passion: we both are greatly intrigued by machine learning and its impact on rankings.

We can all understand that machine learning systems like RankBrain would naturally be geared toward providing better and better user experiences (or what would they be for?). But what does this actually mean?

Kim wrote a great and informative piece for “Search Engine Journal” providing some insight into exactly what this means. In his article, he takes a look at WordStream’s own traffic (which is substantial), and here’s what he found:

- Kim looked at the site’s top 32 organic traffic-driving pages prior to machine learning being introduced into Google’s algorithm; of these pages, the time on site was above average for about two-thirds of them and below average for the remaining third.

- After the introduction of machine learning, only two of the top 32 pages had below-average time on site.

The conclusion Kim draws from this, and one I agree with, is that Google is becoming better at weeding out pages that do not match the user intent. In this case, it is demoting pages that do not have high user engagement and rewarding those that do.

The question is, does it impact rankings? Clearly the demotion of poor-engagement pages on the sites of others would reward sites with higher engagement, so the answer is yes.

Kim also goes on to discuss click-through rate (CTR) and its impact on rankings. Assuming your pages have high engagement, does having a higher click-through rate impact your rankings? Here’s what he found:

What we can see in this chart is that over time, the pages with higher organic click-through rates are rewarded with higher rankings.

What do CTRs have to do with quality content, you might ask? To me, the titles and descriptions are the most important content on any web page. Write quality content in your titles and descriptions, and you’ll improve your click-through rate. And provided that quality carries over to the page itself, you’ll improve your rankings simply based on the user signals generated.

Of course, I would be remiss to base any full-scale strategy on a single article or study, so let’s continue …

Eric Enge on machine learning’s impact on ranking quality content

Eric Enge of Stone Temple Consulting outlined a very telling test, and the results appeared right here on Search Engine Land in January. Here’s what I love about Enge: He loves data. Like me, he’s not one to follow a principle simply because it’s trendy and sounds great — he runs a test, measures and makes conclusions to deploy on a broader scale.

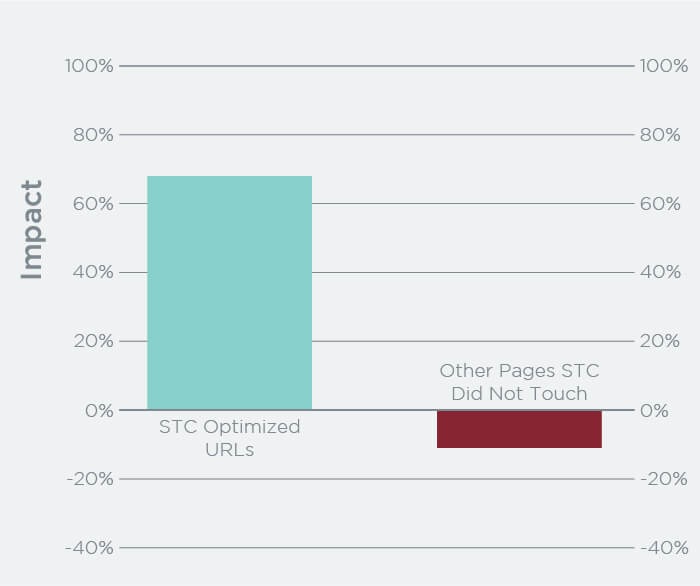

What Stone Temple Consulting did for this test was replace the text on category pages — which had been written as “SEO copy” and was not particularly user-friendly — with new text that “was handcrafted and tuned with an explicit goal of adding value to the tested pages.” It was not SEO content by the classic definition; it was user content. And here’s what they found:

The pages they updated with high-quality content saw a 68 percent increase in traffic, whereas the control pages took a hit of 11 percent. Assuming that all the pages would otherwise have taken the 11 percent drop, the updated pages actually improved by about 80 percent. This was accomplished simply by adding content for the users instead of relying on content that search engines wanted back in 2014.
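For anyone who wants to sanity-check that adjustment, here is a minimal sketch of the arithmetic. It assumes two possible readings of "adjusting for the control": an additive one (the gap between the two changes, in percentage points) and a relative one (test traffic compared with where the control trend would have left it). The function names are illustrative and do not come from Enge's article, and the sketch does not claim to reproduce the method Stone Temple actually used.

```python
# Figures as reported in the article for the Stone Temple test.
TEST_CHANGE = 0.68      # updated pages: +68 percent traffic
CONTROL_CHANGE = -0.11  # control pages: -11 percent traffic


def additive_lift(test: float, control: float) -> float:
    """Gap between the two traffic changes, in percentage points."""
    return (test - control) * 100


def relative_lift(test: float, control: float) -> float:
    """Test traffic versus the level the control trend implies, in percent."""
    return ((1 + test) / (1 + control) - 1) * 100


if __name__ == "__main__":
    print(f"Additive: {additive_lift(TEST_CHANGE, CONTROL_CHANGE):.0f} percentage points")
    print(f"Relative: {relative_lift(TEST_CHANGE, CONTROL_CHANGE):.1f} percent")
```

The additive reading gives 79 percentage points, which is close to the 80 percent quoted above. The relative reading comes out somewhat higher, so whichever way the control is applied, the updated pages clearly outperformed the untouched ones.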

Eric points out in his article that Hummingbird’s role in helping Google to understand natural language, combined with the speed of adjustment facilitated by machine learning, allows Google to reward sites that provide a good user experience, even when the content is not rich in keywords or traditional SEO signals.

Brian Dean on core ranking metrics

Back in September, Brian Dean of Backlinko wrote an interesting piece breaking down the core common elements of the top-ranking sites across a million search results. This is a big study, and it covers links, content and some technical considerations, but we’re going to be focusing only on the content areas here.

So with this significant amount of data, what did they find the top-ranking sites had in common with regard to content?

- Content that was topically relevant significantly outperformed content that didn’t cover a topic in depth.

- Longer content tended to outrank shorter content, with the average first-page result containing 1,890 words.

- A lower bounce rate was associated with higher rankings.

Topically relevant content appears to be more about what is on the page and how it serves users than whether it contains all the keywords. To use their example, for the query “indonesian satay sauce,” we find the following page in the results:

This page is beating out stronger sites, and it doesn’t actually use the exact term “indonesian satay sauce” anywhere on the page. It does, however, include a recipe, information on what a satay is, variations on it and so on. Basically, they beat stronger sites by having better content. Not keyword-stuffed or even “keyword-rich,” just better and more thorough content.

Quality content, it seems, has taken another victory in the data.

So what we see is …

I could go on with other examples and studies, but I’d simply be making you suffer through more reading to reinforce what I believe these three do well: illustrate that there is a technical argument for quality content.

More important perhaps is the reinforcement that “quality content” follows no strict definition, apart from providing what your user wants (although that may periodically be biased by what Google believes your user wants prior to attaining any information about them directly). Your click-through rates, time on page, bounce rate, the thoroughness of your pages, and pretty much anything to do with your visitors and their engagement, all factor in.

The goal, then, is to serve your users to the best of your ability. Produce high-quality content that addresses all their needs and questions and sends them either further down your conversion funnel or on to other tasks — anything but back to Google to click on the next result.

If you need one more reinforcement, I have one, though it has no supporting data beyond the authority of its source. Periodically, Google either releases its Quality Rater’s Guidelines or has them leaked. You can download the most recent (2016) in this post. While I did a fuller evaluation of these guidelines here, the key takeaway is as follows:

The quality of the Main Content (MC) is one of the most important considerations in Page Quality rating. For all types of webpages, creating high quality MC takes a significant amount of at least one of the following: time, effort, expertise, and talent/skill.

So we don’t get metrics here, but what we do get is a confirmation that Google is sending human raters to help it better understand what types of content require time, effort, expertise and talent/skill. Combine this information with machine learning and Hummingbird, and you have a system designed to look for these things and reward them.

Now what?

Producing quality content is hard. I’ve tried to do so here and hope I’ve succeeded (I suppose Google and social shares will let me know soon enough). But if you’re looking at your site trying to think of where to start, what should you be looking at?

This, of course, depends on your site and how it’s built. My recommendation is to start with the content you already have, as Eric Enge did in his test. Rather than trying to build out completely new pages, simply come up with a way to serve your users better with the content you already have. Rewriting your current pages, especially ones that rank reasonably well but not quite where you want them to be, yields results that are easily monitored, and you’ll not only be able to see ranking changes but also get information on how your users are reacting.

If you don’t have any pages you can test with (as unlikely as that may be), then you need to brainstorm new content ideas. Start with content that would genuinely serve your current visitors. Think to yourself, “When a user is on my site and leaves, what question were they trying to answer when they did so?” Then create content to address that, and put it where that user will find it rather than leave.

If users are leaving your site to find the information they need, then you can bet the same thing is happening to your competitors. When these users are looking for the answer to their question, wouldn’t it be great if they found you? It’s a win-win: You get quality content that addresses a human need, and you might even intercept someone who was just at a competitor’s website.

Beyond that, the world is your oyster. There are many forms of quality content. Your job is “simply” to find the pearl in the sea of possibilities.

[Article on Search Engine Land.]

