The Old Farmer’s Almanac is the oldest continuously published periodical in North America. It was first published in 1792 by Robert B. Thomas, who wanted an almanac “to be useful with a pleasant degree of humor.” Many long-time Almanac followers claim that its forecasts are 80% to 85% accurate.

This claim was seriously tested in 1816 when Thomas made a mistake in his Almanac. His weather forecast for July and August called for snow! He had mistakenly swapped the January and February forecasts with those for July and August. Although he destroyed most of the copies forecasting snow and reprinted a corrected edition, some of the original copies remained in circulation. But then, something exceptional happened on the other side of the world.

Mount Tambora erupted on 10 April 1815 in the Dutch East Indies (now Indonesia). It “produced the largest eruption known on the planet during the past 10,000 years.” Global temperatures dropped by as much as 3 degrees Celsius and turned 1816 into the “year without a summer” in Europe. Volcanic dust circled the globe for months, lowering temperatures and, guess what, it snowed in New England and Canada in July and August of 1816. Thomas’s error could have put him out of business, but Mount Tambora thought otherwise. Instead, it gave his Almanac notoriety that lasted for decades.

After all these unlikely events, I’m pretty sure Thomas implemented a mechanism to double-check these kinds of errors in future editions. Natural disasters won’t always cover his tracks in the future.

Today’s weather forecasting is far more advanced than it was in the days of Mount Tambora. Satellites, radars and supercomputers (and probably quantum computers in the near future) power those forecasts every day. And even with all this technology, we still get a good laugh at a forecast of a 40% chance of sun and clouds today. Who couldn’t predict that?

More seriously, weather forecasts have helped us narrow our decision-making process. Weather forecasts help us decide if we bring an umbrella and a raincoat with us. They advise us on our driving habits when the road conditions are bad on our commute to work. They help us decide if we should take the family camping this weekend. When dealing with uncertainty, and the weather holds a lot of uncertainty, forecasts are of value to narrow our decision-making process.

I believe Agile software development is more than ready to use new forecasting techniques that express uncertainty in order to narrow its decision-making process. These new forecasting techniques are based not on estimates but on historical data, which frees up developers’ time to focus on what they do best.

Definition of a forecast

To clarify my definition of a forecast for the rest of this post, I refer to chapter 14 of Daniel Vacanti’s book Actionable Agile – Metrics for Predictability. It says:

A forecast is a calculation about the future completion of an item or items that includes both a date range and a probability.

In other words, a forecast according to Vacanti is made up of:

- A calculation leading to a date range

- And a probability of reaching this date range

As the future is uncertain, we cannot be 100 % correct about future events, hence the probability part of the forecast.

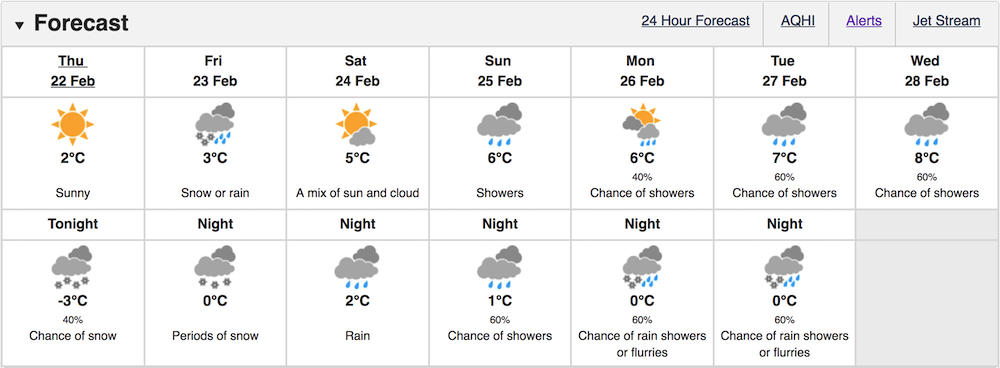

Going back to our weather forecast, we can look at a snapshot of a current weather forecast to see how this plays out. The image from Environment Canada shows the 7-day forecast for Vancouver in February. Notice how, in some cases, a probability is attached to a weather condition:

On the night of Sunday February 25, there is a 60 % chance (or probability) of showers for the city of Vancouver.

I believe we can use this definition in Agile software development. Instead of weather conditions, I believe our forecasts are either dates or numbers of completed Product Backlog Items (PBIs). For the rest of this post, when I talk about forecasting, please keep in mind that I am thinking of a calculated value with a probability.

Forecasting in Agile software development

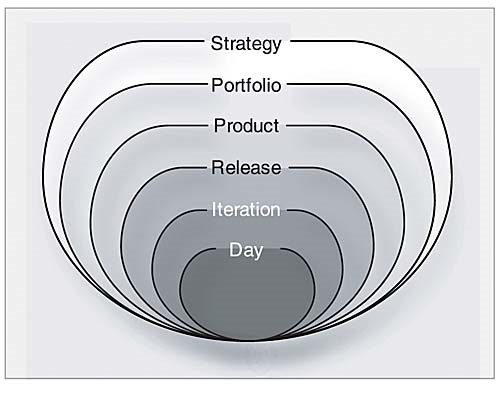

When I have an Agile planning/forecasting conversation at work, I’ve fallen back more than once on the Agile planning onion proposed by Mike Cohn back in 2009. I really like using the diagram from his article to drive the planning/forecasting conversation.

In the image above, each layer of the planning onion uses a different set of Agile practices. For example, at the Iteration layer, I use the user story practice to track work, while at the Day layer, we could use tasks with estimates in hours as another practice to track work. On both of these layers, I’ve often seen the sprint burndown used as the most popular chart to track progress and forecast the completion of work.

At the Release or Product layer, I’ve used the impact mapping practice as an activity to generate PBIs for our next release, and the epic to track a lump of work that spans a few iterations. I’ve seen the release burn up/down or the Sunset graph used as a means of forecasting a release.

Depending on which layer of the Agile planning onion we are at, we use different practices and tools to track and forecast the work. I believe the most popular Agile forecasting toolset named above is limiting our decision-making process because it operates on deterministic dates. These tools all point to a date in the future with no probability to express a level of confidence. As we’ve seen above, the future is uncertain and, to express the future, probabilities are of great value to narrow our decision-making process.

When I have an Agile planning/forecasting conversation using one of the charts named above, it falls mostly between the Day and Product layers of the onion. And for the last decade, my experience is that we’ve used estimation techniques (story points, velocity, tasks with hours) to forecast at those layers. I believe they have fallen short for the following reasons:

- They are based on estimates instead of historical data.

- They are deterministic, i.e. they point to an exact date or an exact amount of hours.

- When forecasts are used, they are based on averages.

- Estimates are (sometimes) compared to actual data to validate them.

I believe we have two problems with our current forecasting techniques:

- They are based on estimates

- They forecast the future without a probability

In the following sections, I present a set of tools that take a different direction from estimates. They are based on historical data generated by your team(s).

SLE – The forecast at the Day and Iteration layers

To talk about forecasting at the Day and Iteration layers, I use the Service Level Expectation (SLE) as defined in the Kanban Guide for Scrum teams.

A service level expectation (SLE) forecasts how long it should take a given item to flow from start to finish within the Scrum Team’s workflow

For example, let’s say that historically, your development team completes 85 % of its PBIs in 8 days or less. This is our forecast for an item. It has a calculated date range, 8 days or less, and a probability, 85 %.
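As a rough illustration of where such a number could come from, the sketch below derives an SLE from a list of historical cycle times using a nearest-rank percentile. The function name `service_level_expectation`, the sample data and the choice of nearest-rank are assumptions made for this example, not something prescribed by the Kanban Guide for Scrum Teams.

```python
import math


def service_level_expectation(cycle_times_days, percent=85):
    """Smallest number of days within which at least `percent` % of the
    historical items were completed (nearest-rank percentile)."""
    if not cycle_times_days:
        raise ValueError("need at least one completed item")
    ordered = sorted(cycle_times_days)
    # 1-based rank of the item that covers `percent` % of the history.
    rank = math.ceil(percent * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


# Hypothetical cycle times (in days) of the last 20 completed PBIs.
history = [1, 2, 2, 3, 3, 3, 4, 4, 5, 5, 5, 6, 6, 7, 7, 8, 8, 10, 12, 15]

sle_days = service_level_expectation(history, percent=85)
print(f"SLE: {sle_days} days or less, 85 % of the time")
```

With this made-up history, 17 of the 20 items finished in 8 days or less, so the sketch reports an SLE of 8 days at 85 %.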

As a PBI is moving through the Scrum Team’s workflow, I use its current age in the workflow and compare it with its corresponding SLE to make sure it will meet its forecast.

At our daily Scrum, we can use this forecast to keep track of our current work. If my PBI has been in the workflow for 3 days, I might not pay as much attention to it as to another PBI that is now on its 7th day in the workflow.

Instead of using tasks with estimated hours to track the remaining work, we use the combination of PBI age + SLE to monitor whether we will meet our forecast. I believe these are two valuable data points for tracking work. I also think they are more valuable than estimated hours, because I am using hard data (age and SLE) to track work.
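The age + SLE comparison can be sketched in a few lines. This is only an illustration: the PBI names, the dates, the 8-day SLE and the convention that the start day counts as day 1 (in calendar days) are all assumptions chosen for the example.

```python
from datetime import date

SLE_DAYS = 8  # assumed SLE: 85 % of PBIs finish in 8 days or less


def age_in_days(started_on, today):
    """Age in calendar days, counting the start day as day 1 (an assumption)."""
    return (today - started_on).days + 1


def attention_order(in_progress, today, sle_days=SLE_DAYS):
    """Return (name, age, share of the SLE already used), oldest item first."""
    rows = [(name, age_in_days(start, today)) for name, start in in_progress.items()]
    rows.sort(key=lambda row: row[1], reverse=True)
    return [(name, age, age / sle_days) for name, age in rows]


# Hypothetical in-progress PBIs and the day each one entered the workflow.
in_progress = {
    "PBI-A": date(2024, 3, 9),
    "PBI-B": date(2024, 3, 5),
}

board = attention_order(in_progress, today=date(2024, 3, 11))
for name, age, share in board:
    print(f"{name}: day {age} of {SLE_DAYS} ({share:.0%} of the SLE used)")
```

Sorting by age puts the PBI on its 7th day at the top of the list, which is the item the team would discuss first at the daily Scrum.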

I believe this fixes both problems named above. My team doesn’t spend any time estimating each task. It can still decompose the PBIs if required, but we compare the age of the PBI with the SLE of the team. My team also uses a probability in its forecasting, which leaves the door open to the uncertainty that can prevent it from meeting the SLE.

Throughput and Monte Carlo – The forecast at the Release and Product level

As we go higher in the layers of the onion, we move the conversation from one PBI to multiple PBIs. At the Release and Product layers of the onion, the Product Owner is now talking in terms of multiple PBIs. For example, a Product Owner might have 54 PBIs left to launch a release. Or she might have a deadline to meet and wonder how many PBIs can be completed by that date. In both situations, forecasting the future can help her narrow her decision-making process. And as we’ve seen above, forecasting the future requires a calculated value with a probability.

My experience has been that Monte Carlo simulations, using throughput as their input, can generate a forecast for those layers of the onion. Basically, the Monte Carlo simulations use your historical throughput to simulate the future a large number of times (e.g. 10,000 times).
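To make the idea concrete, here is a minimal sketch of such a simulation. It assumes throughput is recorded as the number of PBIs completed per day and that each simulated day is drawn at random from that history; the function names and the sample data are hypothetical, and real tools may sample differently.

```python
import math
import random


def simulate_days_to_finish(daily_throughput, remaining_items, runs=10_000, seed=None):
    """Simulate `runs` possible futures and return the sorted number of days
    each one needed to complete `remaining_items`."""
    if max(daily_throughput) <= 0:
        raise ValueError("history must contain at least one productive day")
    rng = random.Random(seed)
    outcomes = []
    for _ in range(runs):
        done, days = 0, 0
        while done < remaining_items:
            done += rng.choice(daily_throughput)  # replay a random past day
            days += 1
        outcomes.append(days)
    return sorted(outcomes)


def days_at_confidence(sorted_outcomes, percent):
    """Number of days within which `percent` % of the simulated futures finished."""
    rank = math.ceil(percent * len(sorted_outcomes) / 100)
    return sorted_outcomes[max(rank, 1) - 1]


# Hypothetical history: PBIs completed on each of the last 15 working days.
history = [0, 1, 2, 3, 1, 0, 4, 2, 3, 2, 1, 5, 0, 2, 3]

outcomes = simulate_days_to_finish(history, remaining_items=54, seed=1)
for percent in (30, 50, 70, 90):
    print(f"{percent} % confidence: {days_at_confidence(outcomes, percent)} days or less")
```

Each line of output is a forecast in the sense defined earlier: a calculated range (a number of days or less) paired with a probability.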

Let’s take the example above and see how the results of the Monte Carlo simulations can help the Product Owner. As mentioned above, the Product Owner has 54 remaining PBIs to complete her release. Running the Monte Carlo simulations with her historical throughput, she gets the following results:

Her first forecast, 25 days with 90 % confidence, says there’s a 90 % chance her team can deliver the remaining 54 PBIs of the release within the next 25 days. At the far right, there’s a 30 % chance they will deliver them within 16 days. She can now go back to the business to discuss whether 25 days is acceptable. If they would prefer to have it in 14 days, she can reply that this scenario has less than a 30 % chance of happening.
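The Product Owner’s other question, how many PBIs can be completed by a fixed deadline, can be approached the same way. The sketch below is one possible take on it, again assuming daily throughput sampled at random from history; the names, the 14-day window and the data are made up for illustration.

```python
import math
import random


def simulate_items_by_deadline(daily_throughput, days_available, runs=10_000, seed=None):
    """Simulate `runs` possible futures and return the sorted number of PBIs
    completed in each one over `days_available` days."""
    rng = random.Random(seed)
    totals = [
        sum(rng.choice(daily_throughput) for _ in range(days_available))
        for _ in range(runs)
    ]
    return sorted(totals)


def items_at_confidence(sorted_totals, percent):
    """Number of PBIs reached or exceeded in at least `percent` % of the runs.
    Higher confidence means reading further toward the low end of the results."""
    index = math.floor((100 - percent) * len(sorted_totals) / 100)
    return sorted_totals[min(index, len(sorted_totals) - 1)]


# Hypothetical history: PBIs completed on each of the last 15 working days.
history = [0, 1, 2, 3, 1, 0, 4, 2, 3, 2, 1, 5, 0, 2, 3]

totals = simulate_items_by_deadline(history, days_available=14, seed=1)
for percent in (90, 70, 50, 30):
    print(f"{percent} % confidence: {items_at_confidence(totals, percent)} PBIs or more")
```

Note that the direction flips compared with a date forecast: the more confidence the business wants, the fewer PBIs the Product Owner can promise for the deadline.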

For a more detailed read on Monte Carlo simulations and a tool to help you do them quickly, I recommend the post Create Faster and More Accurate Forecasts using Probabilities from my fellow PST Julia Webster.

The decade in front of us

As Agile is now the new traditional software development approach, I can only wish the forecasting techniques described above will also become the new traditional forecasting techniques. Estimations in story points and hours helped us adopt Agile in the last decade. While imperfect, they reassured the business when it asked for dates.

I believe we are now ready to use historical data to do Agile forecasting in the decade in front of us. We already have the data in our teams. The toolset exists and is accessible. The only thing missing is for people to learn, understand and master those new techniques. I’ll cross my fingers that in 2030, conversations about our forecasts will be in probabilities. Even better, I’ll forecast with a 90% level of confidence that it will happen.

References

- https://www.almanac.com/content/difference-between-old-farmers-almanac-and-other-almanacs

- https://en.wikipedia.org/wiki/Old_Farmer%27s_Almanac

- https://www.volcanodiscovery.com/tambora.html

Business & Finance Articles on Business 2 Community
