Amazon, Microsoft Struggle With AI Inaccuracies

Amazon and Microsoft have their backs against the wall. The companies are facing a backlash over whether generative artificial intelligence (GAI) is mature enough to take on certain complicated tasks and provide accurate answers.

With Amazon, the focus is on product reviews. In the case of Microsoft, it’s about reliably sourcing election information.

Earlier this year, Amazon began using GAI to help customers better understand what others say about products, without having to read through dozens of individual reviews.

It uses the technology to provide a short paragraph of text right on the product detail page that highlights the product features and customer sentiment. That strategy may be backfiring.

In some cases, these AI-generated summaries have described products inaccurately. They have also exaggerated negative feedback. The consequences fall on customers, as well as on merchants that sell in the marketplace.

Merchants told Bloomberg that the summaries were implemented just as they were headed into the crucial holiday shopping season.

Jon Elder runs the Amazon seller consulting firm Black Label Advisor. He told Bloomberg that the AI can highlight a handful of negative comments even when they are not a consistent theme.

The technology seems to “overplay negative sentiment,” though this is less obvious in some cases. In one example, the $70 Brass Birmingham board game has a 4.7-star rating based on feedback from more than 500 shoppers, yet its three-sentence AI summary ends by noting that some consumers have mixed opinions on ease of use. Only four reviews, less than 1% of the overall ratings, mention ease of use in a way that could be interpreted as critical.

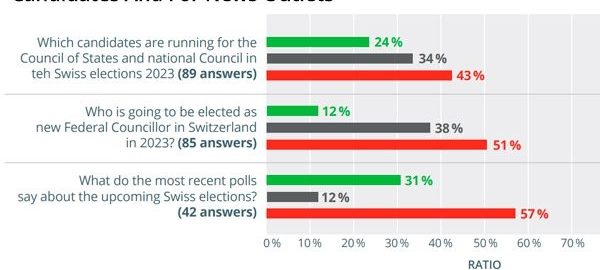

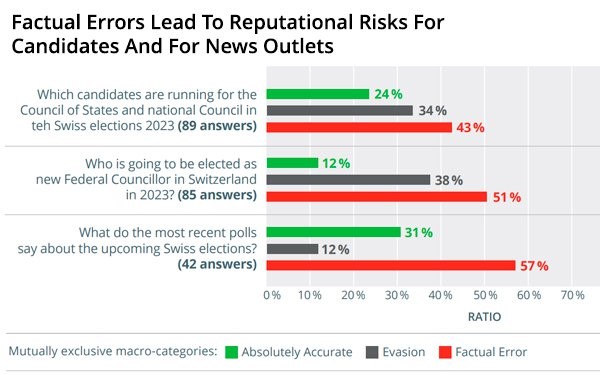

Research from European nonprofits finds that the recently rebranded Microsoft Copilot, previously the Bing AI chatbot, gave inaccurate answers to one out of every three basic questions about electoral candidates, polls, scandals and voting in a pair of recent election cycles in Germany and Switzerland. The chatbot misquoted its sources in some cases.

The report, “Generative AI and elections: Are chatbots a reliable source of information for voters?”, was published by AI Forensics, a European nonprofit that investigates influential and opaque algorithms, along with other organizations in Germany and Switzerland. It questions whether chatbots can provide accurate information about elections.

The report does not claim that misinformation from the Bing chatbot influenced the elections’ outcome, but it reinforces concerns that AI chatbots could contribute to confusion and misinformation around future elections.

The chatbot’s inconsistency is itself persistent, according to the report, which states that the answers did not improve over time.

The inaccuracies included giving the wrong date for elections, reporting outdated or mistaken polling numbers, listing candidates who had withdrawn from the race as leading contenders, and inventing controversies about candidates in a few cases.

“The chatbot’s safeguards are unevenly applied, leading to evasive answers 40% of the time,” according to the report. “The chatbot often evaded answering questions. This can be considered as positive if it is due to limitations to the LLM’s ability to provide relevant information.”

The safeguards are not applied consistently. The chatbot often could not answer simple questions about the candidates for the respective elections, which devalues the tool as a source of information.

This research took place in Europe, but similar questions about the 2024 U.S. elections drew inaccurate responses as well.

The researchers state that Microsoft has not done enough to address the issues raised in this report, but the Redmond, Washington-based company acknowledged the problems and promised to fix them.
