About A/B Testing

In the simplest possible terms, A/B testing is the process of serving two or more variants of a user experience to otherwise identical audiences, and then establishing which ‘works’ best according to agreed criteria.
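
In practice, ‘otherwise identical audiences’ usually means assigning users to variants at random, but in a repeatable way, so that each user keeps seeing the same variant. As a minimal sketch, assuming each user has a stable string ID, the Python below hashes that ID together with a hypothetical experiment name. Real testing platforms handle this for you and may use different schemes.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants: list[str]) -> str:
    """Deterministically map a user to one variant of an experiment."""
    # Hash the experiment name together with the user ID, so the same user
    # can land in different buckets for different experiments.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Treat the digest as a large integer; taking it modulo the number of
    # variants splits users approximately evenly between them.
    return variants[int(digest, 16) % len(variants)]


# Hypothetical experiment: the same user always gets the same answer.
print(assign_variant("user-42", "onboarding-copy", ["A", "B"]))
```

Because assignment depends only on the user ID and experiment name, no lookup table is needed to keep a user’s experience consistent across sessions.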

It is, of course, an incredibly powerful and popular technique. After all, the alternative is making decisions based on ‘gut’, or what the most senior or most persuasive person in the room wants to do, a process with a decidedly mixed track record. Any organization that spends significant amounts of time arguing about creative decisions around a table would do well to adopt a testing culture and rely on user data to help ‘settle the argument’.

This post looks at how to build that culture, assemble an A/B testing team and, as a result, get the most out of A/B testing. Along the way, we’ll also look at the common failure points that so often limit the potential of the testing approach. We won’t go into detail on designing and conducting the tests themselves, but we will set you up to make that process a success.

Building A Culture: Aligning The Organization

It sounds obvious, but the first and single most important step in building an A/B testing culture is ensuring that there is a commitment, at every relevant level, to conduct testing and abide by the results.

At this point, some readers may be asking themselves whether it’s really possible that an organization would invest time and money in creating an A/B testing program only to ignore the hard-won results that it delivers.

The answer is emphatically “yes”. In fact, in our experience this is probably the number one reason A/B testing programs fail. That raises another question: why would this happen? The answer almost always lies with the prejudices and opinions of senior team members. A/B testing is used and accepted when it confirms those prejudices, and ignored when it contradicts them. In other words, good old confirmation bias.

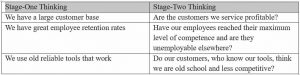

It is easy to sit in judgement from the safe distance of a blog post, but the truth is that we are all prone to this attitude. If the CEO of a company feels a certain way, or a competitor we admire does things in a particular style, it can be hard to admit that they are wrong (or to be the one who tells them). So what’s the best way to ensure your tests truly change the way you build mobile apps?

First, always make clear, before the test runs, what you propose to learn and achieve. That way everyone involved can buy into the test before it begins rather than picking it apart after the results are in. Those with strong opinions can make sure their concerns are addressed in the test design, which in turn makes them more likely to accept whatever results emerge.

Second, foster a ‘fail faster’ start-up culture (whatever the size of your business) that promotes and rewards learning. Setting too much store by ‘being right’ leads to defensiveness and negativity, and ultimately slows progress towards optimal design choices. So if you want A/B test results to be accepted, and to start making a difference to your business, you need to start rewarding the people willing to admit they were wrong.

Promoting Testing: Putting A Structure In Place

Success breeds buy-in. In order to align the team and organization behind an A/B testing program it is vital to structure that activity in a way that supports learning and clearly communicates success. This means both testing the right things and measuring in the correct way, and perhaps most importantly it means recording and communicating the results of all tests in a structured way. Build a spreadsheet and work from it.
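
As a sketch of what a structured test log might look like, the Python below defines one record per test and writes the log out as CSV, which opens directly in any spreadsheet tool. The field names (`test_id`, `hypothesis`, `primary_metric`, `result`, `learning`) are our own assumption rather than a standard format; adapt them to your own process.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class ExperimentRecord:
    """One row in a hypothetical A/B test log (field names are illustrative)."""
    test_id: str
    hypothesis: str      # what we expect to learn, written down before the test
    primary_metric: str  # the single metric agreed in advance
    result: str          # e.g. "B beat A" or "no significant difference"
    learning: str        # the general lesson, not just the winner


def write_log(records: list[ExperimentRecord]) -> str:
    """Serialize records to CSV text that a spreadsheet can open."""
    buffer = io.StringIO()
    names = [f.name for f in fields(ExperimentRecord)]
    writer = csv.DictWriter(buffer, fieldnames=names)
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))
    return buffer.getvalue()


# Example entry; the values are placeholders, not real results.
log = write_log([
    ExperimentRecord(
        test_id="T-001",
        hypothesis="Short, focused onboarding copy beats longer, detailed copy",
        primary_metric="onboarding_completion_rate",
        result="pending",
        learning="pending",
    )
])
print(log)
```

Recording the hypothesis and the learning alongside the result is what lets the log double as a record of what the organization has actually learned.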

The range of things you could test can be overwhelming, and running tests at random on trivial aspects of app design and function is a surefire way to learn little and endanger commitment to a testing culture. The right structure, on the other hand, can help enormously.

Here are some elements and approaches you’ll want to put in place:

Ensure all tests are focused on learning rather than simply finding a winner. To put this another way, always seek to define tests so that they teach you something that generalizes beyond the specific result. For example, ‘copy A beat copy B’ is not a particularly helpful result if neither piece of copy will ever be used again. ‘Short, focused copy beat longer, more detailed copy’, however, is. That learning won’t hold true in every situation, of course, but it provides a place to start.

Where possible, try to link tests to concrete business goals. When deciding what to test, take agreed business objectives and work back from them. Consider common user flows that lead to key revenue events and model them with funnel analysis to find where issues arise. Zoom in on those areas. Get to the point where each test can be clearly justified based on potential impact on the bottom line.
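
Funnel analysis of this kind boils down to comparing how many users reach each step of a flow and finding the transition where the largest share drop out. A minimal Python sketch, assuming you can already export per-step user counts from your analytics tool; the step names and counts in the example are made up for illustration.

```python
def funnel_dropoff(steps: list[tuple[str, int]]) -> list[tuple[str, str, float]]:
    """Conversion rate between each pair of consecutive funnel steps."""
    transitions = []
    for (prev_name, prev_count), (name, count) in zip(steps, steps[1:]):
        rate = count / prev_count if prev_count else 0.0
        transitions.append((prev_name, name, rate))
    return transitions


def weakest_step(steps: list[tuple[str, int]]) -> tuple[str, str, float]:
    """The transition with the lowest conversion: a candidate area to zoom in on."""
    return min(funnel_dropoff(steps), key=lambda t: t[2])


# Hypothetical counts of users reaching each step of a purchase flow.
purchase_flow = [
    ("open_app", 10_000),
    ("view_product", 6_000),
    ("add_to_cart", 1_500),
    ("purchase", 900),
]
for prev, step, rate in funnel_dropoff(purchase_flow):
    print(f"{prev} -> {step}: {rate:.0%}")
print("Weakest step:", weakest_step(purchase_flow))
```

With these made-up numbers, the view-product to add-to-cart transition converts worst (25%), so that is where tests would be focused first. In a real program you would also weigh each step by its revenue impact, not by conversion rate alone.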

Keep sight of the big picture. A common mistake in A/B testing is to miss the wood for the trees, relentlessly optimizing rather than reinventing. There’s no need to fall into this trap. Most A/B testing platforms let a team show radical variants to just a small subset of users. Use that ability to answer the big ‘what if?’ questions and keep innovating at a fundamental level.
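
Showing a radical variant to only a small share of users is usually done with weighted allocation. A minimal Python sketch, assuming stable user IDs: each user is hashed to a fixed point between 0 and 1, and the traffic weights (here a hypothetical 95/5 split) carve that range into variants. Testing platforms typically expose this as a setting; the code only illustrates the idea.

```python
import hashlib


def bucket_point(user_id: str, experiment: str) -> float:
    """Map a user to a stable point in [0, 1) for a given experiment."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    # Keep the top 53 bits so the result is exactly representable and below 1.
    return (int.from_bytes(digest[:8], "big") >> 11) / 2**53


def assign_weighted(user_id: str, experiment: str, allocation: dict[str, float]) -> str:
    """Choose a variant according to traffic weights that sum to 1."""
    point = bucket_point(user_id, experiment)
    cumulative = 0.0
    for variant, weight in allocation.items():
        cumulative += weight
        if point < cumulative:
            return variant
    # Fallback in case floating-point rounding leaves the weights just short of 1.
    return list(allocation)[-1]


# Hypothetical split: 95% see the current design, 5% a radical redesign.
allocation = {"current_design": 0.95, "radical_redesign": 0.05}
print(assign_weighted("user-42", "home-screen-rethink", allocation))
```

Keeping the exposed share small limits the downside if the radical idea performs badly, at the cost of needing longer to collect enough data on it.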

Agree what success looks like before testing. It’s great to have huge amounts of data at your fingertips, but it also means that if you trawl through enough metrics after the fact looking for a success, you will probably find one, purely by chance. Be rigorous and structured, and agree before the test begins which metric it should influence. Report on that metric and no others (but do look for unintended effects and, if necessary, run another test to confirm them).
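
One way to make that agreement concrete is to write the plan down, primary metric included, and have the analysis refuse to report on anything else. The post doesn’t prescribe a statistical method; the sketch below assumes a conversion-style metric and uses a standard pooled two-proportion z-test as one common choice. `TestPlan`, `evaluate` and the example values are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TestPlan:
    """Hypothetical pre-registration, written down before the test starts."""
    hypothesis: str
    primary_metric: str
    alpha: float = 0.05  # significance threshold agreed in advance


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for a difference in conversion rates (pooled z-test)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # 2 * (1 - Phi(|z|)) simplifies to erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


def evaluate(plan: TestPlan, metric: str, conv_a: int, n_a: int,
             conv_b: int, n_b: int) -> bool:
    """True if the difference on the pre-registered metric is significant."""
    # Refuse to report on any metric other than the one agreed up front.
    if metric != plan.primary_metric:
        raise ValueError(
            f"{metric!r} is not the pre-registered metric {plan.primary_metric!r}"
        )
    return two_proportion_p_value(conv_a, n_a, conv_b, n_b) < plan.alpha


plan = TestPlan(hypothesis="Short copy increases sign-ups", primary_metric="signup_rate")
# Illustrative counts only: conversions and users for variants A and B.
print(evaluate(plan, "signup_rate", conv_a=100, n_a=1000, conv_b=150, n_b=1000))
```

A fixed threshold such as `alpha = 0.05` only means what it claims if the sample size is also decided up front and the test isn’t stopped the moment the p-value happens to dip below it.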

Measure the right things. There’s a tension in A/B testing between reporting on what is easy to measure and reporting on what is right to measure. As you can probably guess, we recommend the latter. Don’t get too bogged down in metrics like click-through rates; keep some focus on the overall impact of tests on higher-level metrics.

Building An A/B Testing Team

Implementing a structured A/B testing program implies committing internal resources to the project and, at least in some sense, building a team dedicated to running and reporting tests. When building that team, keep the following recommendations top-of-mind.

Everyone is on the team. As noted above, if buy-in doesn’t exist at every level, testing programs will have a diminished impact. It is important to embed that culture and build support across, and upwards within, the organization.

Keep active teams small and focused. A/B testing is fundamentally a ‘start-up’ approach and demands a start-up mentality. Whilst it may be tempting to hire a large team to demonstrate commitment, doing so is likely to be counter-productive. Small, focused groups of people who are committed to change and actively enjoy challenging the status quo are always preferable to larger structures that inevitably become more corporate. Note, though, as above, that these teams must themselves be data-driven and structured in their approach. Lone wolves need not apply!

Build to reduce dependence on development. Ideally, creating, running and reporting on an A/B test requires NO development or engineering work whatsoever. To get there, make sure the core approach to development creates a framework within which a non-technical team can run A/B tests easily. In broad terms, that means separating logic from data, and giving your A/B testing team the means to edit the data without touching the logic. And of course, it means having at least one member of the development team who is aware of this requirement and liaises with the A/B testing team to ensure it is met.
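
In code terms, separating logic from data can be as simple as keeping variant content in a configuration document that the testing team edits, while the app code only knows which settings exist. A minimal Python sketch; the JSON layout, the setting names and the idea of fetching this document from a remote config service are all assumptions for illustration.

```python
import json

# Data: edited by the A/B testing team (in practice perhaps served from a
# remote config service). The experiment and setting names are hypothetical.
VARIANT_CONFIG = """
{
  "onboarding-copy": {
    "A": {"headline": "Welcome! Here is everything the app can do for you.", "show_tour": true},
    "B": {"headline": "Welcome!", "show_tour": false}
  }
}
"""

# Safe defaults used when an experiment or variant is missing from the config.
DEFAULTS = {"headline": "Welcome!", "show_tour": True}


def load_config(raw: str) -> dict:
    return json.loads(raw)


def screen_settings(config: dict, experiment: str, variant: str) -> dict:
    """Logic: overlay a variant's settings on the defaults; no copy is hard-coded."""
    overrides = config.get(experiment, {}).get(variant, {})
    return {**DEFAULTS, **overrides}


def render_onboarding(settings: dict) -> str:
    # The app code knows which settings exist, not what their values are.
    lines = [settings["headline"]]
    if settings["show_tour"]:
        lines.append("[Start tour]")
    return "\n".join(lines)


config = load_config(VARIANT_CONFIG)
print(render_onboarding(screen_settings(config, "onboarding-copy", "A")))
```

Launching a new copy test then means editing the JSON, not shipping a new build, which is the kind of independence from development the paragraph above describes.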

Focus on analytics and data science. The core skill in any A/B testing team is the ability to interpret current reality (as shown in app analytics), identify possible improvements and the changes that might deliver them, and see patterns and meaning in test results. That means giving control of an A/B testing program to one or more people with a strong background in data science or a similar discipline. As above, they should be empowered by a surrounding organization that is committed to A/B testing and ‘ready to learn’.

Build a close partnership with the creative side of the organization. You’ll need creative talent on the team to iterate rapidly and build variants to spec. Within an A/B testing structure, that talent needs to adopt the mindset that creativity is not compromised but empowered by the testing culture (an attitude rarer than you might think). An ideal team brings the creative and scientific aspects of the program together in a small group of people, each of whom understands the value of both.

