Google-Backed Anthropic Releases Claude 3, More Powerful Chatbot

Anthropic on Monday debuted Claude 3, a chatbot and suite of AI models that it calls its fastest and most powerful yet.

The company’s backers include Google, Salesforce and Amazon. It has closed about five funding deals during the past year, totaling about $7.3 billion.

Google Cloud said it will make the Claude models available in Vertex AI Model Garden during the coming weeks. The garden is a collection of pre-trained machine-learning models and tools.

The announcement may seem a little odd, as if it were a prelude to news that Google, Amazon and Salesforce will jointly acquire Anthropic. Still, the new chatbot series can summarize a lengthy book of about 200,000 words, compared with about 3,000 words for ChatGPT.

The latest version from Anthropic also accepts image uploads for the first time.

With this release, Anthropic — a company founded by former OpenAI research executives — now offers multimodal support. Users can upload photos, charts, documents and other types of unstructured data for analysis and answers.

The family of AI models includes Claude 3 Haiku, Claude 3 Sonnet, and Claude 3 Opus.

Each successive model offers increasingly powerful performance, allowing users to select the optimal balance of intelligence, speed, and cost for their specific application.

Sonnet and Haiku are more compact and less expensive than Opus. Sonnet and Opus are available in 159 countries starting today, while Haiku is coming soon, according to Anthropic.

Anthropic did not specify how long it took to train Claude 3 or how much it cost, but said companies like Airtable and Asana helped A/B test the models.

All Claude 3 models show increased capabilities in analysis and forecasting, nuanced content creation, code generation, and conversing in non-English languages like Spanish, Japanese, and French.

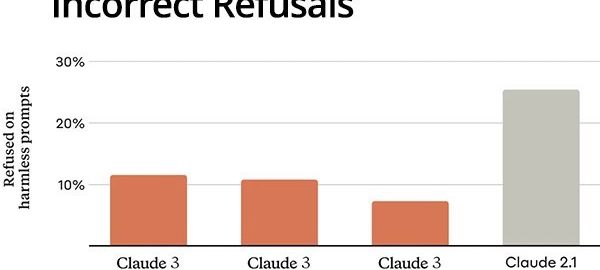

Anthropic said previous Claude models often made unnecessary refusals, suggesting a lack of contextual understanding. Opus, Sonnet, and Haiku are now significantly less likely than previous generations to refuse prompts that border on the system’s guardrails.

The Claude 3 models show a more nuanced understanding of requests, recognize real harm, and refuse to answer harmless prompts much less often. They can process a wider range of visual formats like photos, charts, graphs and technical diagrams.

To measure accuracy, Anthropic used a large set of complex, factual questions that target known weaknesses in current models. It categorized the responses into correct answers, incorrect answers (or hallucinations), and admissions of uncertainty, in which the model says it doesn’t know the answer instead of providing incorrect information.

Anthropic said it will soon enable citations in Claude 3 models, so they can point to precise sentences in reference material to verify their answers. It’s not clear whether that will enable the company to create some sort of monetization and bring in advertising.
