Google Algorithms Can Tell When Sources Agree On Facts

Google designs its ranking systems to serve relevant information from the most reliable sources that demonstrate expertise, authoritativeness and trustworthiness.

Google's latest artificial intelligence (AI) model, the Multitask Unified Model (MUM), helps by identifying and prioritizing signals related to reliability. Google makes thousands of improvements to Search each year.

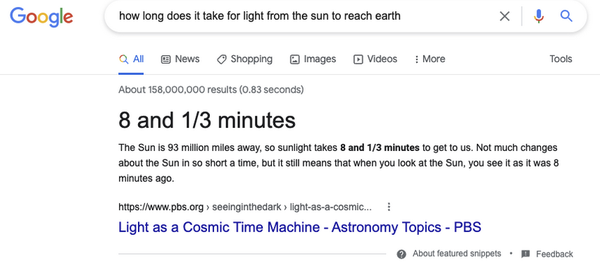

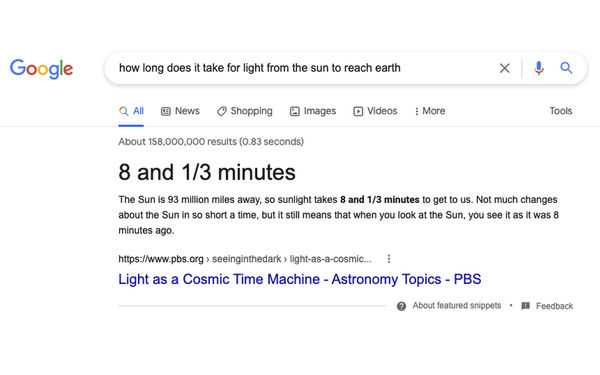

On Thursday, Google announced technology to improve the quality of featured snippets. A featured snippet is the descriptive box at the top of the results page that, in response to a search query, highlights a piece of information from a result along with its source. Featured snippets also drive traffic to publishers, retailers and other websites.

MUM helps the systems “understand the notion of consensus, which is when multiple high-quality sources on the web all agree on the same fact,” Pandu Nayak, vice president of search and Google Fellow, wrote in a blog post. “Our systems can check snippet callouts (the word or words called out above the featured snippet in a larger font) against other high-quality sources on the web, to see if there’s a general consensus for that callout, even if sources use different words or concepts to describe the same thing.”

He wrote that this "consensus-based technique" has improved the quality and helpfulness of featured snippet callouts.

Google has also built information literacy features into Search that help people evaluate information, whether they found it on social media or in conversations with family or friends. In a study published this year, researchers found that people regularly use Google as a tool to validate information they find on other platforms.

On Thursday, Google also launched updates to several features, including Fact Check Explorer, reverse image search and About this result.

For example, About this result, which lets searchers see more context about a result before visiting the web page, is now available in the Google app. It also now includes more information, such as how widely a source is circulated, online reviews of the source, whether a company is owned by another company, and a notice when Google's systems can't find much information about a source.

Google also addressed situations where little reliable information about a given subject is available online because, as Nayak put it, information sometimes moves faster than facts.

“To address these, we show content advisories in situations when a topic is rapidly evolving, indicating that it might be best to check back later when more sources are available,” he wrote.

Google has expanded content advisories to searches where its systems do not have high confidence in the overall quality of the available results. These notices provide context about the entire set of results on the page. Google still shows the results for the query even when an advisory is present.

Google has also made educating people about misinformation a priority. Since 2018, the Google News Initiative (GNI) has invested nearly $75 million in projects and partnerships working to strengthen media literacy and combat misinformation around the world.

Partnerships announced Thursday build on that work. They include an expanded partnership with MediaWise at the Poynter Institute for Media Studies and PBS NewsHour Student Reporting Labs to develop information literacy lesson plans for middle and high school teachers.

"Today's announcement builds on the GNI's support of its microlearning course through text and WhatsApp called Find Facts Fast," Nayak wrote.
