Google is rolling out its AI-native search to all users

AI search returns a custom-built bundle of text and links in response to a user’s plain-language query.

Google said at its I/O developer event Tuesday that it’s rolling out its AI-native search to all users for the first time. Previously, users could try Google’s chatbot-based search, then called “Search Generative Experience” (SGE), only by opting in through Google Labs. Now AI search will be available to all users searching for certain subjects or products.

Unlike traditional Google search, AI search returns a custom-built bundle of text and links, called “AI Overviews,” in response to a user’s plain-language query.

Google VP of Search Liz Reid told Fast Company that Google’s AI search is powered by the company’s newest and most powerful large language model, Gemini. She also confirmed that Google has been testing the new AI search with a small number of users who have not opted in to SGE.

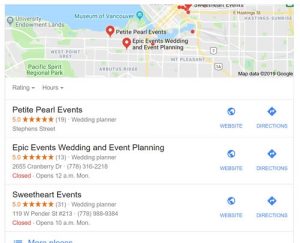

Reid pointed out that not every query will invoke the AI search experience. Navigational queries for specific websites, and quick questions with short answers, she said, are better served by traditional (non-AI) search results, i.e., a ranked list of links. But for more complex or exploratory searches, such as vacation planning or product queries, Google’s AI search will assemble a custom answer that pulls information from multiple websites and from Google’s own databases, such as local business data and maps.

“The fact that you’re not limited by [whether] your question is directly matched with one web page that has the full answer, but you can pull [from] across the corpus of human knowledge really changes the question,” Reid said in an interview with Fast Company on Monday.

Publishers and creators worry that users will simply get the information they need from the custom answer and never click through to their websites. But Google says that in its tests of the feature, users were actually more likely to click through to publisher sites for further information. Still, the company addressed that concern directly in a press release: “As we expand this experience, we’ll continue to focus on sending valuable traffic to publishers and creators,” the release reads.

The AI search Google showed Tuesday at I/O has a couple of new wrinkles that weren’t part of SGE. Users can now show Gemini a video of a problem they’re having, and the AI can analyze it and explain how to fix it. (Reid used the example of someone whose turntable tonearm wouldn’t stay in place.)

“You don’t even know what’s called the tonearm and you just take a video where it’s moving poorly and say ‘why is this not staying in place?’” Reid told Fast Company. “And it figures out what is the model of the record player. It then tells you that it’s the tonearm. It tells you how to balance the tonearm.”

Google has been under increasing pressure to offer an AI-native “conversational” search experience. Since ChatGPT arrived in late 2022, consumers have increasingly used AI tools to find content on the internet. Google frames LLMs and chatbots as the latest in a long line of technologies it has used to improve search.

OpenAI, Google’s chief rival in the area of generative AI, is rumored to be preparing its own chatbot-based AI search capability. The startup Perplexity has also impressed many in the AI space with its AI-native search, which it calls an “answer engine.”

“Technology shifts can be medium-size or they can be big, or they can be giant,” Reid said. “This is a giant technology shift, so we think our ability to expand what’s possible is much bigger than, like, five years ago.”
