Google: What Is A Red Team?

Google now has a red team charged with testing the security of artificial intelligence (AI) systems by running simulated but realistic attacks to uncover vulnerabilities or other weaknesses that could be exploited by cybercriminals.

Red teams are groups of hackers, working for or on behalf of companies, who simulate cyberattacks and advanced persistent threats (APTs) with the goal of helping organizations identify security vulnerabilities in their systems.

“We’ve already seen early indications that investments in AI expertise and capabilities in adversarial simulations are highly successful,” Daniel Fabian, head of Google Red Teams, wrote in a recent blog post. “Red team engagements, for example, have highlighted potential vulnerabilities and weaknesses, which helped anticipate some of the attacks we now see on AI systems.”

Google published a report exploring red teaming, a critical capability it is deploying to support its Secure AI Framework (SAIF). The company believes red teaming will play a role in preparing every organization for attacks on AI systems. The report examines Google's work and addresses three areas: what red teaming is in the context of AI systems and why it is important, what types of attacks AI red teams simulate, and the lessons Google has learned that it can share with others.

A key responsibility of Google's AI Red Team is to take relevant research and adapt it to work against real products and features that use AI, in order to learn about the research's real-world impact.

“Exercises can raise findings across security, privacy, and abuse disciplines, depending on where and how the technology is deployed,” Fabian wrote. “To identify these opportunities to improve safety, we leverage attackers’ tactics, techniques and procedures (TTPs) to test a range of system defenses.”

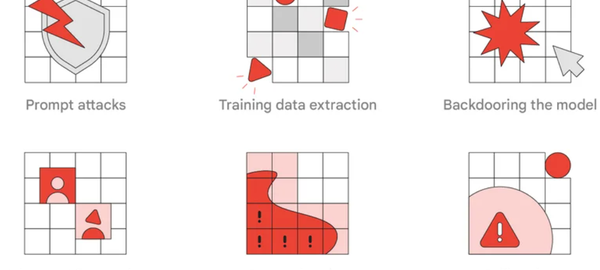

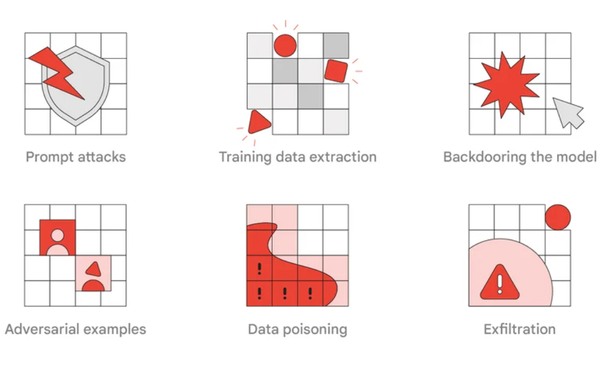

The report lists the TTPs that Google considers most relevant and realistic for real-world adversaries and red teaming exercises. They include prompt attacks, training data extraction, backdooring the model, adversarial examples, data poisoning, and exfiltration.

Google also shared the lessons it has learned from this work in a blog post.

These include:

- Traditional red teams are a good starting point, but attacks on AI systems quickly become complex and will benefit from AI subject matter expertise.

- Addressing red team findings can be challenging, and some attacks may not have simple fixes, so Google encourages organizations to incorporate red teaming into their work feeds to help fuel research and product development efforts.

- Traditional security controls, such as ensuring that systems and models are properly locked down, can significantly mitigate risk.

- Many attacks on AI systems can be detected in the same way as traditional attacks.
