With artificial intelligence (AI)/machine learning (ML) models being developed for all kinds of marketing problems, what part should marketing play in developing and monitoring the applications that serve them?

If you have an artificial intelligence program, you also have a committee, team, or body that is providing governance over AI development, deployment, and use. If you don’t, one needs to be created.

In my last article, I shared the key areas for applying AI and ML models in marketing and how those models can help you innovate and meet client demands. Here I look at marketing’s responsibility for AI governance.

So, what is AI governance?

AI governance is what we call the framework or process that manages your use of AI. The goal of any AI governance effort is simple — mitigate the risks attached to using AI. To do this, organizations must establish a process for assessing the risks of AI-driven algorithms and their ethical usage.

The stringency of the governance is highly dependent on industry. For example, deploying AI algorithms in a financial setting could have greater risks than deploying AI in manufacturing. The use of AI for assigning consumer credit scores needs more transparency and oversight than does an AI algorithm that distributes parts cost-effectively around a plant floor.

To manage risk effectively, an AI governance program should look at three aspects of AI-driven applications:

- Data. What data is the algorithm using? Is the quality appropriate for the model? Do data scientists have access to the data needed? Will privacy be violated as part of the algorithm? (Although this is never intentional, some AI models could inadvertently expose sensitive information.) Because data may change over time, its use in the AI/ML model needs to be governed continually, not just at launch.

- Algorithms. If the data has changed, does it alter the output of the algorithm? For example, if a model was created to predict which customers will purchase in the next month, the data will age with each passing week and affect the output of the model. Is the model still generating appropriate responses or actions? Because the most common AI model in marketing is machine learning, marketers need to watch for model drift. Model drift is any change in the model’s predictions. If the model predicts something today that is different from what it predicted yesterday, then the model is said to have “drifted.”

- Use. Have those that are using the AI model’s output been trained on how to use it? Are they monitoring outputs for variances or spurious results? This is especially important if the AI model is generating actions that marketing uses. Using the same example, does the model identify those customers who are most likely to purchase in the next month? If so, have you trained sales or support reps on how to handle customers who are likely to buy? Does your website “know” what to do with those customers when they visit? What marketing processes are affected as a result of this information?
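The drift check described under Algorithms can be sketched in a few lines. This is a minimal illustration, not a prescribed method: it assumes the model outputs purchase-likelihood scores between 0 and 1, and it compares the spread of scores on two dates using the Population Stability Index (PSI), a commonly used drift measure. The function names, the 10-bucket split, and the 0.25 alert threshold are assumptions chosen for the example; your governance team would set its own.

```python
import math
from typing import List, Sequence


def population_stability_index(
    baseline: Sequence[float], current: Sequence[float], bins: int = 10
) -> float:
    """Compare two sets of model scores (assumed to lie in [0, 1]).

    Returns 0.0 when both sets fall into the score buckets in the same
    proportions; larger values mean the distribution has shifted more.
    """
    if not baseline or not current:
        raise ValueError("both score lists must be non-empty")

    floor = 1e-6  # keeps empty buckets from producing log(0)

    def bucket_shares(scores: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for score in scores:
            # A score of exactly 1.0 belongs in the last bucket.
            index = min(int(score * bins), bins - 1)
            counts[index] += 1
        return [max(count / len(scores), floor) for count in counts]

    base_shares = bucket_shares(baseline)
    curr_shares = bucket_shares(current)
    return sum(
        (curr - base) * math.log(curr / base)
        for base, curr in zip(base_shares, curr_shares)
    )


def has_drifted(
    baseline: Sequence[float], current: Sequence[float], threshold: float = 0.25
) -> bool:
    """Flag drift when PSI exceeds a threshold (0.25 is an assumed rule of thumb)."""
    return population_stability_index(baseline, current) > threshold


# Hypothetical scores: last month's predictions vs. this month's.
last_month = [0.05, 0.15, 0.25, 0.35, 0.45, 0.55, 0.65, 0.75, 0.85, 0.95]
this_month = [0.95] * 10  # the model now rates every customer as a likely buyer

print(has_drifted(last_month, last_month))
print(has_drifted(last_month, this_month))
```

A check like this gives marketing a concrete question to bring to the governance team: when the flag is raised, is it because customers changed, because the data aged, or because the model needs retraining?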

How should it be structured and who should be involved?

AI governance can be structured in various ways, with approaches ranging from highly controlled to self-monitored. The right approach depends heavily on the industry and on the corporate culture in which the governance program operates.

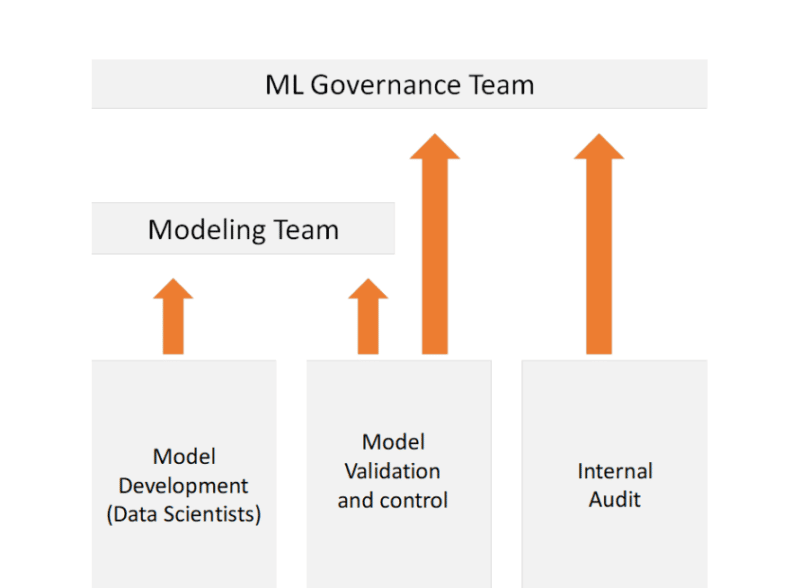

To be able to direct model development as well as validation and deployment, governance teams usually consist of both technical members who understand how the algorithms operate and leaders who understand why the models should work as planned. In addition, someone representing the internal audit function usually sits within the governance structure.

No matter how AI governance is structured, the primary objective should be a highly collaborative team that ensures the AI algorithms, the data they use, and the processes that use their outcomes are managed so that the organization complies with all internal policies and external regulations.

Here is a sample AI Governance design for an organization taking a centralized approach, common in highly regulated industries like healthcare, finance, and telecommunications:

What can marketers contribute to AI governance?

There are several reasons for marketing to be involved in the governance of AI models. All of these reasons relate to marketing’s mission.

- Advocating for customers. Marketing’s job is to ensure that customers have the information they need to purchase and continue purchasing, as well as to evangelize for the company’s offerings. Marketing is responsible for the customers’ experiences and for protecting the customers’ information. Because of these responsibilities, the marketing organization should be involved in any AI algorithm that uses customer information or that has an impact on customer satisfaction, purchase behavior or advocacy.

- Protecting the brand. One of marketing’s primary responsibilities is protecting the brand. If AI models are being deployed in any way that might hurt the brand image, marketing should step in. For example, if AI-generated creditworthiness scores are used to determine in advance which customers get the “family” discount, then marketing should play an important role in how that model is deployed. Marketing should be part of the team that decides whether the model will yield appropriate results. Marketing must always ask the question: “Will this situation change how our primary customers feel about doing business with us?”

- Ensuring open communications. One of the most often neglected areas of AI/ML model development and deployment is the storytelling that is required to help others understand what the models should be doing. Transparency and explicability are the two most important traits of good, governed AI/ML modeling. Transparency means that the models are fully understood by those creating them and those using them, as well as by the managers and leaders of the organization. If the AI Governance team cannot explain to internal business leaders what the model does and how it does it, the team runs a serious risk of also being unable to explain the model externally to government regulators, outside counsel, or stockholders. Communicating the “story” of what the model is doing and what it means to the business is marketing’s job.

- Guarding marketing-deployed AI models. Marketing should also be a big user of those AI/ML models that help determine which customers will purchase the most, which customers will remain customers the longest, which satisfied customers are likely to recommend you to other potential customers, and which customers are likely to churn. In this role, marketing should have a seat at the AI Governance table to ensure that customer information is well managed, that bias does not enter the model and that privacy is maintained for the customer.

But first, get to know the basics

I would like to say that your organization’s AI Governance team will welcome marketers to the table, but it never hurts to be prepared and to do your homework. Here are a few skills and capabilities to familiarize yourself with before getting started:

- AI/ML understanding. You should understand what AI/ML are and how they work. This does not mean that you need a Ph.D. in data science, but it is a good idea to take an online course on what these capabilities are and what they do. Most important, you should understand what impact to expect from the models, especially if they run the risk of exposing customer information or putting the organization at financial or brand risk.

- Data. You should be well-versed in what data is being used in the model, how it was collected and how and when it is updated. Selecting and curating the data for an AI model is the first place where bias can enter the algorithm. For example, if you are trying to analyze customer behavior around a specific product, you will usually need about three quarters’ worth of data, collected in the same way and curated so that the information is both complete and accurate. If it’s marketing data that the algorithm will be using, then your role is even more important.

- Process. You should have a good understanding of the process in which the algorithm will be deployed. If you are sitting on the AI Governance team as a marketing representative and the AI algorithms being evaluated are for sales, then you should familiarize yourself with that process and how and where marketing may contribute to the process. Because this is an important skill to have if you serve on the AI Governance team, many marketing teams will appoint the marketing operations head as their representative.

No matter what role you play in AI Governance, remember how important it is. Ensuring that AI/ML is deployed responsibly in your organization is not only imperative, but also an ongoing process, requiring persistence and vigilance, as the models continue to learn from the data they use.

The post Governing AI: What part should marketing play? appeared first on MarTech.
