How Google Multisearch — Combining Text And Image — Will Change SEO

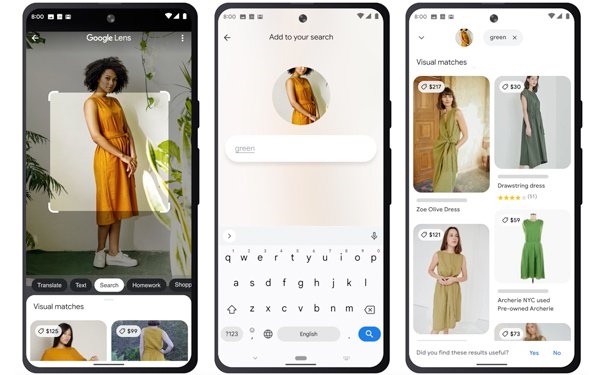

Google Lens now lets people ask questions about the image they are searching with.

Made possible by advancements in artificial intelligence, multisearch enables people to ask a question about an object or refine the search by color, brand or a visual attribute.

For example, someone could screenshot a purple dress and add the query “green” to find it in another color, take a photo of a dining set and add the query “coffee table” to find a matching table, or take a picture of an exercise machine and add the query “accessories.”

When asked how this will change search engine optimization (SEO), Marty Weintraub, founder of Aimclear, said the capability makes search more like social media. He said it was “stupid” that it took so long to invent a search capability that combines text and images on Google, Bing, or any other search engine.

“For social, paid and organic, you have to win it with the headline and image,” he said, pointing to an example in Pinterest. “It gives marketers a whole bunch more emotion to use in the creative. In terms of ranking indicators that occur from this hybrid, image vs text optimization, trying to control that, the horse already left the barn.”

SEO already is about more than words. It’s about phonetic clusters and meaning, and the entire site and what users do while on it, accessibility, and core web vitals, he said.

“How Google will regulate text on images will be interesting,” he said. “There are some preliminary guidelines, but for instance, in paid display advertising or organic social, we put text over the images, especially when we post in Facebook. Usually that text is advised by search.”

If Weintraub finds that a headline works in a $100,000 paid-search campaign, he will use the same headline in a paid-social campaign on Facebook.

“If you build it, they will spam it,” he said. “Words and images go together like peanut butter and chocolate, but some marketers will ruin it for everyone. It will be interesting seeing Google combine editorial requirements.”

For instance, there are some limits to what marketers can put in some images, and there are filters to prevent kids from discovering pornography in image search, he said.

“One thing is for certain,” he said, “it will not mitigate the need to buy paid search advertising. It will make organic real estate less on the page, and paid marketing more expensive. Google is not in the business of giving us free stuff.”

He also expects Google to create new ad units that exploit the format.

“Multisearch will most likely impact shopping sites more than any other vertical,” said Cory Hedgepeth, SEO marketing expert at 9rooftops. “A shopping site that displays clear, versatile product images, uses appropriate alt tags, and complements all of that with robust descriptions likely prospers.”

He said that owners and operators of product sites should pay attention to those three facets of page architecture.

“In some ways, I’d look at multisearch the same as I look at Google Images,” he said. “Products with clear images, proper descriptions and alt tags tend to do better than those without.”
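Hedgepeth's point about alt tags is easy to check on a product page. The following is a minimal sketch, not a tool mentioned by anyone quoted here: it uses Python's standard `html.parser` module to list images whose `alt` attribute is missing or blank. The class name `AltTextAudit`, the helper `images_missing_alt`, and the sample markup are all hypothetical, chosen only for illustration.

```python
from html.parser import HTMLParser


class AltTextAudit(HTMLParser):
    """Collect the src of every <img> whose alt attribute is missing or blank."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr_map = dict(attrs)
        alt = attr_map.get("alt")
        # A blank alt is valid for purely decorative images, but a product
        # photo without a description is worth flagging for review.
        if alt is None or not alt.strip():
            self.missing_alt.append(attr_map.get("src", "(no src)"))


def images_missing_alt(html):
    """Return the src values of images that lack descriptive alt text."""
    audit = AltTextAudit()
    audit.feed(html)
    return audit.missing_alt


# Hypothetical product-page fragment used only to exercise the check.
sample = (
    '<img src="dress-purple.jpg" alt="Purple wrap dress, front view">'
    '<img src="dress-green.jpg">'
    '<img src="coffee-table.jpg" alt=" ">'
)
print(images_missing_alt(sample))
```

A check like this only confirms that alt text exists. Whether the text is accurate and the image is clear still needs a human review.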

Google’s multisearch feature is available in beta on Android and iOS in English in the U.S. The company said it is exploring ways that the feature might be enhanced by the Multitask Unified Model (MUM), Google’s latest AI model in search, to improve results for all kinds of questions.

MediaPost.com: Search & Performance Marketing Daily
