— May 11, 2018

Early in the morning, you log into your Google Analytics account only to discover that your website is suddenly going downhill.

To be sure you’re not dreaming, you quickly perform a Google search for the same keywords and pages that were generating lots of traffic for your website.

Sadly, you notice that your rankings and traffic have plummeted.

Does this sound familiar?

Organic traffic drops are terrifying, especially when you have no clue what caused them.

In most cases, it’s natural to blame yourself for not doing more to prevent the situation. But often you simply can’t anticipate every circumstance in which your website might lose organic search traffic.

In every SEO campaign, the central objective is to generate and grow organic traffic to a website from the search engines.

This is partly because potential customers who arrive from the search engine results pages (SERPs) are the most targeted: they are already looking for what your website is about.

According to Internet Live Stats, “Google receives over 66,000 searches per second each day.”

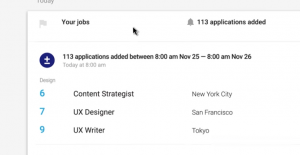

As you can see in the screenshot above, over 10 million searches were made on Google within just 4 minutes. Similarly, the 2016 Marketing Report by Marketing Drive revealed that:

“Marketers see search engine optimization (SEO) as becoming more effective, with about 82% reporting effectiveness is on the increase and about 42% of this group saying effectiveness is significantly increasing.”

This confirms that a successful SEO strategy lets you reach this audience, and your traffic will grow over time. That, in turn, affects your business and client acquisition in a very positive way.

Unfortunately, the SEO landscape has changed dramatically in recent times. Organic traffic comes and goes, and a website’s organic performance can seem as unstable as it gets. In the end, you might start asking yourself questions such as:

- What caused my organic traffic to drop?

- Did Google update its algorithm again?

- Was there any sort of SEO attack on my website or was it something that I unknowingly did?

And while you’re still searching for the reasons your hard-earned rankings and organic traffic suffered a blow, your frustration grows as the solution seems far away.

There can be various reasons for a sharp organic traffic drop on any website. You simply need to identify the problems and fix them as quickly as you can to avoid further damage.

Cheer up, because that’s exactly what this article will teach you how to do.

5 Simple Steps To Fixing Organic Traffic Drop

1. Check the Simple Things First

When you’re struggling to rank a website in the SERPs, it’s easy to focus on advanced tactics and underrate the importance of simple on-page SEO.

Why worry yourself about citation optimization, link velocity, or anchor text ratio, when the solution to your rankings problem could be a simple fix directly on your website?

Once you start to see organic traffic drop, ask yourself this question:

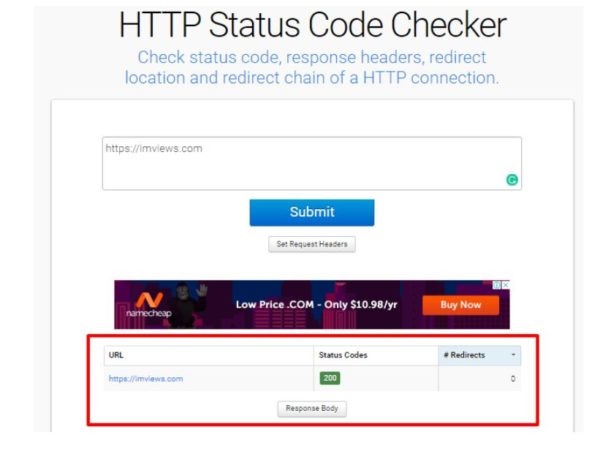

Does your website return a 200 status code?

A status code in the 200 series indicates that the server handled the request successfully. The standard 200 OK status code simply means the HTTP request succeeded.

You can use a free tool such as “HTTP Status Code Checker” to determine whether your website is actually returning a successful response. If it’s not, you can troubleshoot based on the failing status code, such as 410 (page permanently removed) or 404 (page not found).
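If you would rather script this check than use an online tool, the idea can be sketched in a few lines of Python using only the standard library. This is a minimal sketch, not a definitive implementation: the function names `fetch_status` and `describe_status` and the `status-check-sketch` user agent are made up for illustration, and some servers reject HEAD requests, in which case a GET would be needed.

```python
from urllib.error import HTTPError
from urllib.request import Request, urlopen


def fetch_status(url, timeout=10.0):
    """Return the final HTTP status code for url (urlopen follows redirects)."""
    # HEAD asks for headers only; the user agent string is a hypothetical label.
    request = Request(url, method="HEAD",
                      headers={"User-Agent": "status-check-sketch"})
    try:
        with urlopen(request, timeout=timeout) as response:
            return response.status
    except HTTPError as error:
        # 4xx and 5xx responses are raised as exceptions; the code is what we want.
        return error.code


def describe_status(code):
    """Translate a status code into a short troubleshooting hint."""
    if 200 <= code < 300:
        return "success"
    if 300 <= code < 400:
        return "redirect"
    if code == 404:
        return "page not found"
    if code == 410:
        return "page permanently removed"
    if 400 <= code < 500:
        return "client error"
    if 500 <= code < 600:
        return "server error"
    return "unknown"
```

Calling `describe_status(fetch_status("https://example.com/"))` for each of your key pages (with your own URLs in place of the example domain) gives a quick first pass before you dig deeper.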

Another thing you need to confirm is whether bots are successfully crawling your website.

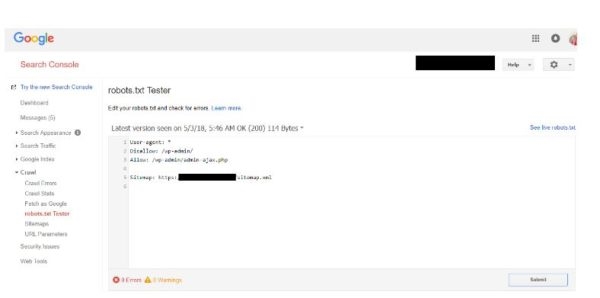

robots.txt is a text file located in your web server’s top-level directory, and its job is to instruct bots on how to interact with your site.

Within this file, you can set exclusions and inclusions to your heart’s content, for example preventing bots from crawling duplicate pages or a dev site.

Did you accidentally restrict search engine bots from crawling any of your important pages? Use Google’s free robots testing tool to double-check the robots.txt file. If you notice anything wrong, simply upload a more permissive file to the server.
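As a rough local check, Python’s standard `urllib.robotparser` module can tell you which URLs a given robots.txt would block. The sketch below makes some assumptions: the helper name `blocked_urls`, the sample rules, and the example.com URLs are all hypothetical, and Python’s parser does not replicate every detail of how Googlebot interprets rules (wildcards, for example), so treat the result as a hint rather than a verdict.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt content disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt that blocks a dev area and, by mistake, the blog.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /dev/
Disallow: /blog/
"""

SAMPLE_URLS = [
    "https://example.com/",
    "https://example.com/blog/seo-tips",
    "https://example.com/dev/index.html",
]

print(blocked_urls(SAMPLE_ROBOTS, SAMPLE_URLS))
```

Here the blog URL shows up as blocked alongside the dev page, which is exactly the kind of accidental restriction worth catching.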

2. Diagnose a Google Penalty

A Google penalty usually results in a big drop in rankings, and it often happens overnight. For instance, if your website drops by more than 10-20 positions on many keywords, this could be a penalty.

Penalties are either manual or algorithmic. The major difference is that manual penalties are applied by a Google employee, while algorithmic penalties are automatic and usually roll out with Google updates.

Because Google often makes updates without announcing them, you should always monitor for ranking changes.

It is a strong sign that Google has penalized you if the drop is harsh and instant while your website keeps ranking on other search engines such as Yahoo or Bing.
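To make the "10-20 positions on many keywords" rule of thumb concrete, here is a small sketch that compares two ranking snapshots and flags the keywords that fell sharply. The function name `flag_rank_drops`, the 10-position threshold, and the sample keywords and positions are illustrative assumptions, not data from a real site.

```python
def flag_rank_drops(before, after, min_drop=10):
    """Return {keyword: positions_lost} for keywords that fell at least min_drop places."""
    drops = {}
    for keyword, old_position in before.items():
        new_position = after.get(keyword)
        if new_position is None:
            continue  # keyword missing from the new snapshot; review it separately
        lost = new_position - old_position  # a higher position number is a worse ranking
        if lost >= min_drop:
            drops[keyword] = lost
    return drops


# Hypothetical snapshots: keyword -> position in the SERPs.
before = {"seo audit": 3, "traffic drop": 5, "link building": 8}
after = {"seo audit": 18, "traffic drop": 6, "link building": 31}

print(flag_rank_drops(before, after))
```

If most of your tracked keywords appear in the result at once, a penalty or algorithm update is a more likely explanation than a single competitor moving ahead.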

So, how do you identify and recover from a Google Penalty?

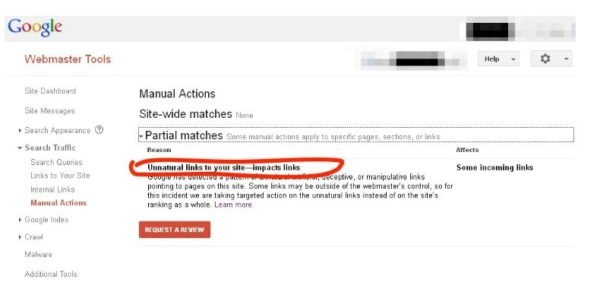

The first step to identifying what happened is to check your Google Webmaster Tools account (the service now called Google Search Console).

This is where you’ll usually have notifications from Google concerning manual actions which they’ve taken against your website.

First, check whether there’s any notification in the “Site Messages” menu. There, you’ll be notified if Googlebot detected any issues.

Next, check the Manual Actions section of Google Webmaster Tools, because that is where you’ll be notified of any Google penalties applied to your website.

If you find one, accept the decision with a bold heart and move on to solving the problem.

Figuring out exactly what affected your website is the first step you have to take. If it is an on-page issue, you’ll have to reexamine your content and linking approach, and also check for duplicate content.

And if it is an off-page issue, you’ll have to identify and get rid of the links that were considered unnatural.

For any remaining unnatural backlinks that still point to your website and couldn’t be removed, you need to disavow them.

To recover from manual penalties (actions), you need to submit a reconsideration request. But know that it might take a while until you regain your ranking position in the SERPs.

3. Examine Google Search Console

As complex as Google’s algorithms can be, Google is quite clear about what it wants from a website. You need to adhere closely to its guidelines and implement its feedback for better search performance.

Google Search Console is a free service offered by Google which will help you to track, monitor, optimize, and maintain your website’s visibility in search engine results pages.

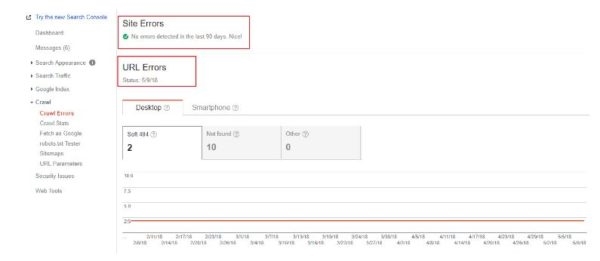

Check Google Search Console to see whether any crawl errors are interfering with the visibility or indexing of your website. In particular, look for:

- DNS errors

- Server errors

- URL errors

Go to Crawl > Crawl Errors and address the problems one at a time. Once each is resolved, mark it as fixed.

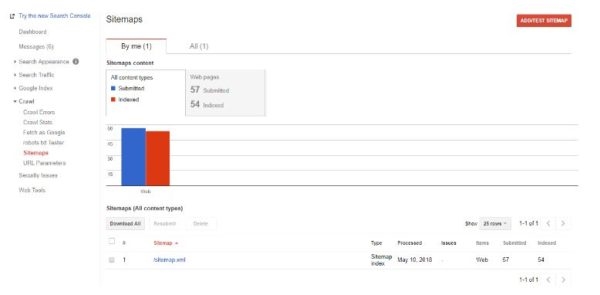

Moreover, via Google Search Console, you can submit an XML sitemap which charts your website’s structure. Once it has been processed, check whether any discrepancy exists between the number of submitted URLs and the number of URLs Google indexed.

If far fewer URLs are indexed than were submitted, then vital pages are possibly being blocked from search bots. The next step is to crawl your website with a tool like Screaming Frog, which can help unearth the issue.

Finally, in your Search Console Preferences, be sure to check “Enable email notifications.” This lets you get quick alerts on any big problems, but you should still monitor Google Search Console regularly.
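The submitted-versus-indexed comparison can also be sketched in code. The example below parses a sitemap with Python’s standard `xml.etree.ElementTree` and lists the URLs that are absent from a list of indexed URLs. It assumes you have obtained that list some other way (for example, an export from a crawling tool); the function names and the sample sitemap are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Extract every <loc> URL from a sitemap document."""
    root = ET.fromstring(sitemap_xml)
    return [loc.text.strip()
            for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
            if loc.text]


def missing_from_index(sitemap_xml, indexed_urls):
    """Return submitted URLs that do not appear in the indexed list."""
    indexed = set(indexed_urls)
    return [url for url in sitemap_urls(sitemap_xml) if url not in indexed]


# Hypothetical sitemap and indexed-URL list for illustration.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/pricing</loc></url>
  <url><loc>https://example.com/blog/seo-tips</loc></url>
</urlset>"""

INDEXED = ["https://example.com/", "https://example.com/pricing"]

print(missing_from_index(SAMPLE_SITEMAP, INDEXED))
```

The URLs this returns are the ones to inspect first for blocking rules or crawl errors.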

4. Check if You’re Indexing Everything Without Curtailing Your Content

Indexing every single page of your website without pruning your content is a reliable way to cause a drop in your organic traffic.

Google continuously works on improving its search algorithm and detecting higher-quality content. That is the reason it introduced the Google Panda 4.0 update years back.

What does this update really aim at?

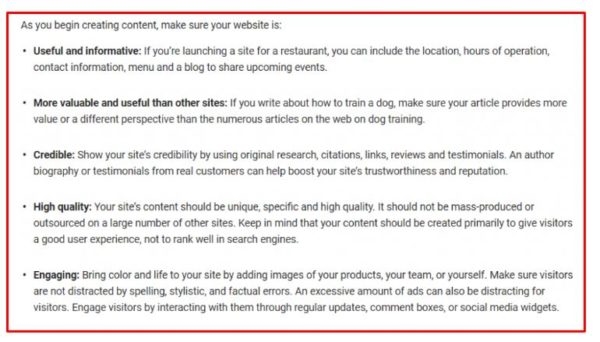

Simple! It penalizes low-quality content and rewards high-quality content, based on Google’s idea of what is valuable. The accompanying screenshot shows the kind of content you should avoid if you are truly determined not to make a mess of your organic traffic.

Every website on the web has its evergreen and top-notch content, which generates organic traffic, and some other pages that are complete deadwood and useless.

So if you don’t want your organic traffic to drop, you should keep only the pages you’re interested in ranking in the Google index.

Otherwise, you may end up dragging down the traffic of your whole website. Pages filled with low-quality or obsolete content aren’t interesting or useful to your visitors. Hence, they should be pruned for the sake of your website’s health.

Additionally, the performance of the entire website may be affected by low-quality content. Even when the site itself obeys the rules, mediocre indexed content may wreck the search traffic of the entire site.

Therefore, if you don’t want your organic traffic to drop dramatically because of unpruned, over-indexed content, below are the steps you need to take to prune your content successfully.

NOTE: Before proceeding, we want to make it clear that removing indexed pages from the Google index is a hard decision and, if done incorrectly, things may go wrong.

But at the end of the day, you need to keep the Google index fresh with useful, meaningful pages that deserve to rank and that help your end users.

Step #1: Identify the Google Indexed Pages of Your Website

There are numerous methods of identifying your indexed content; select the one that is most suitable for your site.

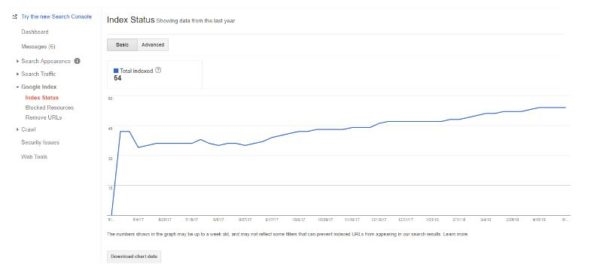

One good way of doing this is, again, via your Google Search Console account, as in the screenshot below. In the Index Status report, you’ll find the number of your indexed pages together with a graph of how it has changed over time.

To get all the internal links on your website, navigate to the Search Traffic > Internal Links section of your Search Console account. This gives you a complete list of the pages on your website (whether indexed or not) and the number of links pointing to each. It can be a great starting point for figuring out which pages should be subjected to content pruning.

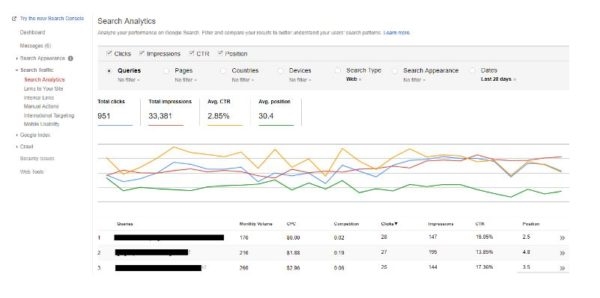

Step #2: Identify Your Website’s Underperforming and Low Traffic Pages

Yes, all the pages on your website matter, but some matter less than others. Hence, in the pruning process, it’s essential to gather each page’s number of clicks, impressions, click-through rate (CTR), and average position.
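Once you have those four numbers per page, shortlisting pruning candidates is simple arithmetic: CTR is clicks divided by impressions, and a page is a candidate when it is weak on every metric. The sketch below shows one way to do it. The `PageStats` class, the `underperforming` function, the thresholds, and the sample figures are assumptions chosen for illustration; pick cutoffs that make sense for your own site.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    url: str
    clicks: int
    impressions: int
    position: float  # average position in the SERPs (lower is better)

    @property
    def ctr(self):
        """Click-through rate: clicks / impressions (0 when there are no impressions)."""
        return self.clicks / self.impressions if self.impressions else 0.0


def underperforming(pages, max_clicks=5, max_ctr=0.01, min_position=30.0):
    """Return URLs that are weak on clicks, CTR, and average position at once."""
    return [page.url for page in pages
            if page.clicks <= max_clicks
            and page.ctr <= max_ctr
            and page.position >= min_position]


# Hypothetical per-page figures.
pages = [
    PageStats("/guide", clicks=420, impressions=9000, position=4.2),
    PageStats("/old-news", clicks=2, impressions=1500, position=48.0),
    PageStats("/tag/misc", clicks=0, impressions=0, position=75.0),
]

print(underperforming(pages))
```

Requiring all three conditions together keeps the shortlist conservative, which matters given how hard it is to undo a bad pruning decision.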

Step #3: No-Index the Underperforming Pages

Once you’ve identified the pages pulling you down, painful as it may sometimes be, you should start to no-index them.

As difficult and time-consuming as it was to write them and make them discoverable by Google, removing them from the search engine’s radar is a little less complex.

There are two ways to go about it:

- Disallow the pages in the robots.txt file to tell Google not to crawl that part of your website. Bear in mind that you have to do it right, as you do not want to further hurt your organic rankings. Note also that a disallowed URL can still appear in the index if other pages link to it.

- Another way is to apply the meta no-index tag to the less-performing pages. Add the <META NAME="ROBOTS" CONTENT="NOINDEX, FOLLOW"> tag. That tells Google not to index those specific pages, but still to follow the links on them and crawl the pages they point to.
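After adding the tag, it is worth verifying that it is really present in the HTML each page serves. The following sketch uses Python’s standard `html.parser` to look for a robots meta tag containing `noindex`. The class name `RobotsMetaParser` and the function `has_noindex` are hypothetical, and the check only covers the meta tag, not an `X-Robots-Tag` HTTP header.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names, so <META NAME=...> matches too.
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() != "robots":
            return
        for directive in attributes.get("content", "").split(","):
            directive = directive.strip().lower()
            if directive:
                self.directives.add(directive)


def has_noindex(html):
    """Return True if the page's robots meta tag includes noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives
```

Feeding it the HTML of a pruned page should return `True`, and feeding it one of your important pages should return `False`; the reverse in either case signals a mistake worth fixing quickly.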

5. You Got Outranked by a Competitor

This usually leads to a small drop in ranking. When this is the case, you’ll see the competitor’s website that outranked you move above yours, while the other similar websites usually keep the same positions as before.

Now, if you notice this kind of drop, the first thing to do is beef up your link building campaigns and create more quality content that beats what your competitor has on its website.

Also, constantly tracking your main competitors and learning their link building strategies can help you anticipate their next moves and better position you to stay in the race.

Additionally, it’s important to keep a close eye on their content strategies as well. If they are continuously growing, it means they’re certainly doing something right.

Understand and modify your strategies accordingly. Also, try to be insanely creative and diverse with your content marketing tactics if you want to differentiate yourself from the fierce competition.

Conclusion

The moment you notice a huge drop in traffic in your Google Analytics, don’t worry. Simply follow these steps to understand what might have caused it.

If you diligently follow all 5 steps we shared here, you should be able to reverse the situation and take back your ranking position in the SERPs.

Even though you might be trying to follow the Google Webmaster Guidelines, you need to understand that Google’s algorithm is subject to change, and updates occur often.

And one of the areas that can be negatively affected by these changes is your website’s organic traffic.

Traffic drops are no fun, so whenever they occur, inspect the problem further to decide whether it’s time to worry or just a false alarm.

Digital & Social Articles on Business 2 Community
