We explain the data-driven processes we use to find testing opportunities, prioritise them in order of importance and start each CRO campaign with the greatest chance of success.

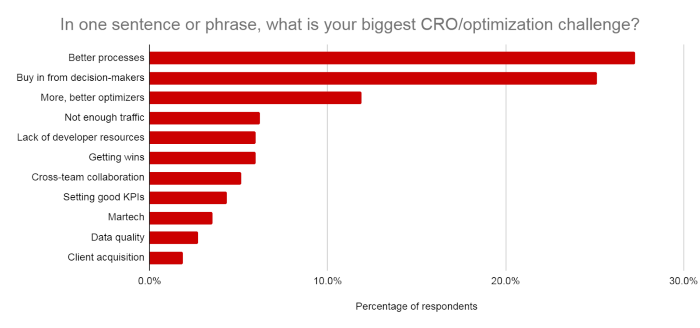

According to The 2019 State of Conversion Optimization Report by CXL, the biggest challenges marketers face with conversion rate optimisation (CRO) are improving their processes and convincing decision-makers to buy into conversion optimisation.

The same report reveals that marketers are “struggling to determine what they should test and the order to test it,” resulting in ineffective testing processes.

What we’re looking at

We’ve broken this guide down into five key sections, showing how we avoid the same problems highlighted in the CXL report above:

- Performance forecasting: How we use data to find opportunities, set test parameters and predict outcomes.

- Aims and goals: Setting specific goals for the next 6-12 months, based on our forecasting.

- Setting up the first tests: How we choose what to test and set up the first round of experiments.

- Learning from the first tests: Using the outcomes of early tests to inform future experiments.

- Reviewing month one: Analysing the first month, adapting the strategy where necessary and reporting the first round of findings to clients.

Conversion optimisation is a data-driven strategy by nature and your outcomes are only ever as good as the data you feed into your campaigns. This is the key theme you’ll see repeated through every step of the optimisation process we explore in this guide.

1. Performance forecasting

Every successful CRO campaign is driven by data, starting from the early planning stage. As with any strategy, you need to achieve an ROI with your conversion optimisation efforts. Running accurate tests can also require more time and resource investment than many marketers appreciate.

To achieve this ROI, you need to choose the right tests, run them correctly and prove the validity of their outcome.

Diving into the data

The first stage of any CRO campaign is to dig into your data so you can pinpoint opportunities and calculate their outcomes. This arms you with the insights to choose tests that will have the strongest impact, solve your biggest conversion problems and give your tests the highest chance of success.

At Vertical Leap, this starts with our CRO team looking at performance data related to your marketing objectives. At this point, we’re looking at a broad range of data attributed to every defined marketing goal, including:

- Purchases

- Successful checkouts

- Items added to basket

- Products viewed

- Contact form completions

- Phone calls

- Email signups

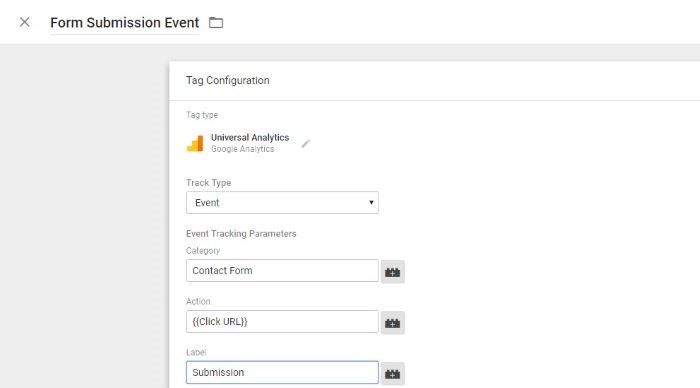

All of the actions above can be measured through URL tracking but you can dive even deeper into user actions by using Event Measurement in Google Analytics (formerly event tracking) or the equivalent technology in your chosen analytics platform.

With events, you can track click-based actions to measure detailed interactions like when a user clicks a CTA button, presses the play button on your video media player, starts filling out a form, enlarges a product video and any other interactions that allow us to measure engagement with your pages and goal completions.

By deep-diving into your data, we can pinpoint opportunities for improvement, diagnose performance issues with accuracy and prioritise tests based on their importance to your goals.

Forecasting outcomes and prioritising tests

With the necessary data at our fingertips, we can start to identify the best CRO opportunities based on goals, their projected impact and ROI. By using historical data for each individual client and cross-referencing this with data from other experiments, we can forecast the outcome of tests and prioritise them based on their expected value.

This allows us to set benchmarks for tests with greater accuracy before we invest resources into running them.

For example, let’s say we identify a landing page with below-average click-through rates. We can compare this data with similar CRO campaigns that are performing at a higher level and run this against data from other clients to build a more reliable forecast. This may tell us that increasing click-through rates by X will lead to product purchases on the next page increasing by Y or that reducing bounce rates by A will increase lead generation by B.

Data-driven forecasting allows us to set specific, realistic targets for individual tests and start with the ones that will have the greatest impact on your marketing goals.
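As a rough illustration of the arithmetic behind a forecast like the one described above, the sketch below projects the extra purchases from a click-through uplift. The function name `forecast_extra_purchases` and every figure are hypothetical. It also assumes the purchase rate on the next page stays constant as click-through rises, which real data may not support.

```python
def forecast_extra_purchases(sessions, current_ctr, target_ctr, purchase_rate):
    """Project additional purchases if click-through rises from current_ctr to target_ctr.

    Assumption (hypothetical): the purchase rate on the next page is unchanged
    by the increase in click-throughs.
    """
    current_purchases = sessions * current_ctr * purchase_rate
    target_purchases = sessions * target_ctr * purchase_rate
    return target_purchases - current_purchases


# Hypothetical figures: 20,000 monthly sessions, click-through rising from
# 4% to 5%, and 10% of click-throughs ending in a purchase.
extra = forecast_extra_purchases(20_000, 0.04, 0.05, 0.10)
print(f"Forecast extra purchases per month: {extra:.0f}")
```

In practice, the constant-rate assumption is exactly what historical data from comparable campaigns would be used to check or replace.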

2. Setting aims and goals

By forecasting the outcome of experiments, we can set achievable short-term and long-term goals for CRO campaigns. Aside from projecting the results of individual tests, we can map out the objectives of your campaigns for the next 6-12 months and set benchmarks for our team at every level.

This means every member of our CRO team knows what they’re aiming for. It also allows us to show clients what kind of results they can expect to achieve before we start running tests.

The goals set for your CRO strategy are the basis of every test and, as we’ll explain in more detail later, these should never change. There are plenty of reasons to adapt your testing methods as you compile more data and understand your users in greater detail but your CRO strategy should always remain laser-focused on achieving your marketing objectives.

3. Setting up the first tests

So far, we’ve looked at defining goals for your CRO strategy and using performance data to forecast the results of individual tests. This provides all the information we need to identify testing opportunities and choose which experiments to start with.

There are three key factors that we have to consider when prioritising experiments:

- Impact: The percentage by which the test is forecast to increase conversions.

- Conversion value: The importance of the conversion goal for each test (eg: purchases, cart additions, email signups, enquiries, page visits).

- Probability: The likelihood of a test returning the expected outcome.

By prioritising campaigns based on these three characteristics, we can start with the tests expected to improve performance by the highest margin and generate a return on your CRO investment as soon as possible.
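One simple way to combine the three factors above is to multiply them into a single score and sort by it. The sketch below is only an illustration of that idea, not a description of our scoring model: the `Experiment` class, the multiplication rule and all of the figures are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Experiment:
    name: str
    impact: float            # forecast relative lift in conversions, e.g. 0.15 = +15%
    conversion_value: float  # relative value of one conversion for this goal
    probability: float       # estimated chance of the test returning the expected outcome

    def score(self) -> float:
        # Hypothetical rule: weight the forecast lift by value and likelihood.
        return self.impact * self.conversion_value * self.probability


def prioritise(experiments):
    """Return experiments ordered from highest to lowest score."""
    return sorted(experiments, key=lambda e: e.score(), reverse=True)


# Hypothetical test ideas and figures.
ideas = [
    Experiment("Checkout form length", impact=0.08, conversion_value=60, probability=0.5),
    Experiment("Landing page message", impact=0.15, conversion_value=60, probability=0.4),
    Experiment("Newsletter signup CTA", impact=0.20, conversion_value=2, probability=0.6),
]

for experiment in prioritise(ideas):
    print(f"{experiment.name}: {experiment.score():.2f}")
```

Note how the newsletter test has the highest forecast lift but still ranks last, because its conversion goal is worth far less than a purchase.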

Choosing high-impact test elements

There are plenty of online case studies describing tests where changing one button colour or using a different font lifted conversions by an unbelievable 500+%. Sadly, this isn’t how conversion optimisation works in the real world and case studies like these give marketers the wrong impression about CRO.

When was the last time you bought a product because you liked the colour of a CTA button?

Yes, a lack of contrast can cause users to skip right past your CTA without seeing it but that’s a different, much bigger issue. If you want to influence user behaviour in your CRO campaigns, you need to focus on the elements that genuinely affect purchase decisions:

- Page content: This is your entire message and, by far, the most influential element on the page – and if it isn’t, it should be.

- Loading times: It doesn’t matter how great your message is if users never get to see it.

- Layout: Layout dictates the visual structure of your message and how users digest it.

- Page experience: UX factors getting in the way of delivering your message and converting users.

- CTAs: Rightfully considered one of the most important page elements and a CRO priority.

- CTA placement: Even the best calls to action can underperform if they’re located in the wrong part of your page.

- Forms: Unwanted friction can prevent users from converting at the final hurdle, even after they’ve bought into your message – so make sure your forms aren’t getting in the way.

- Visual content: Images, video and a range of visual cues can enhance your message, engage users more effectively and even guide their eye to the most important parts of your page.

- Visibility: Ensuring the most important parts of your page stand out, using contrast, whitespace, colours and other visual techniques.

If you want to make a quick impact with your conversion optimisation strategy, start by measuring the loading times of your pages. If it’s taking longer than 2.5 seconds for the largest element on your page to load (the Largest Contentful Paint metric), optimising page speed should be your first priority.

Once your loading times are in check, it’s time to look at the most important element on your pages: content.

If your message doesn’t inspire users to take action, no design tricks are going to change this. Test different angles, selling points, pain points, benefits and key concepts in your message to find out what really gets a reaction from your target audience.

Run multiple versions of the same page with different messages against each other to determine which one is most effective. Next, you can test hero section variations to find the best way to introduce your offer with impact and encourage users to start scrolling down the page.

Also, pay attention to the content in your CTAs and optimise them to hammer home the key benefit in your message.

Once your content is optimised, you can shift your attention to the more visual elements of your page and CTA placement is always a good starting point. Use heatmaps to check that users are seeing your calls to action and experiment with different positions. Keep in mind that people don’t simply click buttons because they’re there; the message they see before arriving at CTAs determines whether they take action so don’t be afraid to place calls to action further down the page – just make sure your message is strong enough to keep them scrolling and inspire action.

Testing the right audience

The biggest challenge in conversion optimisation is getting accurate results from your tests. There are several variables that you need to try and mitigate here, one of which is the diversity of users who visit any one of your pages.

If you’re testing a page that generates 100,000+ sessions every week, how many of these visitors truly have the potential to convert?

You don’t want the results of your CRO tests to be skewed by users who were never going to complete your goals in the first place. You want to focus on the core target audiences and buyer personas you’ve identified throughout your marketing strategy because these are the people you want to influence more effectively.

Let’s say your business only operates in specific areas or you’re promoting a mobile app and you know that iOS users tend to spend more money after downloading. In that case, it doesn’t make much sense to test variations on people from areas where you don’t operate. Likewise, test data from Android users doesn’t deserve the same weighting as data from iOS users.

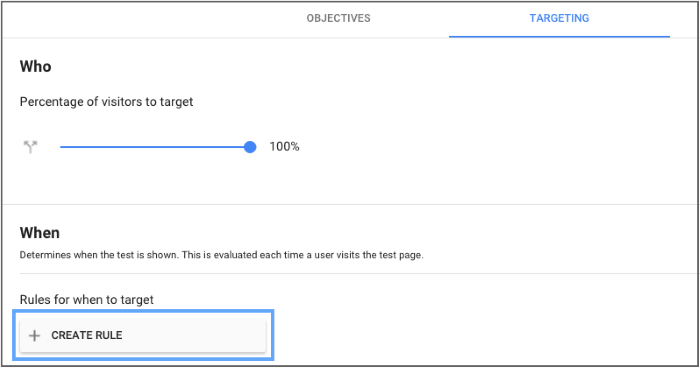

To ensure you get reliable test data from the audiences that truly matter to your marketing objectives, you need to segment test traffic. For example, in Google Optimize 360, you can target visitors based on their geographic location or the device they’re using for the two scenarios listed above.

Another useful example of test targeting is to only show variations to new users so that returning visitors, who are already familiar with the original page, don’t skew your results.

For our clients, we source test subjects that match their target audiences based on age group, device category, gender, location, interests and more. So, if you want to target key decision-makers at specific types of B2B businesses, we can ensure your tests compile data from the people that really matter to your brand.
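To make the targeting logic concrete, here is a minimal sketch of filtering sessions down to a test audience by location, operating system and new-versus-returning status. It is a generic illustration rather than the configuration of any particular testing tool: the `Session` fields, the `in_test_audience` function and the sample data are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Session:
    country: str       # country code, e.g. "GB"
    device_os: str     # e.g. "iOS" or "Android"
    is_new_user: bool


def in_test_audience(session, countries, operating_systems, new_users_only=True):
    """Return True if the session belongs to the audience we want in the test."""
    if session.country not in countries:
        return False
    if session.device_os not in operating_systems:
        return False
    if new_users_only and not session.is_new_user:
        return False
    return True


# Hypothetical sessions.
sessions = [
    Session("GB", "iOS", True),
    Session("GB", "Android", True),
    Session("US", "iOS", True),
    Session("GB", "iOS", False),
]

# Only new iOS users in the UK enter the experiment.
eligible = [s for s in sessions if in_test_audience(s, {"GB"}, {"iOS"})]
print(f"{len(eligible)} of {len(sessions)} sessions qualify for the test")
```

Tighter targeting gives cleaner data but fewer qualifying sessions, so tests on narrow segments generally need to run for longer.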

Fulfilling your CRO objectives

Everything we’ve discussed in this article, up until now, has been geared towards achieving the desired results from your tests. By forecasting outcomes, you have data-driven predictions to calculate the value of experiments and the ability to prioritise them based on their importance and expected outcome.

Earlier, we also talked about defining the goals for each test. Now you need to manage each experiment to give it the best chance of hitting your targets.

The most important thing, at this stage, is to ensure that the data you collect from each test is reliable. In the previous section, we talked about targeting experiments to focus on your most important audiences but how do you account for external variations like seasonal trends, economic fluctuations or even the weather?

All it takes is one bad summer to skew the results of CRO tests for a summer line of clothing.

The only way to guarantee the quality of your data and truly prove the results of your tests is to collect enough of it. The basic principle here is to run your tests for long enough to achieve statistical significance and minimise the impact of variables and anomalies.
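One common way to check for statistical significance in an A/B test is a two-proportion z-test on the conversion rates of the control and the variant. The sketch below uses only the Python standard library. The conversion figures are hypothetical, the 0.05 threshold is a conventional choice rather than a rule, and testing platforms may use different statistical methods.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rate, B vs A."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis of no difference.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("Cannot test: pooled conversion rate is 0 or 1")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: control converts 200 of 10,000 sessions,
# the variant converts 250 of 10,000.
z, p_value = two_proportion_z_test(200, 10_000, 250, 10_000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
print("Significant at 0.05" if p_value < 0.05 else "Not yet significant: keep collecting data")
```

A low p-value only says the difference is unlikely to be random noise. It doesn’t rule out external factors such as seasonality, which is why test duration matters as well as sample size.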

Another way of validating outcomes is to compare them to other, similar experiments. While no two tests are ever the same, comparisons can help identify data flaws and this is no different to how Google compares outcomes for strategies like Smart campaigns.

You may find that early tests don’t produce the result you want but this doesn’t mean you can’t achieve your CRO objectives.

Even “negative” outcomes help us define next steps and your testing roadmap may change as your strategy matures. For example, we may detect anomalies or unexpected positives that tell us to move in a slightly different direction.

Your CRO objectives never change but the testing procedures should constantly improve to help you achieve them more effectively.

4. What we hope to learn from the first tests

If user behaviour were predictable, we wouldn’t need to run CRO campaigns in the first place. As you’ll find with any long-term testing strategy, humans always retain the ability to surprise and this is why it’s so important to ensure the outcomes of your tests are accurate.

In many cases, you’ll find that users don’t initially respond well to change (especially returning visitors) and, in others, you’ll see an overly positive response in the early phase of testing, which decreases over time.

Be careful not to misinterpret the early outcomes of tests and mistake them for genuine results when they are actually false positives or false negatives.
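A simple way to spot an early response that is wearing off is to track the variant’s lift week by week rather than only in aggregate. The sketch below assumes hypothetical weekly conversion rates. The helper names and the rule that a lift shrinking every week signals a fading effect are illustrative simplifications.

```python
def weekly_lift(control_rates, variant_rates):
    """Relative lift of the variant over the control for each week."""
    return [(v - c) / c for c, v in zip(control_rates, variant_rates)]


def lift_is_fading(lifts):
    """True if the lift shrinks every week, which may indicate a novelty effect."""
    if len(lifts) < 2:
        return False  # not enough data to judge a trend
    return all(later < earlier for earlier, later in zip(lifts, lifts[1:]))


# Hypothetical weekly conversion rates over a four-week test.
control = [0.020, 0.020, 0.020, 0.020]
variant = [0.026, 0.024, 0.022, 0.021]

lifts = weekly_lift(control, variant)
print([f"{lift:+.0%}" for lift in lifts])
print("Lift is fading" if lift_is_fading(lifts) else "No consistent decline")
```

A fading lift doesn’t prove the variant has failed, but it is a strong reason to keep the test running before drawing conclusions.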

We don’t expect definitive answers from the first round of testing but we do hope to learn lessons that inform the next round of experiments. If the first batch of tests appears to prove our hypotheses correct, we then need to prove that this outcome is reliable with further testing.

Likewise, if early outcomes appear to contradict our hypotheses, we have to determine why. Is our hypothesis simply wrong, are the early results misleading or are there flaws in the testing method?

As long as we can prove the outcomes of tests are accurate, disproven hypotheses are still positive outcomes because they prevent wrong assumptions from causing mistakes in future campaigns.

If your testing methods are robust, every outcome is a lesson learned that will improve the performance of your overall marketing efforts. Even the outcomes of early experiments allow us to analyse the testing process to identify potential flaws, identify new experiments to try and develop a more reliable testing strategy.

5. Review the first month of testing

Once your tests have been running for a month, we always compile a detailed report to analyse progress, attribute results and define any changes being applied to your testing strategy.

As an agency, this report allows us to review the first month of testing, take insights from the results and apply these lessons to future experiments.

However, this review is also crucial for you, the client, so that you can see exactly what is happening and the impact your tests are having. Not only does this establish a transparent working relationship, but it also provides a first-person view of the opportunities, outcomes and improvements being discovered during the first month of testing.

Even though a month isn’t long enough to take definitive answers from any test, the report shows that your CRO strategy is already generating valuable data and insights that will improve marketing and business decisions.

Finding the best CRO opportunities and achieving your testing goals is entirely dependent on the quality of your data. By compiling the right performance data related to your campaign goals, you can see where performance issues are occurring and diagnose the cause with greater accuracy.

You can then forecast the outcome of test ideas to understand where the biggest opportunities are.

Once you know what to test, your forecast data provides the benchmarks you need to manage campaigns, measure their success and adapt your testing processes where necessary. All that remains is to confirm that your outcomes are reliable, demonstrate these successes to the decision-makers and apply these lessons to future marketing actions.
