Perhaps you’ve seen the movie “A Christmas Story”?

If you haven’t seen this holiday comedy (which I highly recommend), let me at least tell you that it contains a subplot in which the main character jumps through hoops and shows great patience, only to end up annoyed and disappointed thanks to the realities of marketing. Sound familiar? You’re not alone. Here’s a tale of our own disappointment, and how we turned it around.

We won’t name the client, but you should know that they use their digital marketing for B2C lead generation. Our engagement with them spanned a number of areas, including search engine marketing, and from the very beginning we stressed the importance of testing and optimization. After all, what’s the point of driving traffic if the visitors don’t convert into leads? Even so, it took six months to convince them, set them up with the proper tool (in this case, Optimizely), and get them ready to run their first test.

Then began the hoop jumping, and the test of patience.

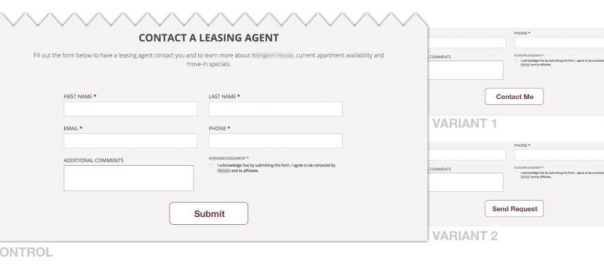

As you likely know, it is common practice in testing to start simple, especially when the client is new to it. So that’s what we did, initially suggesting a test of the copy on their primary “Submit” button, found on the lead form of their paid search campaign landing pages. We figured this was a good choice: every element of the conversion funnel affects the experience, positively or negatively, so why not start with the last barrier to conversion, the Submit button? However, despite our lengthy preparation and what we thought was successful onboarding, they pushed back: “What if it doesn’t work? We can’t afford to lose even one lead.”

Their hesitation left us feeling much like the young hero of A Christmas Story when the secret message revealed by his hard-earned decoder ring (an Ovaltine marketing gimmick) turned out to be a gigantic letdown. Their answer showed not only that they didn’t want to run this test, but that they pretty much didn’t want to test at all! To benefit from testing, there has to be both risk and trust.

Risk is a precondition of ROI. Some test variations will succeed while others fail; failure is part and parcel of tests that deliver real business impact. These activities also require trust that, whatever the result, the outcomes will inform next steps and pay off in the long term.

So what happened next? Here’s how we turned that marketing frown upside down and got their testing program launched and thriving.

1) Keep it simple and true, no matter what.

Obviously, we had to regroup. We convinced the client to run some other tests around newsletter signups, and that was nice, but testing is supposed to help you solve business problems and achieve business goals. Their specific challenge centered on lead generation, with the goals of improving product utilization and growing revenue. Newsletter signups were not going to achieve those goals on their own.

We could have tried to “wow” them with a large, complex test, but that would have taken time, and what we really needed was momentum. So we kept it simple, stuck with our original Submit button test, and found someone willing to sign off on it.

2) Make the test matter.

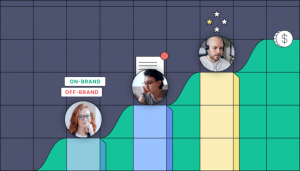

The test results were great! We ran the baseline, “Submit,” against two variations, “Send Your Request” and “Contact Me.” The winner, “Send Your Request,” improved the conversion rate by 25.6%. Getting that much lift from a change that costs essentially nothing to implement is awesome! But again, we needed momentum, and presenting marketers with a percentage increase in conversion rate was not going to accomplish that. The number had to mean something to them, so we decided to paint a picture.

Based on the traffic their landing pages received during the test period, we calculated how many leads they would have received using “Send Your Request” in place of “Submit.” The result: this simple copy change would have produced an additional 120 leads over the same period, or approximately 500 additional leads per year! At the time, we did not know the estimated value per lead (otherwise we would have calculated the potential revenue of these leads as part of our pitch). However, the client’s own quick math showed that a simple test can prove its worth many times over.
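The projection above is simple arithmetic. Here is a minimal Python sketch of it, assuming the 25.6% is a relative improvement over the control’s conversion rate and that traffic is steady enough to scale a test period up to a full year. The function names and every input value are hypothetical; the article does not report the client’s traffic, baseline conversion rate, or test duration.

```python
def incremental_leads(visitors: int, baseline_rate: float, relative_lift: float) -> float:
    """Extra leads the winning variant would have produced from the same traffic.

    baseline_rate: control ("Submit") conversion rate, e.g. 0.05 for 5%.
    relative_lift: winner's relative improvement, e.g. 0.256 for +25.6%.
    """
    baseline_leads = visitors * baseline_rate
    return baseline_leads * relative_lift


def annualize(count_in_period: float, period_days: int) -> float:
    """Scale a count observed over period_days to a 365-day year (assumes steady traffic)."""
    return count_in_period * 365 / period_days


# Hypothetical inputs; the article does not report these figures.
extra = incremental_leads(visitors=10_000, baseline_rate=0.05, relative_lift=0.256)
per_year = annualize(extra, period_days=90)
print(f"{extra:.0f} extra leads in the test period, about {per_year:.0f} per year")
```

With these made-up inputs, a 25.6% lift on a 5% baseline across 10,000 visitors works out to 128 extra leads. Multiplying the annual figure by a value per lead, had one been known, would turn the same count into a revenue estimate.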

3) Maintain momentum.

The test was straightforward, and the results were easy to understand and share. So how do we make that success repeatable? We turned to the data for help. Digging deeper into the test results, we examined how different segments reacted to the variations, and one stood out: mobile visitors (in Optimizely, the default mobile visitor segment covers anyone using either a tablet or a smartphone). For this segment, “Contact Me” was actually outperforming “Send Your Request,” but there weren’t quite enough mobile visitors to have statistical confidence in that result. So we built another test.
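Lacking statistical confidence means the observed gap between the two labels could plausibly be noise. As an illustration only, the sketch below applies the textbook fixed-horizon two-proportion z-test using just Python’s standard library. Optimizely reports significance with its own statistics engine, so this is not how the tool itself calculates confidence, and the visitor and conversion counts are invented.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two conversion rates.

    conv_*: number of conversions; n_*: number of visitors.
    Returns (z, p_value), using the pooled rate under the null hypothesis.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Invented mobile-only counts: A = "Send Your Request", B = "Contact Me".
small = two_proportion_z_test(conv_a=40, n_a=400, conv_b=55, n_b=400)
large = two_proportion_z_test(conv_a=400, n_a=4_000, conv_b=550, n_b=4_000)
print(f"small sample: z={small[0]:.2f}, p={small[1]:.3f}")
print(f"large sample: z={large[0]:.2f}, p={large[1]:.3g}")
```

Both samples have identical conversion rates, yet the small one yields a p-value above the common 0.05 threshold while the one ten times larger falls well below it. That is why a promising but thin segment result calls for a follow-up test rather than an immediate rollout.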

To make sure they would sign off on this new test, we had to make it really count. We told them that if our assumption was correct and the trend held, we could use Optimizely to target different segments with different button copy. We did the math for them, explaining that while “Send Your Request” alone would yield almost 500 additional leads per year, serving each segment its best-performing button copy could add another 100 leads on top of that. As any client knows, the more leads the better, so this wasn’t hard to sell when positioned this way. The client was convinced of testing’s value, and we were finally off and running.
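The segmentation pitch compares one winning label shown to everyone against the best label for each segment. A minimal sketch of that comparison, assuming per-segment conversion rates and annual traffic are known; the `Segment` class, the function names, and all numbers are hypothetical and do not come from the client’s data.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """A hypothetical audience segment with observed rates per button label."""
    name: str
    visitors_per_year: int
    rates: dict[str, float]  # button label -> conversion rate


def leads_single_variant(segments: list[Segment], label: str) -> float:
    """Annual leads if every segment sees the same button label."""
    return sum(s.visitors_per_year * s.rates[label] for s in segments)


def leads_best_per_segment(segments: list[Segment]) -> float:
    """Annual leads if each segment sees its own best-performing label."""
    return sum(s.visitors_per_year * max(s.rates.values()) for s in segments)


# Invented numbers: desktop prefers "Send Your Request", mobile prefers "Contact Me".
segments = [
    Segment("desktop", 100_000, {"Send Your Request": 0.06, "Contact Me": 0.05}),
    Segment("mobile", 20_000, {"Send Your Request": 0.04, "Contact Me": 0.05}),
]

one_label = leads_single_variant(segments, "Send Your Request")
targeted = leads_best_per_segment(segments)
print(f"Extra leads from segment targeting: {targeted - one_label:.0f}")
```

In this made-up example, targeting adds 200 leads a year, all of it from the mobile segment. The gain is only real if the per-segment rates hold up, which is what a follow-up test has to establish.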

Not every test is a winner. And not every idea we planned for this client bore fruit. But the client has officially embraced the saying, “no risk, no reward.”

Spoiler alert! At the end of A Christmas Story, the main character gets what he always wanted. While testing and optimization rarely provide such storybook endings, they offer great lessons, and always an interesting story.

Business & Finance Articles on Business 2 Community(52)
