Is Privacy Dead In The Digital Age? What To Do About It: Part I

Let’s face it: Privacy is dead and rigor mortis set in a long time ago — back in the ’60s and ’70s, when computers began to store our data to target and customize direct marketing. The digital era and social media have merely brought the embalming to a greater art form.

While some are attempting to put the digital genie back in the internet bottle, the social network generation not only accepts that privacy is gone — they expect it.

The truth is that we live in a society where most people prefer to trade their personal information for the goods and services they use in their daily lives.

Part 1: Life on Blast

To quote New York millennial S.G.: “Privacy is dead in this digital age. Everyone puts their life on blast.” Don’t mistake or water down S.G.’s meaning. Every element of one’s life, good or bad, is out in front of everyone else. In this context, many of the millennial generation and those to come demand a level of personalization and socialization that makes privacy as a concept almost laughable. Woe to the brand that doesn’t already know everything there is to know about a digital millennial and hasn’t pre-tailored the experience based on that knowledge.

“What? You don’t already know my preferred music, size, and colors? Everything about me and my friends? You’re not prepared to continue the conversation we had 3 days or 3 years ago? I have to click more than once to buy because you failed to keep my data and shopping history? Sooo 20th century. Next.”

All the drama misses the two enormous elephants in the room: First, almost every aspect of our lives has been tracked, aggregated, trended, and targeted for decades with credit cards, loyalty cards, subscriptions, and more. Second, consumers must take on a much greater ownership of how they handle their personal information and how they personally manage and critically think about all the information coming at them. This challenge began well before social media, and while Facebook has a role in the solution, it is neither the cause of the problem nor the villain that some portray it to be.

Ultimately, we the users are the biggest culprits. We have allowed ourselves to become passive consumers of whatever information social media, cable TV, or any other venue throws at us. Many of us have abandoned critical thinking and active navigation in favor of reinforcing what we already think and feel through insular digestion of micro-targeted content.

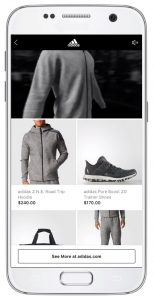

Long before Facebook, social media, or even the internet, retailers, banks, hotels, and so on were all collecting and tracking everything they could find out about you: everything you bought, when and where you bought it, and what interests you expressed, researched, or read about. Then they used analytics to figure out what your purchases and activities meant about you, what you likely wanted, and what you likely would do next. Ostensibly they did all this to do a better job of serving you. The more a company knows about you, the better it can make products and services for you, and the more it can tailor its advertising and promotions for you, making more money for itself along the way, of course.

Let’s get friendly with our emotions. When we contact a brand on the phone, Facebook, or Twitter about a problem, and they respond personally — knowing who we are, what we’ve bought from them, how we use it, the problems we’ve had in the past, and the history of the specific incident — we like that.

Most people love that! Because digital technology on the internet can track at a more refined level, it takes the process to an even more micro level. Facebook is the ultimate, so far, because 1) people use it so much every day, 2) users actually participate by liking, sharing, and talking, thus providing massively more specificity to the individual information, and 3) Facebook has done a pretty great job of organizing their service and tools so that they and their advertisers can make use of it.

A critically important factor, and a key driver of the election manipulation, is a Facebook advertising feature called dark posts. A dark post is an advertisement seen only by the people the advertiser has micro-targeted. The good aspect of the feature is that it enables an advertiser to better tailor messages to different people. The problem comes when a bad actor, such as an election manipulator, uses the same capability to send conflicting messages (or different kinds of fake news) about a candidate or issue to different people, basically misrepresenting the subject to some or all groups.

People overall don’t pay much attention to this issue or care too much about it (at least not until it’s shoved in front of them with emotional hyperbole). Whether consciously or with only a vague awareness, people have traded their information and their willingness to see ads for an internet they like at little or no cost.

The actual data breaches of the last few years, in which hackers stole private information, could prove much more damaging than what you like and don’t like on Facebook. Yet in a fairly short amount of time, all the brouhaha over them has blown over. Those breaches don’t really feel as personal, or as core to one’s day-to-day life, as Facebook interactions. But that’s just the point. People value their social media interactions enough to trade their privacy. And no amount of articles, hyperbole, or congressional hearings will change that.

How can we give people the depth and range of social media experiences they want, while also giving them more control? Once they are given more control, will they use it? And one big question: What happened with Facebook that took all this to a new level? That will be the subject of Part 2 of this column.
