Large Language Models Have A Unique Voice That Technology Can Detect

Artificial intelligence (AI) has come under fire lately, whether over OpenAI’s technology mimicking the voice of Scarlett Johansson, concerns around voice selection processes, or copyright infringement involving audio, video and text.

Alon Yamin, CEO and cofounder of Copyleaks, which provides AI governance and detection technology, says AI tools help people plagiarize content by rephrasing it so that it reads and sounds more human.

But large language models (LLMs) also have their own voice.

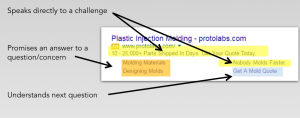

“Search engines look for original high-quality content and the sites get penalized if there’s similar content,” he says. “Even if the site is the originator of the content, the other site might have a stronger website authority. The originator of the content can still get hurt if their authority in Google’s view is weaker than the other site.”

Copyleaks detects more sophisticated types of plagiarism than simple copy-and-paste, he says, such as cases where people change words or translate the copy into another language.

When ChatGPT came out in November 2022, Copyleaks’ plagiarism-detection models were already identifying the style and unique voice of a writer, which is how the company detects originality in human-written content.

“We discovered the same models also work well to identify the unique voices of large language models,” Yamin says.

Each LLM has its own style. In other words, Copyleaks can tell which LLM or chatbot wrote the copy, or whether it was written by a human.
