Making sure generative AI doesn’t go “evil” on you requires putting a human in the loop. But there are going to be a lot of loops.

“There is no sustainable use case for evil AI.”

That was how Dr. Rob Walker, an accredited artificial intelligence expert and Pega’s VP of decisioning and analytics, summarized a roundtable discussion of rogue AI at the PegaWorld iNspire conference last week.

He had explained the difference between opaque and transparent algorithms. At one end of the AI spectrum, opaque algorithms work at high speed and high levels of accuracy. The problem is, we actually can’t explain how they do what they do. That’s enough to make them more or less useless for tasks that require accountability — making decisions on mortgage or loan applications, for example.

Transparent algorithms, on the other hand, have the virtue of explicability. They’re just less reliable. It’s like a choice, he said, between having a course of medical treatment prescribed by a doctor who can explain it to you, or a machine that can’t explain it but is more likely to be right. It is a choice — and not an easy one.

But at the end of the day, handing all decisions over to the most powerful AI tools, with the risk of them going rogue, is not, indeed, sustainable.

At the same conference, Pega CTO Don Schuerman discussed a vision for “Autopilot,” an AI-powered solution to help create the autonomous enterprise. “My hope is that we have some variation of it in 2024. I think it’s going to take governance and control.” Indeed it will: Few of us, for example, want to board a plane that has autopilot only and no human in the loop.

The human in the loop

Keeping a human in the loop was a constant mantra at the conference, underscoring Pega’s commitment to responsible AI. As long ago as 2017, it launched the Pega “T-Switch,” allowing businesses to dial the level of transparency up and down on a sliding scale for each AI model. “For example, it’s low-risk to use an opaque deep learning model that classifies marketing images. Conversely, banks under strict regulations for fair lending practices require highly transparent AI models to demonstrate a fair distribution of loan offers,” Pega explained.

Generative AI, however, brings a whole other level of risk — not least to customer-facing functions like marketing. In particular, it really doesn’t care whether it’s telling the truth or making things up (“hallucinating”). In case it’s not clear, these risks arise with any implementation of generative AI and are not specific to any Pega solutions.

“It’s predicting what’s most probable and plausible and what we want to hear,” Pega AI Lab director Peter van der Putten explained. But that also explains the problem. “It could say something, then be extremely good at providing plausible explanations; it can also backtrack.” In other words, it can come back with a different — perhaps better — response if set the same task twice.

Just prior to PegaWorld, Pega announced 20 generative AI-powered “boosters,” including gen AI chatbots, automated workflows and content optimization. “If you look carefully at what we launched,” said van der Putten, “almost all of them have a human in the loop. High returns, low risk. That’s the benefit of building gen AI-driven products rather than giving people access to generic generative AI technology.”

Pega GenAI, then, provides tools to achieve specific tasks (with large language models running in the background); it’s not just an empty canvas awaiting human prompts.

For something like a gen AI-assisted chatbot, the need for a human in the loop is clear enough. “I think it will be a while before many companies are comfortable putting a large language model chatbot directly in front of their customers,” said Schuerman. “Anything that generative AI generates — I want a human to look at that before putting it in front of the customer.”

Four million interactions per day

But putting a human in the loop does raise questions about scalability.

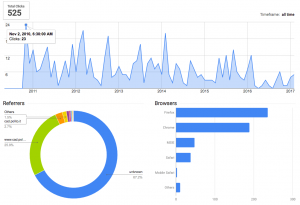

Finbar Hage, VP of digital at Dutch banking and financial services company Rabobank, told the conference that Pega’s Customer Decision Hub processes 1.5 billion interactions per year for them, or around four million per day. The hub’s job is to generate next-best-action recommendations, creating a customer journey in real time and on the fly. The next-best-action might be, for example, to send a personalized email, and gen AI offers the possibility of creating such emails almost instantly.

Every one of those emails, it is suggested, needs to be approved by a human before being sent. How many emails is that? How much time will marketers need to allocate to approving AI-generated content?
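The scale question can be roughed out with simple arithmetic. The sketch below is only a back-of-the-envelope illustration: the 1.5 billion figure is the one Rabobank quoted, while the share of interactions that result in an AI-written email and the time a marketer spends on each review are hypothetical values chosen to show the calculation, not numbers reported at the conference.

```python
# Back-of-the-envelope estimate of the human review workload.
# Only INTERACTIONS_PER_YEAR comes from the article; every other
# value below is a hypothetical assumption for illustration.

INTERACTIONS_PER_YEAR = 1_500_000_000  # figure quoted by Rabobank


def interactions_per_day(per_year: int) -> float:
    """Average daily interactions, assuming a 365-day year."""
    return per_year / 365


def reviewer_hours_per_day(
    per_day: float, email_share: float, seconds_per_review: float
) -> float:
    """Hours of human review needed per day if every email is approved by hand."""
    emails = per_day * email_share
    return emails * seconds_per_review / 3600


per_day = interactions_per_day(INTERACTIONS_PER_YEAR)

# Hypothetical: 1% of interactions produce an AI-written email,
# and each approval takes a marketer 30 seconds.
hours = reviewer_hours_per_day(per_day, email_share=0.01, seconds_per_review=30)

print(f"{per_day:,.0f} interactions per day")
print(f"{hours:,.0f} reviewer-hours per day")
```

The first line of output confirms the article’s “around four million per day” (about 4.1 million). Under the assumed 1% share and 30-second review, the second works out to roughly 340 reviewer-hours every day, which is why the level of review has to be matched to the level of risk rather than applied uniformly.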

Perhaps more manageable is the use of Pega GenAI to create complex business documents in a wide range of languages. In his keynote, chief product officer Kerim Akgonul demonstrated the use of AI to create an intricate workflow, in Turkish, for a loan application. The template took account of global business rules as well as local regulation.

Looking at the result, Akgonul, who is himself Turkish, could see some errors. That’s why the human is needed; but there’s no question that AI-generation plus human approval seemed much faster than human generation followed by human approval could ever be.

That’s what I heard from each Pega executive I questioned about this. Yes, approval is going to take time, and businesses will need to put governance in place (“prescriptive best practices,” in Schuerman’s phrase) to ensure that the right level of governance is applied, depending on the level of risk.

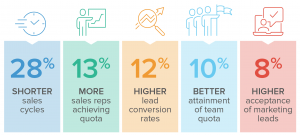

For marketing, in its essentially customer-facing role, that level of governance is likely to be high. The hope and promise, however, is that AI-driven automation will still get things done better and faster.

The post Mitigating the risks of generative AI by putting a human in the loop appeared first on MarTech.
