A/B testing is the industry standard for any digital activity aimed at increasing conversions or doing the best for your audience. Whether it’s websites, email campaigns or in-app communication, everyone says, “Don’t forget to A/B test!”

But maybe you should. Because honestly, A/B testing isn’t the only kind of test. It’s just the only kind of test we’ve been able to easily do.

Only in the last few years has the alternative – no, it is NOT multivariate testing (more on that later) – become practical: Multi-Armed Bandit testing.

What Is The Multi-Armed Bandit Test?

Multi-armed bandit is a thought experiment that refers to a problem in probability theory. Don’t fall asleep yet, I promise it’s not that bad!

Here’s the premise: You walk into a roomful of slot machines (which are called one-armed bandits because they have a lever – an “arm” if you will – and they tend to lighten your pockets).

So: which of these machines pays out the most?

If there’s a machine in the room that pays out twice as often as the others, or twice as much, that’s the one you want, right? So when you have to choose which machine to use, you have a multi-armed bandit problem:

Knowing that each machine provides a random reward, you need to decide which machines to play, how many times to play each machine, and in which order to play them. Your objective is to maximize the sum of rewards earned through a sequence of lever pulls.

So what do you do?

Maximizing Multiple Slot Machine Pay Out With The A/B Method:

Using an A/B testing approach, you could pick two machines and test them, then stick with the best one.

Or you could test them all, one against the other, sequentially, pitting each new one against the one that has performed best so far. That’s of course provided you had a lot of time and money, and it was a very understanding casino…

Maximizing Multiple Slot Machine Pay Out With The Multi-armed Bandit Method:

Using a multi-armed bandit method:

1. Split 10% of the tokens you have equally among all of the machines (this is your exploration phase).

2. At the same time, use the other 90% of the tokens in the machine that rewards you the most (this is your exploitation phase). If machine 1 pays out best, use that one… until your simultaneously ongoing exploration points to another machine.

While the percentages are up to you, hopefully you win some money and use it to explore and exploit more. In general, this is how you best play these multi-armed bandits.
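To make the two steps above concrete, here is a minimal Python sketch of that 10%/90% split on simulated machines. The payout rates, the number of pulls and the function name `epsilon_greedy` are made up for illustration, and exploration here simply picks a machine at random rather than dividing tokens exactly equally.

```python
import random


def epsilon_greedy(payout_rates, pulls=10_000, epsilon=0.1, seed=0):
    """Play simulated slot machines with a fixed explore/exploit split.

    payout_rates: hypothetical win probability of each machine
                  (the player does not get to see these).
    Returns (pull counts per machine, total reward).
    """
    rng = random.Random(seed)
    n = len(payout_rates)
    counts = [0] * n      # how often each machine was played
    totals = [0.0] * n    # total reward seen per machine
    reward_sum = 0.0

    for _ in range(pulls):
        if rng.random() < epsilon:
            # Explore: spend roughly 10% of the tokens on a random machine.
            arm = rng.randrange(n)
        else:
            # Exploit: play the machine with the best average so far.
            means = [totals[i] / counts[i] if counts[i] else 0.0 for i in range(n)]
            arm = max(range(n), key=means.__getitem__)
        reward = 1.0 if rng.random() < payout_rates[arm] else 0.0
        counts[arm] += 1
        totals[arm] += reward
        reward_sum += reward
    return counts, reward_sum


# Three imaginary machines; the third pays out most often.
counts, reward = epsilon_greedy([0.05, 0.10, 0.20])
print(counts, reward)
```

Because exploring never stops, the averages keep updating, so the "best" machine can change at any point during the run.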

How Is It Different From Regular A/B Testing?

When you A/B test a website you’ll run two possibilities – two styles of button, two blocks of copy, two seconds until the chat window pops up, etc. – and assess them in terms of conversions according to your chosen metric(s). The winner stays on, the lower performer gets the elbow.

To see how a bandit test differs, we need to drill a little deeper into testing methodology. A/B testing uses a short exploration phase and a long exploitation phase. They’re discrete; you do one, and then the other. You find out which machine is the best, then you pump the handle on that one “forever”.
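For comparison, the A/B-style approach can be sketched as two separate phases. This is only an illustration on simulated machines: the function name `explore_then_commit`, the payout rates and the test length are assumptions, and a real A/B test would pick its winner with a significance test rather than a raw count.

```python
import random


def explore_then_commit(payout_rates, pulls=10_000, test_pulls_per_arm=500, seed=0):
    """Test every machine equally, then play only the winner."""
    rng = random.Random(seed)
    n = len(payout_rates)

    def pull(arm):
        # Simulated payout: 1.0 on a win, 0.0 otherwise.
        return 1.0 if rng.random() < payout_rates[arm] else 0.0

    # Phase 1 (exploration): give every machine the same number of pulls.
    wins = [sum(pull(arm) for _ in range(test_pulls_per_arm)) for arm in range(n)]
    winner = max(range(n), key=wins.__getitem__)

    # Phase 2 (exploitation): play only the winner; nothing else is measured again.
    remaining = pulls - n * test_pulls_per_arm
    reward = sum(wins) + sum(pull(winner) for _ in range(remaining))
    return winner, reward


winner, reward = explore_then_commit([0.05, 0.10, 0.20])
print(winner, reward)
```

Once phase 2 starts, the losing machines are never looked at again, which is exactly the limitation discussed next.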

But suppose some of the machines change over time? Suppose the repair guy comes around and fixes some, or changes the payout schedule or algorithm? Then your A/B test is a little less reliable – not because of the number of variables, but because its discrete exploration and exploitation phases stop you from learning and earning at the same time and, crucially, only allow you to select one answer.
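One common way to cope with machines that change is to weight recent results more heavily than old ones. The sketch below is not from any of the sources quoted here; it just contrasts a plain running average with an exponential recency-weighted average on a made-up machine that stops paying after a "repair". The function names and the step size of 0.1 are illustrative assumptions.

```python
def sample_mean_update(estimate, n, reward):
    """Plain running average over all n rewards seen so far (n includes this one)."""
    return estimate + (reward - estimate) / n


def recency_weighted_update(estimate, reward, step=0.1):
    """Exponential recency-weighted average: recent rewards count more."""
    return estimate + step * (reward - estimate)


# Hypothetical machine: pays 1.0 per pull for 100 pulls, then is "repaired" and pays 0.0.
rewards = [1.0] * 100 + [0.0] * 100

mean_est, recent_est = 0.0, 0.0
for n, r in enumerate(rewards, start=1):
    mean_est = sample_mean_update(mean_est, n, r)
    recent_est = recency_weighted_update(recent_est, r)

print(round(mean_est, 3), round(recent_est, 5))
```

After the change, the plain average still reports a payout of about 0.5, while the recency-weighted estimate has dropped close to zero, so a bandit using it would move away from the broken machine much sooner.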

So How About Multivariate Testing?

A word about multivariate testing here.

When you do a multivariate test, you are not pitting multiple versions of a page (with permutations of a single element) against each other, as you would in an A/B/n test. Instead, you test multiple elements within a single page, which may or may not have multiple conversion goals.

The point of a multivariate test is to give you an idea of which elements on a page play the biggest role in letting you achieve the objective of that page.

In other words, this type of test identifies the best combination of element variants which, taken together, has a tendency to consistently produce the greatest increase in conversions. So, multivariate testing is not an alternative to A/B testing; it serves a different purpose altogether.

Going back to the casino, you are not playing around with the parts of a slot machine; you are trying out different machines altogether with variations on a single element – the payout (the metrics being amount and frequency). Which is why we’re comparing the viability of multi-armed bandit to that of A/B as opposed to multivariate testing.

Types Of Multi-Armed Bandit Setups

There are multiple forms of MAB testing, each with its own science fiction-esque name.

Zak Aghion, Co-Founder of Splitforce, shared details about the most popular ones in a post on Quora:

Epsilon-Greedy: the rates of exploration and exploitation are fixed

Upper Confidence Bound: the rates of exploration and exploitation are dynamically updated with respect to the UCB of each arm

Thompson sampling: the rates of exploration and exploitation are dynamically updated with respect to the entire probability distribution of each arm
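To give a feel for how the two dynamic variants choose an arm, here is a rough Python sketch. It assumes win/lose rewards and uses the textbook UCB1 bound and a Beta-distribution form of Thompson sampling; the function names are illustrative, and real products may implement these differently.

```python
import math
import random


def ucb1_choice(counts, successes):
    """Pick the arm with the highest upper confidence bound (UCB1)."""
    for arm, n in enumerate(counts):
        if n == 0:
            return arm  # play every arm once before comparing bounds
    total = sum(counts)
    bounds = [
        # Observed rate plus a bonus that shrinks as an arm gets more plays.
        successes[a] / counts[a] + math.sqrt(2 * math.log(total) / counts[a])
        for a in range(len(counts))
    ]
    return max(range(len(counts)), key=bounds.__getitem__)


def thompson_choice(counts, successes, rng=random):
    """Draw a plausible rate per arm from Beta(wins + 1, losses + 1); pick the highest draw."""
    draws = [
        rng.betavariate(successes[a] + 1, counts[a] - successes[a] + 1)
        for a in range(len(counts))
    ]
    return max(range(len(counts)), key=draws.__getitem__)


# Made-up history: arm 2 has barely been tried, so UCB1 gives it the benefit of the doubt.
counts = [100, 100, 5]
successes = [10, 20, 1]
print(ucb1_choice(counts, successes))      # picks the under-explored arm 2
print(thompson_choice(counts, successes))  # random, weighted by how promising each arm looks
```

In both cases the amount of exploration is not a fixed percentage: arms with little data get tried more, and arms that are clearly behind get tried less and less.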

Epsilon-Greedy is by far the most used, because of its (relative) simplicity and because it has been proven adequate for so many practical applications. Ex-Microsoft software developer Steve Hanov explains that more sophisticated implementations “may eke out only a few hundredths of a percentage point of performance.”

If you’re comfortable with a few lines of code and you’d like to run your own epsilon-greedy bandits from scratch, Steve offers a 20-line recipe in the same post.

What Can You Test With MAB?

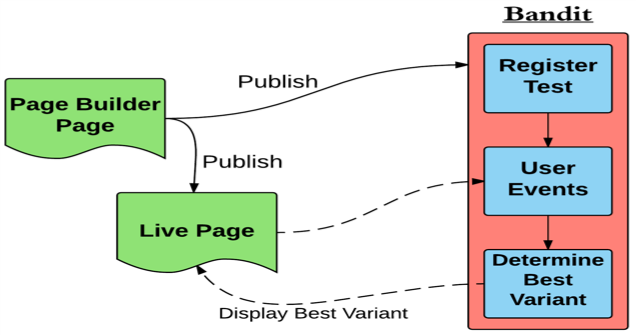

“Bandito” – Washington Post’s Real Time MAB Testing Framework

Anything you can test with A/B testing you can test with MAB. The difference is that instead of picking one option and sticking with it no matter what, a Multi-Armed Bandit test either:

- ideally runs forever and is always able to favor the current winner, or

- runs for a set amount of time, but during that time you’re not losing money by knowingly continuing to show an option that isn’t making you money

Click-feed pages, like Buzzfeed’s, often work with a Multi-Armed Bandit setup where the algorithm automatically presents the articles in the order of most likely to be clicked by readers.

But you can just as easily test 2 headlines for your article and instead of showing the loser 50% of the time, have the algorithm favor the winner early on (show it more often) but still give the other one a fair chance (by showing it every now and then to see if maybe it has started to perform better).

A sure sign you can do all kinds of tests with MAB is that the statistical engine of Google Analytics Content Experiments uses multi-armed bandit:

Experiments based on multi-armed bandits are typically much more efficient than “classical” A-B experiments based on statistical-hypothesis testing. They’re just as statistically valid, and in many circumstances they can produce answers far more quickly. They’re more efficient because they move traffic towards winning variations gradually, instead of forcing you to wait for a “final answer” at the end of an experiment. They’re faster because samples that would have gone to obviously inferior variations can be assigned to potential winners. The extra data collected on the high-performing variations can help separate the “good” arms from the “best” ones more quickly.

Basically, bandits make experiments more efficient, so you can try more of them. You can also allocate a larger fraction of your traffic to your experiments, because traffic will be automatically steered to better performing pages.

Criticism

MAB-based testing has also come under criticism for over-optimizing higher performing pages: because the algorithm sends more traffic to higher performing content, it is likely to reinforce small differences in low-traffic experiments and lead to skewed results.

If that’s happening (say, if you’re running Content Experiments and the results are coming back looking all kinds of crazy, with spikes in historically low-performing Goals), it may be time for a “sanity test” – consider doing your own A/B tests on those pages and comparing the results. Test your testing, sort of.

So Which Is Better – A/B Or Multi-armed?

If you really, really want the answer to that question, here it is:

- A/B is better. (Discussion on Hacker News)

- MAB is better. (Discussion on Hacker News)

- It’s complicated. (Discussion on Hacker News)

The question of which of these two testing methodologies is better is, like many such questions, a bit like asking whether a Hummer is better than a Lamborghini. Obviously, the answer depends on your needs. It makes more sense to start by looking at the business scenarios in which each test type excels.

In Bandit Algorithms for Website Optimization, author John Myles White identifies two key problems with A/B testing: One, you’re wasting time exploring suboptimal solutions long after it becomes obvious that they’re suboptimal. Two, the discrete jump from exploration to exploitation is inherent to the model even if it’s holding you back.

By contrast, bandit tests are adaptive, and continue to explore even as they exploit. So they contain a built-in way to deal with issues like seasonality, which confound A/B tests unless those issues are already well understood.

Bandit tests excel for very short or very long testing cycles. If you’re running a brief test to establish where to start, bandits are probably your go-to. On the other end of the spectrum, if you’re doing a long-term, multi-version test of web pages describing a complex process or containing revenue-critical elements, MAB might be your best choice.

A/B tests excel for mid-length test cycles, when only the variables being tested influence the result and other variables are fairly well understood. Unlike the ongoing approach offered by bandit testing, A/B tests force you to wait for a final result before you have the requisite “significant” data to act on, which is why they’re better suited to mid-length tests.
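What “significant” means in practice depends on the tool, but a common check for two conversion rates is a two-proportion z-test. The sketch below is one standard way to compute it, not the method of any particular testing product, and the visitor and conversion numbers are invented.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the assumption that A and B really convert the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail
    return z, p_value


# Invented example: 5,000 visitors per variation, 200 vs. 260 conversions.
z, p = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=260, n_b=5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With these made-up numbers the p-value falls below 0.01, so most teams would call B the winner. With a tenth of the traffic, the same rates would not clear that bar, which is why an A/B test has to run its course before you act on it.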

If you have long-running tests aimed at perennial optimization, bandits are a better bet; they let you iterate testing and feed that information into your own actions. Google’s Steven L. Scott explains, in the official overview of Content Experiments, how they “take a fresh look at your experiment to see how each of the variations has performed, and we adjust the fraction of traffic that each variation will receive going forward.” That’s a level of agility A/B testing can’t match. But because MAB methods don’t usually test any one variable for a long time, they take a long time to produce results that are statistically significant. They are also likely to reinforce success rather than discover and clarify failure, which makes them a better choice for some, but not all, marketing purposes.

So coming back to our question, which is better? I think it’s best to ask when instead:

- When should you use MAB instead of A/B?

- When should you use A/B instead of MAB?

Over To You

Ultimately, choosing between bandit and A/B comes down to the specific case you’re using it for. As we’ve seen, bandit excels in some areas, A/B in others. Asking which one is “better” is an apples-to-oranges comparison: neither is meant to beat the other, and each is a good fit for different projects. But knowing the distinction and the scenarios in which to implement them makes the decision a whole lot easier. Please discuss in the comments if you have anything to add or if you’ve conducted some high-volume multi-armed bandit testing!

Resources

- Multi-armed bandit experiments @ Google, by Steven L. Scott, PhD, Sr. Economic Analyst

- When to Run Bandit Tests Instead of A/B/n Tests @ ConversionXL, by Alex Birkett, Growth Marketer and Content Strategist

- FlowSplit: experimental WordPress Multi-Armed Bandit plugin based on Steve Hanov’s 20 lines of code that will beat A/B testing every time

- Bandito – The Washington Post’s real-time multi-armed bandit content testing framework


* Images: Public Domain, pixabay.com via getstencil.com


The post Multi Armed Bandits & A/B Testing: Which Makes You More Money appeared first on Search Engine People Blog.
