Now You See It — With AI

by Sarah Fay, February 22, 2018

The following post was published in an earlier edition of AI Insider:

It seems to me the science of AI (technology that artificially replicates the way the human brain perceives, reacts and learns) is regularly underappreciated. We naturally take for granted the human brain’s ability to perform simple tasks such as listening and understanding, or seeing and identifying — but these brain functions have evolved over millions of years to become refined and automatic. What’s required for a computer to mimic these capabilities shouldn’t be underestimated.

If you show any three-year-old an image of a dog, she can tell you it’s a dog, and that is considered unremarkable, even though her brain is doing some fancy work to identify that image. For a computer to do the same requires a form of AI development called “deep learning,” which trains the algorithm to recognize what a dog looks like based on patterns in the arrangement of light and dark pixels.

Untrained, when shown an image of a dog, the computer will merely perceive a dark blob. It must be shown thousands of canine images labeled “dog,” along with thousands of images that contain no dog, labeled “not a dog,” before it can identify canines in all their many shapes and sizes.
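To make the idea of learning from labeled examples concrete, here is a minimal sketch in Python. It is an illustration under loud assumptions, not how any product mentioned in this post works: the 4x4 "images" are synthetic, the rule that "dog" images have a bright center patch is invented for the demo, and a single-layer logistic model stands in for a real deep network. All function names are hypothetical.

```python
import math
import random


def make_image(is_dog, rng, size=4):
    """Return a flat list of pixel intensities in [0, 1].

    Toy assumption: "dog" images have a bright 2x2 patch in the centre,
    "not a dog" images are uniformly dark. Real photos are far messier.
    """
    pixels = []
    for r in range(size):
        for c in range(size):
            centre = 1 <= r <= 2 and 1 <= c <= 2
            base = 0.8 if (is_dog and centre) else 0.2
            noisy = base + rng.uniform(-0.15, 0.15)
            pixels.append(min(1.0, max(0.0, noisy)))
    return pixels


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, pixels):
    """Estimated probability that the image is a dog."""
    z = bias + sum(w * p for w, p in zip(weights, pixels))
    return sigmoid(z)


def train(examples, epochs=50, lr=0.5):
    """Fit one weight per pixel with stochastic gradient descent."""
    n = len(examples[0][0])
    weights = [0.0] * n
    bias = 0.0
    for _ in range(epochs):
        for pixels, label in examples:
            # Positive error means the model leaned too far toward "dog".
            error = predict(weights, bias, pixels) - label
            for i in range(n):
                weights[i] -= lr * error * pixels[i]
            bias -= lr * error
    return weights, bias


rng = random.Random(0)
# Labeled examples: 1 means "dog", 0 means "not a dog".
training = [(make_image(True, rng), 1) for _ in range(100)]
training += [(make_image(False, rng), 0) for _ in range(100)]
rng.shuffle(training)

weights, bias = train(training)

test_dog = make_image(True, rng)
test_other = make_image(False, rng)
print("dog image scored:", round(predict(weights, bias, test_dog), 3))
print("other image scored:", round(predict(weights, bias, test_other), 3))
```

Before training, every weight is zero and the model scores any image at 0.5, the numerical equivalent of seeing a blob. Only after repeated passes over both kinds of labeled examples do the scores separate. A production system would use many layers and real photographs, but the labeled-example loop has the same shape.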

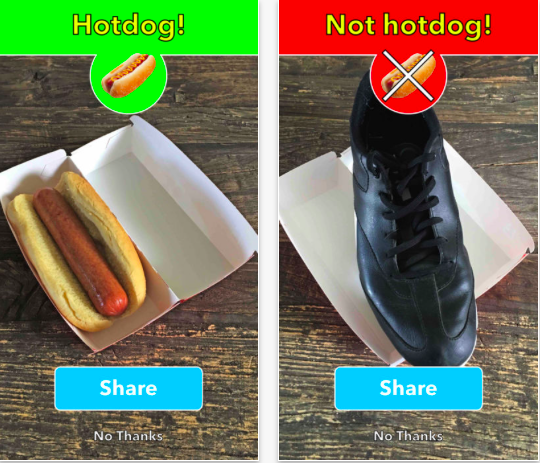

“Silicon Valley” fans may remember the farcical app developed by SeeFood Technologies, which identified food items as “hotdog” or “not a hotdog.” This made for good comedy, taking a whack at technology without a purpose, and giving an inside wink to computer vision developers.

In fact, Not a Hotdog is a real app created for the show using Google’s TensorFlow, Keras and Nvidia hardware, holding an impressive 4.6-star rating from 479 real users in the iPhone App Store, and now available on Android. Thumbs up to the writers of “SV” for stimulating thousands of downloads for a laugh.

In reality, computer vision has advanced to the point where algorithms can identify animals, objects, places and people, and even specific individuals through facial recognition, which is nothing short of miraculous when you think about it. There are a number of ways for brands to leverage computer vision to be more in tune with the visual Web.

For example, GumGum uses computer vision to align a brand’s advertising messages contextually with images that reflect that brand.

The major behavioral shift toward sharing photos and images on platforms such as Instagram and Snapchat has made visual marketing an imperative. Beyond photo-sharing platforms, every digital content provider has become more image-rich, because people are more responsive to images than text — way more.

For example, tweets with images are shared 150% more than text-only content, according to “The Rise of the Visual Web. And Why It’s Changing Everything (Again) for Marketers,” a white paper developed by GumGum. The company’s CEO, Ophir Tanz, says that’s because “humans are visual creatures. We are not natively readers – that’s a higher order operation, not a basic capability.”

Simply put, the brain processes images faster than text — 60,000 times faster, according to a 3M study conducted in 1986 that’s also cited in the GumGum paper. Our eyes naturally gravitate to images, because we can pick up information faster that way.

Tanz cites proof of this: “In every eye-tracking study I’ve seen, the heat goes to the images on the screen.” He also notes that multiple studies have shown people can quickly distinguish the stock imagery used in advertising from real-world images and content, which are favored with much more attention.

Advertisers using computer vision in their campaigns can calculate the benefits with a couple of assumptions: 1. With closer adjacency to images embedded within editorial content, the advertising message will garner higher levels of attention; and 2. the creative budget will be lower as publishers’ images become part of the creative strategy.

All this should result in higher engagement and overall returns for the advertiser, a theory that appears to be proving out. Andrea Van Dam, CEO of media agency Women’s Marketing Inc., says, “So many of our clients are in fashion and beauty, where visuals play a critical role. By using computer vision targeting methods such as GumGum’s, we’re seeing a significant uptick in engagement, with up to 14X that of standard rates.” (Full disclosure: I am on the board of WMI, which is how I first learned about GumGum.)

In addition to contextual content targeting for advertisers, computer vision can also deliver marketing insights. For instance, GumGum has an offering that captures all the instances of brand exposure in recorded sports events and across viral sharing platforms, which is useful in calculating the value of those sponsorships.

Another computer vision startup, Netra, detects social sharing of brand images, offering insights about customers who spontaneously include brand images in their feeds. Richard Lee, CEO of Netra, notes that “Consumers today are openly sharing billions of images per day on social media. Hidden inside these photos & videos are incredibly valuable insights on what consumers care about: their activities & interests, key life events, who they hang out with, and what brands they use.”

The thought here is that “visual listening” to what consumers are posting in their imagery is more accurate than hashtags alone or traditional surveys, focus groups, or questionnaires, and therefore even more valuable for targeting.

Several others — Cortex, Adhark and Wevo — say they can predict which marketing images and messages will produce the best results, eliminating guesswork. And companies such as TVision Insights (I am an angel investor) and Affectiva are using facial recognition technology to help advertisers understand emotive reactions and attention to advertising and content — another way to more accurately calculate the value of advertising.

By using computer vision technologies to refine or define creative strategies, marketers will accelerate an industrywide shift, as the creative process becomes more closely tied to technology and data strategies. This journey has just begun, and there is enormous potential for marketers to improve results, as I have written about previously.

Recognizing that creative is a key (and likely the biggest) determinant in driving ROI is the first step to leveraging visual data. And AI can now be used to “see” the best options for brands to improve.

MediaPost.com: Search Marketing Daily
