Nvidia Introduces Latest AI Chips That Google, Microsoft, Amazon Will Use

The future of advertising and other industries will change with the emergence of Nvidia’s latest chips and software.

On Monday, the company introduced the latest generation of artificial intelligence (AI) chips and software for running AI models, as the chipmaker looks to cement its position as the main supplier for companies building AI platforms such as Google, Microsoft, Amazon, Meta and others.

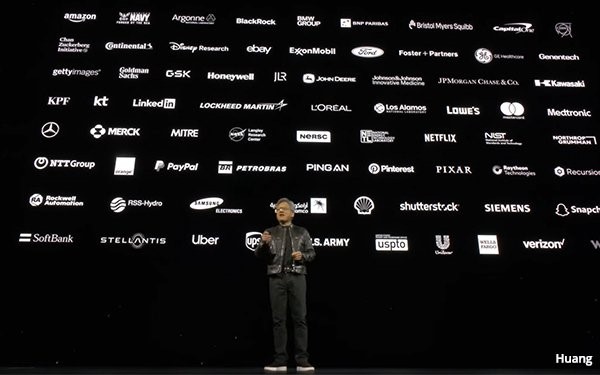

Jensen Huang, Nvidia founder and CEO, introduced the technology on Monday at the company’s developer conference in San Jose, calling the graphic part of his presentation a “home movie.”

Blackwell is the name of the new generation of AI graphics processors.

The first Blackwell chip is called the GB200 and will ship later this year, even as companies and software makers continue to scramble to buy Nvidia’s current generation of Hopper H100s and similar chips.

“Hopper is fantastic, but we need bigger GPUs,” Huang said.

“$100 trillion of the world’s industries are represented in this room today,” he said.

The list of presenters from outside the IT industry was vast and included companies such as Disney Research, L’Oreal, Uber, UPS and many others.

More than 11,000 people attended in person and many tens of thousands more joined online, according to the company’s blog post.

Another way of computing is required to scale and drive down the cost, Huang told attendees. “Accelerated computing is a dramatic speedup over general-purpose computing, in every single industry,” he said.

Huang also presented Nvidia NIM. The company describes it as a new way of packaging and delivering software that connects developers with hundreds of millions of GPUs to deploy custom AI.

Nvidia, founded in 1993, started as a graphics developer mostly known for video-game platforms, but by 2016 the company had invested in a new type of computer, calling it DGX-1. Huang hand-delivered the company’s first DGX computer to OpenAI, at the time a San Francisco startup.

Nvidia built a new chip to scale up Blackwell. The chip is called NVLink Switch. “Each can connect four NVLink interconnects at 1.8 terabytes per second and eliminate traffic by doing in-network reduction,” according to the blog post.

Several important partnerships were announced to accelerate the development of GAI, including with Ansys, Synopsys, and Cadence. Nvidia also expanded partnerships with Dell, SAP, Microsoft, Google Cloud, and many others.
