Many of us have probably heard the terms sitemap and robots.txt used in connection with a particular platform or website. Surprisingly, not many business owners know what sitemap.xml and robots.txt actually are.

Their apparent complexity may be the number one reason why marketers and business owners do not consider these files a big deal. Yet they can have a significant influence on a business's online presence and on customer engagement.

In this review, we will dive into the major differences between robots.txt and sitemap.xml and why each of them matters. Before we dive in, we first need to cover a few points that will help you understand how these files work.

Crawling (Spidering) a website is not the same as indexing!

Many of us have heard the term “crawling” in a computing context before, right? Well, crawling isn’t the same as indexing a website. Let us elaborate:

Crawling

“Crawling” is an automated process in which software (a crawler, also called a spider or bot) fetches web pages and then reads them. Reading the content lets the search engine understand what each page contains, including whether it duplicates content that already exists elsewhere.

The crawler then follows the links on each page to other pages, and from those to still more pages, until it has worked its way across a huge number of pages and sites. Because it moves along links like a spider across a web, this crawling process is also known as spidering.
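To make the link-following idea concrete, here is a minimal Python sketch of a breadth-first crawler. It is only an illustration, not how Google or any other search engine actually crawls: the `SITE` dictionary is a hypothetical stand-in for real HTTP requests, and the `crawl` and `LinkExtractor` names are our own.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the absolute URLs of all <a href="..."> links on one page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    # Resolve relative links such as "/about" against the page URL.
                    self.links.append(urljoin(self.base_url, value))


def crawl(start_url, fetch, max_pages=100):
    """Breadth-first crawl: fetch a page, queue every new link it contains, repeat."""
    seen = {start_url}
    queue = deque([start_url])
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)  # the page's HTML, or None if it is unavailable
        if html is None:
            continue
        visited.append(url)
        extractor = LinkExtractor(url)
        extractor.feed(html)
        for link in extractor.links:
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


# Hypothetical four-page site held in memory instead of fetched over HTTP.
SITE = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog": '<a href="/blog/post-1">First post</a>',
    "https://example.com/blog/post-1": '<a href="/about">About us</a>',
}

print(crawl("https://example.com/", SITE.get))
```

The `seen` set is what stops the crawler from looping forever when pages link back to each other, as the home and about pages do here.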

After arriving at a website, but before spidering it, the search crawler will look for a robots.txt file. If it finds one, the crawler will read that file first before continuing through the site.

Because the robots.txt file contains information about how the search engine should crawl the site, the information found there will direct further crawler activity on that particular website.

If the robots.txt file doesn’t contain any directives that disallow a user agent’s activity (or if the site doesn’t have a robots.txt file at all), the crawler will go on to crawl other information on the site.
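Here is a small Python sketch of that check, using the standard library's `urllib.robotparser` module. The rules, the `ExampleBot` user agent and the helper names (`make_robots_parser`, `may_crawl`) are hypothetical, and the robots.txt text is assumed to have been downloaded already.

```python
from urllib.robotparser import RobotFileParser


def make_robots_parser(robots_txt):
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def may_crawl(robots_txt, user_agent, url):
    """Return True if the rules in robots_txt let user_agent fetch url."""
    return make_robots_parser(robots_txt).can_fetch(user_agent, url)


# Hypothetical rules: every crawler is asked to stay out of /staging/.
RULES = """\
User-agent: *
Disallow: /staging/
"""

print(may_crawl(RULES, "ExampleBot", "https://example.com/staging/home.html"))  # False
print(may_crawl(RULES, "ExampleBot", "https://example.com/products.html"))      # True
# An empty robots.txt contains no directives, so nothing is disallowed.
print(may_crawl("", "ExampleBot", "https://example.com/staging/home.html"))     # True
```

The last call mirrors the case described above: with no disallowing directives, the crawler simply carries on.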

Indexing

Indexing, also an automated software process, is the step in which the content a crawler has fetched is analyzed and stored in the search engine's index (a very large database), so that it can be quickly filtered and searched by people using platforms like Google, Yahoo and Bing.
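Search engines do not publish their indexing internals, but a common textbook data structure for this step is an inverted index, which maps each word to the pages that contain it. The Python sketch below assumes very simple tokenizing and hypothetical page data; real search engines add ranking, spelling correction and much more.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lower-case the text and split it into simple alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Map each word to the set of page URLs that contain it (an inverted index)."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index


def search(index, query):
    """Return the URLs that contain every word in the query."""
    words = tokenize(query)
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


# Hypothetical crawled pages: URL -> page text.
PAGES = {
    "https://example.com/beans": "Fresh roasted coffee beans",
    "https://example.com/mugs": "Coffee mugs and tea cups for sale",
}

INDEX = build_index(PAGES)
print(search(INDEX, "coffee"))        # both URLs
print(search(INDEX, "coffee beans"))  # only the beans page
```

Because the index is built once, answering a query only needs a few dictionary lookups rather than re-reading every page, which is the point of indexing ahead of time.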

Sitemaps & Robots

As time goes on, technology can seem to keep growing more complex; sometimes that complexity is unavoidable, and sometimes it is easier to grasp than it first appears.

Nevertheless, understanding the role these technologies play on your website can not only help you preserve and strengthen your brand, but also create a vital channel through which your site is shown to potential buyers who may not even be searching for the services, solutions or products your company provides.

What is a Sitemap?

Specifically, sitemaps are designed to help Google and other major search engines crawl your site more efficiently. They do this by giving search engine crawlers a list of the pages (URLs) on your site, so the crawlers can find content they might otherwise miss.

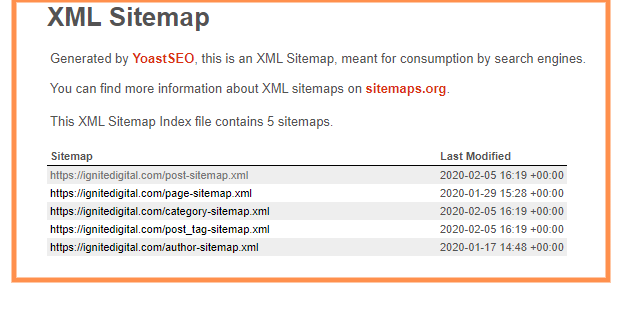

Sitemaps come in two types:

A) XML – used by search engines

B) HTML – used by your site's human visitors (users / searchers)
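For the XML type, a sitemap following the sitemaps.org protocol is a `<urlset>` element containing one `<url>` entry per page, each with a `<loc>` (the page address) and an optional `<lastmod>` date. Here is a minimal Python sketch that generates one with the standard library; the page list and the `build_sitemap` name are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap.xml text from (url, lastmod) pairs; lastmod may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            # W3C date format, e.g. 2024-01-31
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages of an example site.
print(build_sitemap([
    ("https://example.com/", "2024-01-31"),
    ("https://example.com/about", None),
]))
```

ElementTree escapes characters such as `&` in URLs automatically. The resulting file is typically served from the site root (for example, https://example.com/sitemap.xml) and can also be referenced from robots.txt.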

What is a Robots.txt file?

A robots.txt file has a specific job. It is a plain-text file, placed at the root of a website, that contains instructions telling web robots how to crawl the site's pages.

Most of the time, these instructions are aimed at search engine robots.

The Importance

Do I Need to Consider This for My Business?

If you are considering going down the rabbit hole of SEO, then yes. If you want to gain credible recognition as a legitimate entity, making sure search engines crawl your site properly can help them recognize your pages as the original source of your content when competitors copy and paste from your site, and it also helps to create a legitimate image of your business.

The robots.txt file is part of the Robots Exclusion Protocol (REP), a group of web standards that regulate how robots crawl the web, access and index content, and serve that content up to users.

The REP also includes directives like meta robots, as well as page-, subdirectory-, or site-wide instructions for how search engines should treat links (for example, “follow” or “nofollow”).
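As a rough illustration of the page-level side of the REP, the Python sketch below reads a page's `<meta name="robots">` tag and reports whether a well-behaved crawler should index the page and follow its links. It only understands `noindex` and `nofollow`; the class and function names are hypothetical, and real crawlers also honor other directives and crawler-specific tags.

```python
from html.parser import HTMLParser


class MetaRobotsReader(HTMLParser):
    """Collect the directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            for directive in content.split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def page_rules(html):
    """Return (may_index, may_follow_links); both default to True."""
    reader = MetaRobotsReader()
    reader.feed(html)
    return "noindex" not in reader.directives, "nofollow" not in reader.directives


print(page_rules('<head><meta name="robots" content="noindex, nofollow"></head>'))  # (False, False)
print(page_rules("<head><title>Plain page</title></head>"))                      # (True, True)
```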

In practice, robots.txt files indicate whether certain user agents (web-crawling software) can or can’t crawl parts of a website. These crawl instructions are specified by “disallowing” or “allowing” the behavior of certain (or all) user agents.

Some common use cases include:

• Preventing duplicate content from appearing in SERPs (note that meta robots tags are often a better choice for this)

• Keeping crawlers away from internal documentation and other sensitive material (bear in mind that robots.txt only asks well-behaved crawlers to stay away; the file itself is public, so it is not a substitute for real access control)

• Keeping entire sections of a website private (for instance, your engineering team’s staging site)

• Keeping internal search results pages from showing up in public SERPs

• Specifying the location of your sitemap(s)

• Preventing search engines from crawling and indexing certain files on your website (images, PDF files, etc.)

• Specifying a crawl delay to keep your servers from being overloaded when crawlers request many pieces of content at once (note that not every crawler honors this directive; Googlebot, for example, ignores it)

The basic format of a robots.txt rule is:

User-agent: [user-agent name]
Disallow: [URL string not to be crawled]

Together, these two lines make up a complete robots.txt file. However, a single robots.txt file can contain multiple lines of user agents and directives (i.e., disallows, allows, crawl delays, and so on).
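Putting the use cases above together, here is a hypothetical robots.txt for example.com, checked with Python's `urllib.robotparser` (Python 3.8 or newer is needed for `site_maps()`). The paths, the `ExampleBot` user agent and the 10-second delay are assumptions for illustration only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: one group for Googlebot, one for every other crawler.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /staging/

User-agent: *
Disallow: /staging/
Disallow: /search
Disallow: /downloads/pdfs/
Crawl-delay: 10

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

PATHS = ("/staging/index.html", "/search?q=shoes", "/downloads/pdfs/price-list.pdf", "/products/")
for agent in ("Googlebot", "ExampleBot"):
    for path in PATHS:
        allowed = parser.can_fetch(agent, "https://example.com" + path)
        print(agent, path, "allowed" if allowed else "blocked")

print(parser.crawl_delay("ExampleBot"))  # 10
print(parser.crawl_delay("Googlebot"))   # None: its group sets no delay
print(parser.site_maps())                # ['https://example.com/sitemap.xml']
```

Googlebot only obeys its own group here, so the `*` rules for /search and /downloads/pdfs/ do not apply to it: a crawler follows the group that matches it most specifically. Also note that `urllib.robotparser` does simple prefix matching and does not understand the `*` and `$` wildcards that Google supports.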

What They Can Do For You

The Backbone of A Successful Site

As a golden rule, understanding the major differences between robots.txt and sitemaps, and how each of them actually works, can help a business or any other organization define the route that works best for it.

While exposure is essential for any business, using robots.txt and sitemaps together can have a dramatic impact on your authenticity, credibility and overall company image.

Digital & Social Articles on Business 2 Community
