Picture a Thousand Words (i.e., Make a Chart)

Adobe Analytics clients are genuinely surprised to learn how far off the mark their own reports have strayed. SAINT Classification technology isn’t rocket science—anyone who has used a Pivot table or a Lookup function in Excel is capable of getting their head fully around the deep magic involved. I could tell people a thousand times that their reports might not bear scrutiny, but instead let me make the case with four pictures that will do the job better.

I was preparing a Tracking First introduction for a client who asked that I use some of his own data for the demo. He sent me a SAINT classification file, and it served really well for the presentation; Tracking First easily detected their preferred style for creating and classifying their codes. Glancing at the spreadsheet itself, however, I saw that it contained many codes that should have been classified but clearly weren’t, and so I decided to dig a little deeper and analyze how well this specific SAINT table was being maintained.

A Viable Candidate

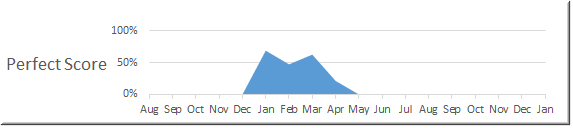

The first thing I found was that the report he picked had been receiving a pretty steady flow of new tracking codes for the previous six months.

Note that this graph isn’t showing traffic (visitors), but a simple count of the new tracking codes generated each month for this report. So here I discovered that this table was still relevant to some facet of this individual’s business.

So far so good.

First Blood: Distorted Codes

Starting in mid-September, however, the code generator(s) started to stray from the standards they themselves had set.

Codes were supposed to follow this format: [Prefix]+[Date in mmddyy format], and for the past quarter, one in four of the entries found in their SAINT table had strayed from that pattern.
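A check like this one is easy to sketch in Python. The following is only an illustration under stated assumptions, not the client’s actual rule: the source says only that codes are [Prefix]+[Date in mmddyy format], so the letters-only prefix and the names `CODE_PATTERN`, `follows_standard`, and `share_off_pattern` are all hypothetical.

```python
import re
from datetime import datetime

# Assumption: the prefix is one or more letters. The article does not say
# what a valid prefix looks like, so adjust this to your own standard.
CODE_PATTERN = re.compile(r"^(?P<prefix>[A-Za-z]+)(?P<date>\d{6})$")


def follows_standard(code: str) -> bool:
    """Return True if the code looks like [Prefix]+[mmddyy] with a real date."""
    match = CODE_PATTERN.match(code)
    if match is None:
        return False
    try:
        # Rejects impossible dates such as month 13 or February 31.
        datetime.strptime(match.group("date"), "%m%d%y")
    except ValueError:
        return False
    return True


def share_off_pattern(codes) -> float:
    """Fraction of codes that stray from the standard (0.0 for an empty list)."""
    codes = list(codes)
    if not codes:
        return 0.0
    strays = sum(1 for code in codes if not follows_standard(code))
    return strays / len(codes)
```

Run against the Key column of a classification export, `share_off_pattern` gives the kind of "one in four" figure described above.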

Pattern consistency isn’t crucial to the SAINT classification process, unless you are using some automated detection rules within Omniture—which almost everyone is, to one degree or another (Marketing Channel rules, VISTA rules, Processing Rules, or the Classification Rule Builder). All of these rules require complete adherence to the custom standards you have set in creating tracking codes if Omniture is expected to use those codes programmatically when they arrive.

And even if you aren’t using rules, many Adobe clients who have chosen not to use SAINT classifications are stuck with search filters as the only way to segment subsets within their tracking codes. Each new inconsistency within the tracking codes will fragment the ensuing reports and complicate those filters that give you a clear picture of how a campaign or any one of its facets is performing.

Where (and When) the Wheels Came Off

At this point of my audit, I had only focused on the tracking codes themselves; I hadn’t even reached the classifications yet.

When I did shift my focus to classifications, I determined that the client intended to classify each of these new codes in five different ways. One classification in particular, Campaign Name, outshone all the rest in terms of accuracy and longevity. It was almost always classified the right way—that is, until September, when classification work for this report (along with all others) ceased entirely. Complete blackout.

When I showed this to the client, he remembered that September was when Judy left the team and handed this job off to somebody else. (Judy’s not her real name; her real name is Ruprecht.)

That’s so typical! While SAINT is a straightforward technology, it requires attention to detail and consistent effort, and those rarely survive the handoff from one steward to the next unimpaired.

And the kicker is that Adobe provides NO WARNINGS ANYWHERE that the reports your business users may have bookmarked in SiteCatalyst are no longer displaying valid data. These analyses came as a total surprise to the client who gave me that file.

The Worst News

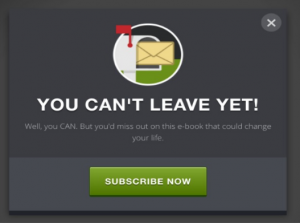

And the real zinger was yet to come. The worst news for this client was illustrated by my best graph:

Here you see that the job of preparing these five classification reports inside Adobe Analytics was never fully done. Not even close. For a while last spring this client’s team was perfectly creating and classifying about 50% of their codes, but even that half-hearted effort collapsed within three months of starting.

Notice that this effort actually preceded the burst of codes they created through the summer months (see the first graph, above). It’s no accident that the team stopped delivering, or even trying to deliver, on its original goals right when the work mattered most. More codes mean more data to prepare and upload to Adobe. Keeping a tight ship, classifications-wise, requires continual dedication and attention to detail. And because humans rarely have such qualities to spare, classification efforts like these usually show up as flailing bursts of energy followed by silence, just as this graph depicts.
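For readers who want to run a similar audit on their own classification file, here is a rough Python sketch of the "fully classified" measure. It is not the method used for the graph above; it rests on several assumptions: each row is a dict with the tracking code under a `Key` column, the code’s month is taken from its mmddyy suffix, and every column name except Campaign Name is a hypothetical placeholder.

```python
from collections import defaultdict
from datetime import datetime

# "Campaign Name" comes from the article; the other four names are
# hypothetical stand-ins for the client's remaining classifications.
CLASSIFICATION_COLUMNS = ["Campaign Name", "Channel", "Region", "Creative", "Owner"]


def month_of(code):
    """Read the trailing mmddyy of a code as 'YYYY-MM', or None if it isn't a date."""
    try:
        return datetime.strptime(code[-6:], "%m%d%y").strftime("%Y-%m")
    except ValueError:
        return None


def coverage_by_month(rows):
    """Share of codes per month with every classification column filled in."""
    totals = defaultdict(int)
    complete = defaultdict(int)
    for row in rows:
        # Codes whose suffix is not a valid date are grouped under "unknown".
        month = month_of(row["Key"]) or "unknown"
        totals[month] += 1
        if all((row.get(col) or "").strip() for col in CLASSIFICATION_COLUMNS):
            complete[month] += 1
    return {month: complete[month] / totals[month] for month in sorted(totals)}
```

A month showing 0.5 means half of that month’s codes had all five classifications filled in; a run of 0.0 values is the blackout.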

It’s All about Trust

About the same time I began auditing this SAINT table, another potential client expressed to me that his primary challenge within his organization is the lack of confidence that his business users have in the reports he works to provide in Adobe Analytics, and specifically their classification reports. Sadly, the truth is that business users are usually wise to question the validity of these reports.

I built Tracking First because I was tired of defending the quality of the reports to marketers who were often right to go on the attack. In my ten-year career as a web analyst for dozens of Adobe Analytics clients, I found no weaker defenses than those typically strewn about campaign reporting, which is nuts, because that’s where the lion’s share of the money is being spent.

Tracking First, my company, keeps your entire organization 100% on-track when it comes to code creation and classification standards. And then, once you follow those codes to their final resting place within Adobe’s servers, you’ll see that what we’ve actually done is restore Adobe reports you can trust, now and into the future, even after Judy/Ruprecht is long gone.
