Kids sometimes lie about their age online. Many do it to gain access to platforms or age-restricted content, drawn by the sheer thrill of engaging in what they perceive as “grown-up” activities. Others are driven by peer pressure and fear of missing out (FOMO).

And, as any parent will tell you, it’s incredibly tough to control a child’s online activity. But that’s not the real problem.

When children misrepresent their age online, they expose themselves to inappropriate content and, worse, to predators. The FBI estimates that 500,000 adults are active online each day, posing as minors to target children for sex crimes. Furthermore, one in five children who are active online has been contacted by a predator in the past year.

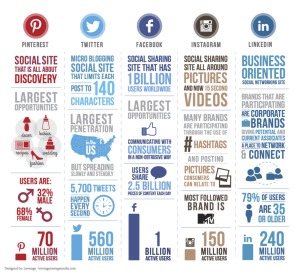

In recent years, lawmakers in many states and countries have enacted legislation restricting the use of social media by minors. These laws usually rely on technology such as biometrics and facial matching to verify age.

But these laws have pitted age verification proponents against privacy advocates, civil liberties activists, and business lobbyists. These opponents challenge new “parental consent” laws in court and often succeed in blocking them on privacy or free speech grounds.

Given the many misunderstandings and the entrenched positions on both sides of the age verification debate, it’s no wonder this technology has not yet fulfilled its potential to improve children’s safety.

So, who do we turn to for help? It’s unlikely to come from digital platforms, which want to avoid putting users through rigorous verification because it discourages signups.

Two sides of the same coin—verifying adults and verifying minors

Age verification entails far more than simply asking, “Are you 18?”

Let’s focus on two key scenarios. The first is simpler: verifying adulthood, where legal challenges are often based on data privacy rights. To address that concern, legislation can mandate “verify and delete,” whereby the social media or digital platform (or its third-party verification service) must delete all personally identifiable information (PII) immediately after confirming the user’s identity, except for basics like username, ID number, and birth date.
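To make the “verify and delete” rule concrete, here is a minimal sketch in Python of the data-minimization step only. Everything in it is hypothetical: the names `RetainedRecord`, `verify_and_delete`, and `age_on` are illustrative, the `identity_confirmed` flag stands in for whatever check a platform or its verification vendor actually runs, and clearing an in-memory dictionary is only a stand-in for real deletion, which would also have to cover databases, logs, and backups.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical: the only fields kept after an adult's identity is confirmed,
# matching the "basics" named in the text (username, ID number, birth date).
RETAINED_FIELDS = ("username", "id_number", "birth_date")


@dataclass(frozen=True)
class RetainedRecord:
    username: str
    id_number: str
    birth_date: date


def age_on(birth_date: date, today: date) -> int:
    """Whole years elapsed between birth_date and today."""
    had_birthday = (today.month, today.day) >= (birth_date.month, birth_date.day)
    return today.year - birth_date.year - (0 if had_birthday else 1)


def verify_and_delete(submission: dict, identity_confirmed: bool,
                      today: date, adult_age: int = 18) -> RetainedRecord:
    """Copy out the retained fields for a confirmed adult, then wipe the rest.

    `identity_confirmed` is an assumed placeholder for the real identity check.
    """
    try:
        if not identity_confirmed:
            raise ValueError("identity could not be confirmed")
        if age_on(submission["birth_date"], today) < adult_age:
            raise ValueError("user is not an adult")
        # The return value is built before the finally block runs.
        return RetainedRecord(**{k: submission[k] for k in RETAINED_FIELDS})
    finally:
        # "Delete" step: wipe the raw submission (ID scans, selfies, address, ...)
        # whether or not verification succeeded.
        submission.clear()
```

Wiping the submission in a `finally` block means the raw PII is discarded even when verification fails, which is the privacy property the mandate is meant to guarantee.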

The second, and highly problematic, scenario is proving legal childhood: ensuring the user is not an older bully or predator masquerading as a child. Ten-year-olds cannot be required to show an ID, so all legislation to date has focused on requiring parental consent, usually along with parental access to all of the child’s messaging and searches. Every new law seems to draw legal challenges very quickly.

Verify the parent, keep the child anonymous

Let’s consider a completely different approach: verifying the age of the parent or guardian only. Apply verify and delete, so the parent’s PII is not stored. Require the parent to vouch, under pain of prosecution, for the child’s birth date. The platform then tethers the child’s account to the adult’s and retains only the child’s birth date along with the parent’s ID number, IP address, and cell number or email.
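The record a platform would keep under this approach can be sketched as a pair of small data types. This is an illustrative Python sketch under stated assumptions, not a specification: `VerifiedParent`, `ChildAccount`, `create_child_account`, and the `parent_attests` flag are hypothetical names, and the parent is assumed to have already passed verify and delete. The point it shows is that the child account holds no child PII beyond a birth date, only a tether to the vouching adult.

```python
from dataclasses import dataclass
from datetime import date, datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class VerifiedParent:
    # What survives verify-and-delete for the parent, per the text.
    id_number: str
    ip_address: str
    contact: str  # cell number or email


@dataclass(frozen=True)
class ChildAccount:
    # The child stays anonymous: a birth date plus the tether to the parent.
    child_birth_date: date
    parent: VerifiedParent
    attested_at: datetime  # when the parent vouched for the birth date (UTC)

    def is_minor(self, today: date, adult_age: int = 18) -> bool:
        """True while the vouched-for birth date puts the child under adult_age."""
        b = self.child_birth_date
        years = today.year - b.year - ((today.month, today.day) < (b.month, b.day))
        return years < adult_age


def create_child_account(parent: Optional[VerifiedParent], child_birth_date: date,
                         parent_attests: bool) -> ChildAccount:
    """Hypothetical sign-up step: no verified, vouching adult means no account."""
    if parent is None:
        raise PermissionError("a verified parent or guardian is required")
    if not parent_attests:
        raise PermissionError("parent must vouch for the child's birth date")
    return ChildAccount(child_birth_date, parent, datetime.now(timezone.utc))
```

For example, `create_child_account(VerifiedParent("D1234567", "203.0.113.7", "parent@example.com"), date(2014, 3, 2), parent_attests=True)` yields an account whose only link to a real identity is the parent’s retained ID number and contact details.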

Making this stick requires treading a delicate path. A 2023 Arkansas law requiring parental consent for children to use social media was recently blocked in court. Civil liberties and privacy advocates also oppose a 2023 Utah law that gives parents extensive control over their children’s social media. Constitutional objections to parental control have tended to prevail over arguments for children’s safety. But what if the goal of legislation were narrowed to a single clear purpose: preventing older people from accessing your child online? A law that focused should be able to thread the needle and avoid or defeat legal challenges.

How would it actually work?

Make no mistake: This “ID the parent, keep the child anonymous” approach can survive legal challenges only if it avoids any linkage to mandates for controlling or surveilling children’s online activities. Guilt by association (with sweeping parental consent and control) has taken down age verification in state battles. Legislators need to understand that, despite being quiet and modest, age verification alone can be effective. Kids and criminals would still try to find workarounds, but succeeding without leaving clues would be difficult.

Make it the (uncontested) law of the land

Verifying adulthood and verifying childhood are both truly important. For platforms and websites, the verify-and-delete approach can be quite practical. With the right technology and well-crafted legislation, robust age verification can do its job without violating protected rights.

In the critical battle to keep older bullies and predators away from children online, privacy-protecting age verification of parents could become the accepted law of the land. I am optimistic that it can and will boost children’s safety and help law enforcement without compromising anyone’s constitutional rights. It requires tightly focused legislation that “does one thing well.” The online platforms will know exactly how to comply, and hopefully civil liberties groups will see the outcome as a win for them, too.
