Nvidia will provide computing power and its NeMo toolkit to Snowflake customers, who can then train AI models on their own data.

A new partnership between Nvidia and Snowflake will let companies build generative AI assistants trained on their own data.

What it does. Nvidia is providing Snowflake’s customers with GPU-accelerated computing and NeMo, its platform for building large language models (LLMs). Customers can use these with their own proprietary business data to develop custom LLMs for advanced generative AI services such as chatbots, search and summarization.

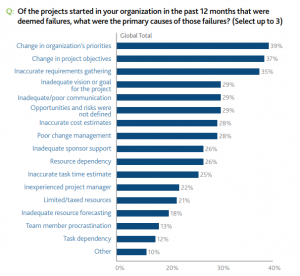

Why we care. Generative AI’s greatest weakness is its tendency to invent facts. This is especially true of systems like ChatGPT, Bing and Bard, which have been trained on extremely large datasets. One way to reduce these “hallucinations” is to train the systems on smaller, more limited datasets. Nvidia is making them even less likely with its NeMo Guardrails program. We are absolutely in favor of anything that makes data more reliable.

“Together, Nvidia and Snowflake will create an AI factory that helps enterprises turn their valuable data into custom generative AI models to power groundbreaking new applications — right from the cloud platform that they use to run their businesses,” Nvidia CEO Jensen Huang said in a statement.

This isn’t the first service to let companies use generative AI in this way. Last month, Microsoft launched Azure AI Studio, which lets customers create custom AI-powered apps called copilots. These can take several forms, including chatbots.

The post Snowflake and Nvidia partner to provide companies with generative AI apps appeared first on MarTech.
