The old days of gaming Google’s ranking algorithm are over, but columnist Eric Enge notes that many SEO professionals haven’t moved on yet.

Once upon a time, our world was simple. There was a thesis — “The Anatomy of a Large-Scale Hypertextual Web Search Engine” by Sergey Brin and Larry Page — that told us how Google worked. And while Google evolved rapidly from the concepts in that document, it still told us what we needed to know to rank highly in search.

As a community, we abused it — and many made large sums of money simply by buying links to their site. How could you expect any other result? Offer people a way to spend $2 and make $10, and guess what? Lots of people are going to sign up for that program.

But our friends at Google knew that providing the best search results would increase their market share and revenue, so they made changes continually to improve search quality and protect against attacks by spammers. A big part of what made this effort successful was obscuring the details of their ranking algorithm.

When reading the PageRank thesis was all you needed to do to learn how to formulate your SEO strategy, the world was simple. But Google has since been issued hundreds of patents, most of which have probably not been implemented and never will be. There may even be trade secret concepts for ranking factors for which patent applications have never been filed.

Yet, as search marketers, we still want to make things very simple. Let’s optimize our site for this one characteristic and we’ll get rich! In today’s world, this is no longer realistic. There is so much money to be had in search that any single factor has been thoroughly tested by many people. If there were one single factor that could be exploited for guaranteed SEO success, you would already have seen someone go public with it.

‘Lots of different signals’ contribute to rankings

Despite the fact that there is no silver bullet for obtaining high rankings, SEO professionals often look for quick fixes and easy solutions when a site’s rankings take a hit. In a recent Webmaster Central Office Hours Hangout, a participant asked Google Webmaster Trends Analyst John Mueller about improving his site content to reverse a drop in traffic that he believed to be the result of the Panda update from May of 2014.

The webmaster told Mueller that he and his team are going through the site category by category to improve the content; he wanted to know if rankings will improve category by category as well, or if there is a blanket score applied to the whole site.

Here is what Mueller said in response (emphases mine):

“For the most part, we’ve moved more and more towards understanding sections of the site better and understanding what the quality of those sections is. So if you’re … going through your site step by step, then I would expect to see … a gradual change in the way that we view your site. But, I also assume that if … you’ve had a low quality site since 2014, that’s a long time to … maintain a low quality site, and that’s something where I suspect there are lots of different signals that are … telling us that this is probably not such a great site.”

(Note: Hat tip to Glenn Gabe for surfacing this.)

I want to draw your attention to the bolded part of the above comment. Doesn’t it make you wonder, what are the “lots of different signals?”

While it’s important not to over-analyze every statement by Googlers, this certainly does sound like the related signals would involve some form of cumulative user engagement metrics. However, if it were as simple as improving user engagement, it likely would not take a long time for someone impacted by a Panda penalty to recover — as soon as users started reacting to the site better, the issue would presumably fix itself quickly.

What about CTR?

Larry Kim is passionate about the possibility that Google directly uses CTR as an SEO ranking factor. By the way, do read that article. It’s a great read, as it gives you tons of tips on how to improve your CTR — which is very clearly a good thing regardless of SEO ranking impact.

That said, I don’t think Google’s algorithm is as simple as measuring CTR on a search result and moving higher CTR items higher in the SERPs. For one thing, it would be far too easy a signal to game, and industries that are well-known for aggressive SEO testing would have pegged it as a ranking factor and made millions of dollars from it by now. For another, a high CTR does not speak to the quality of the page that you’ll land on. It speaks to your approach to title and meta description writing, and to your branding.

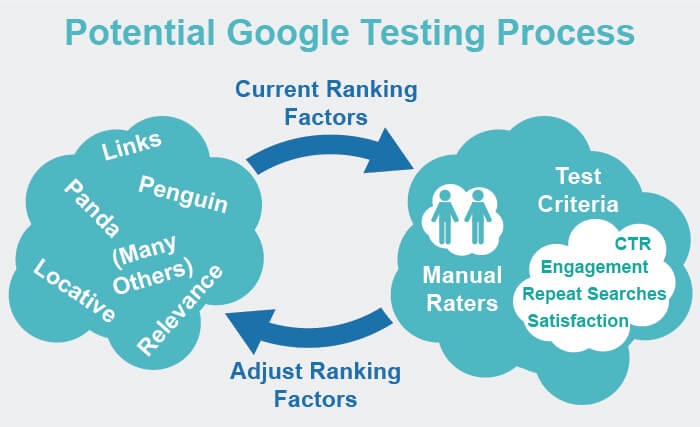

We also have the statements by Paul Haahr, a ranking engineer at Google, on how Google works. He gave the linked presentation at SMX West in March 2016. In it, he discusses how Google uses a variety of user engagement metrics in ranking. The upshot is that, according to Haahr, these metrics are NOT used as a direct ranking factor; instead, they are used in periodic quality control checks of the ranking factors that Google does use.

Here is a summary of what his statements imply:

- CTR, and signals like it, are NOT a direct ranking factor.

- Signals like content quality and links, and algorithms like Panda, Penguin, and probably hundreds of others are what they use instead (the “Core Signal Set”).

- Google runs a number of quality control tests on search quality. These include CTR and other direct measurements of user engagement.

- Based on the results of these tests, Google will adjust the Core Signal Set to improve test results.

The reason for this process is that it lets Google run its quality control tests in a controlled environment that is not easily gamed, which makes the algorithm far harder for black-hat SEOs to manipulate.
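
To make the distinction in the summary above concrete, here is a minimal Python sketch of the general idea: pages are ranked only by "core" signals, while engagement data such as CTR is used offline to choose between candidate weightings. Everything in it is a hypothetical illustration, not Google's actual method. The signal names, the weights, the position-discounted CTR measure, and the sample data are all assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Page:
    url: str
    content_quality: float  # hypothetical core signal, 0..1
    link_score: float       # hypothetical core signal, 0..1


def rank(pages, weights):
    """Order pages using core signals only; CTR is never an input here."""
    def score(page):
        return (weights["content_quality"] * page.content_quality
                + weights["link_score"] * page.link_score)
    return sorted(pages, key=score, reverse=True)


def evaluate(ranking, observed_ctr):
    """Offline quality check: CTR discounted by position (assumed measure)."""
    return sum(observed_ctr[page.url] / (position + 1)
               for position, page in enumerate(ranking))


def choose_weights(pages, candidates, observed_ctr):
    """Keep the candidate weighting whose ranking does best in the check."""
    return max(candidates,
               key=lambda w: evaluate(rank(pages, w), observed_ctr))


# Made-up sample data for illustration only.
pages = [Page("a", content_quality=0.9, link_score=0.2),
         Page("b", content_quality=0.3, link_score=0.9)]
observed_ctr = {"a": 0.4, "b": 0.1}
candidates = [{"content_quality": 0.3, "link_score": 0.7},
              {"content_quality": 0.7, "link_score": 0.3}]

best = choose_weights(pages, candidates, observed_ctr)
final_order = [page.url for page in rank(pages, best)]
```

In this toy setup, inflating the CTR of a single page does not move that page directly, because `rank` never reads CTR. It can only nudge which weighting is chosen for every page at once, which is one way to picture why such a process would be harder to game.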

So is Larry Kim right? Or Paul Haahr? I don’t know.

Back to John Mueller’s comments for a moment

Looking back on the John Mueller statement I shared above, it strongly implies that there is some cumulative impact over time of generating “lots of different signals that are telling us that this is probably not such a great site.”

In other words, I’m guessing that if your site generates a lot of negative signals for a long time, it’s harder to recover, as you need to generate new positive signals for a sustained period of time to make up for the history that you’ve accumulated. Mueller also makes it seem like a gradated scale of some sort, where turning a site around will be “a long-term project where you’ll probably see gradual changes over time.”

However, let’s consider for a moment that the signal we are talking about might be links. Shortly after the aforementioned Office Hours Hangout, on May 11, John Mueller also tweeted out that you can get an unnatural link from a good site and a natural link from a spammy site. Of course, when you think about it, this makes complete sense.

How does this relate to the Office Hours Hangout discussion? I don’t know that it does (well, directly, that is). However, it’s entirely possible that the signals John Mueller speaks about in Office Hours are links on the web. In which case, going through and disavowing your unnatural links would likely dramatically speed up the process of recovery. But is that the case? Then why wouldn’t he have just said that? I don’t know.

But we have this seemingly genuine comment from Mueller on what to expect in terms of recovery, with no easily determined explanation of what signals could be driving it.

We all try to oversimplify how the Google algorithm works

As an industry, we grew up in a world where we could go read one paper, the original PageRank thesis by Sergey Brin and Larry Page, and kind of get the Google algorithm. While the initial launch of Google had already deviated significantly from this paper, we knew that links were a big thing.

This made it easy for us to be successful in Google, so much so that you could take a really crappy site and get it to rank high with little effort. Just get tons of links (in the early days, you could simply buy them), and you were all set. But in today’s world, while links still matter a great deal, there are many other factors in play. Google has a vested interest in keeping the algorithms they use vague and unclear, as this is a primary way to fight against spam.

As an industry, we need to change how we think about Google. Yet we seem to remain desperate to make the algorithms simple. “Oh, it’s this one factor that really drives things,” we want to say, but that world is gone forever. This is not a PageRank situation, where we’ll be given a single patent or paper that lays it all out, know that it’s the fundamental basis of Google’s algorithm, and then know quite simply what to do.

The second-largest market cap company on planet Earth has spent nearly two decades improving its ranking algorithm to ensure high-quality search results — and maintaining the algorithm’s integrity requires, in part, that it be too complex for spammers to easily game. That means that there aren’t going to be one or two dominating ranking factors anymore.

This is why I keep encouraging marketers to understand Google’s objectives — and to learn to thrive in an environment where the search giant keeps getting closer and closer to meeting those objectives.

We’re also approaching a highly volatile market situation, with the rise of voice search, new devices like the Amazon Echo and Google Home coming to market, and the impending rise of personal assistants. This is a disruptive market event. Google’s position as the number one player in search as we know it may be secure, but search as we know it may no longer be that important an activity. People are going to shift to using voice commands and a centralized personal assistant, and traditional search will be a minor feature in that world.

What this means is that Google needs its results to be as high-quality as it can possibly make them, yet it needs to keep fighting off spammers at the same time. The result? A dynamic and changing algorithm that continues to improve overall search quality as much as possible, so that Google can maintain its stranglehold on that market share and establish a lead, if at all possible, in the world of voice search and personal assistants.

What does it mean for us?

The simple days of gaming the algorithm are gone. Instead, we have to work on a few core agenda items:

- Make our content and site experience as outstanding as we possibly can.

- Get ready for the world of voice search and personal assistants.

- Plug into new technologies and channel opportunities as they become available.

- Promote our products and services in a highly effective manner.

In short, make sure that your products and services are in high demand. The best defense in a rapidly changing marketplace is to make sure that consumers want to buy from you. That way, if some future platform does not provide access to you, your prospective customers will let them know.

Notice, though, how this recipe has nothing to do with the algorithms of Google (or any other platform provider). Our world is just not that simple anymore.

[Article on Search Engine Land.]

