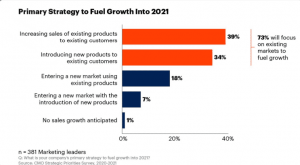

Columnist Eric Enge recaps the SMX West 2016 keynote by Googler Behshad Behzadi, who envisions a not-too-distant future in which voice search becomes more ubiquitous — and may even replace the search box.

On March 1, Behshad Behzadi, Google’s director of conversational search, gave a keynote address at SMX West in San Jose. This keynote was loaded with insight into Google’s perspective on where search is today, and where it’s going.

In today’s column, I’ll review some of my takeaways from the keynote, then offer my thoughts on what the future holds. In short, I’m going to outline why this spells impending doom for the concept of a “search box.”

We actually got some initial insight into this right at the beginning of the keynote. Google’s goal is to emulate the “Star Trek” computer, which allowed users to have conversations with the computer while accessing all of the world’s information at the same time. Here is an example clip showing a typical interaction between Captain Kirk and that computer:

Behzadi also showed a clip from the movie “Her” and noted that “Star Trek” imagined a future more than 200 years away (the show originally aired in the 1960s), while “Her” envisioned a future just over 20 years away. Behzadi, on the other hand, believes this will unfold in less than 20 years.

Google timeline

A quick history review will show us just how rapidly Google has changed over the years:

In addition, Google’s Knowledge Graph has grown rapidly:

Another key driver of change is that we will have more and more devices to talk to at home:

As a result, users will grow increasingly comfortable speaking to computers, and this will drive an increase in natural language usage in search queries.

Another factor driving this increased natural language usage is the improvement in speech recognition quality. According to Behzadi, the speech recognition error rate is now down to eight percent, compared with 25 percent two years ago. Notably, he ran voice demos continually for more than 30 minutes of his keynote, and not a single recognition error occurred.

Some other key points about the growth of voice search:

- Voice search is currently growing faster than typed search.

- There are many situations where voice is the best way to interact (driving, cooking).

- It’s becoming more and more acceptable to talk to a phone, even in groups.

During the live video keynote event I did with Gary Illyes, he told me that the number of voice queries in 2015 was double that in 2014. Illyes also told me that voice queries were 30 times more likely to be action-oriented than typed queries.

The other major implication of the move to voice search is that it frees search from the standard practice of going to a web page and typing in a query. Access to voice search needs to be ubiquitous rather than requiring you to go to a special place to use it.

The future of search is to build the ultimate assistant

This is what Behzadi told us, and this idea that search should be the ultimate assistant is a fascinating conceptualization of where things are going. It has many, many implications.

Here is how Behzadi characterized some of the ways that Google thinks about this in a mobile-first world:

Mobile Attributes:

- Knowledge About the World

- Knowledge About You and Your World

- Knowledge About Your Current Context

Your Assistant Needs to Be There:

- Whenever You Need

- Wherever You Are

- To Help You Get Things Done

In case you’re wondering how well people will adapt to this notion of living via their personal assistant, my college-age children are already a good part of the way there, as is my 81-year-old mother-in-law. As more capability comes along, they will go right along with it.

Illustrating with examples

Behzadi is confident that Google is making great strides toward these goals, and he provided a whole series of interesting demos of the progress Google has made.

Parsing complicated natural language

He provided many examples of this, but the one that stood out for me was this query:

“Can you tell me how is the, what was the score of the last game with Arsenal?” You can see the result here:

As you can see from my own repeat of this query on my phone, the sentence changes direction in the middle. Google was able to work out that the real question started in the second half of the malformed sentence.

App integration

Another interesting demo showed the degree of app integration. At one point, Behzadi opened Viber, which is an instant messaging and VoIP app, and showed a dialogue he was having with a coworker about dinner.

One restaurant they referred to in the dialogue was CasCal, which is a tapas bar in Mountain View. So then he said, “OK Google” and asked, “how far is it?” Google provided the answer.

Next he said, “Call CasCal.”

For demo purposes, he then hung up, as he really didn’t want to chat with CasCal in the middle of his keynote, but he followed that with the query, “book a table for 8 p.m. Friday for five people,” which launched the OpenTable app.

Lastly, he asked the Google app to “navigate to CasCal restaurant,” which opened up Google Maps.

This type of integration involves some very complex interactions to address a fairly basic human need. Google is currently integrated with only about 100 apps, but that number is growing.

Google is clearly focusing on the most popular apps, too. For example, Behzadi did another demo showing integrations with Facebook and WhatsApp that was pretty cool.

Understanding context

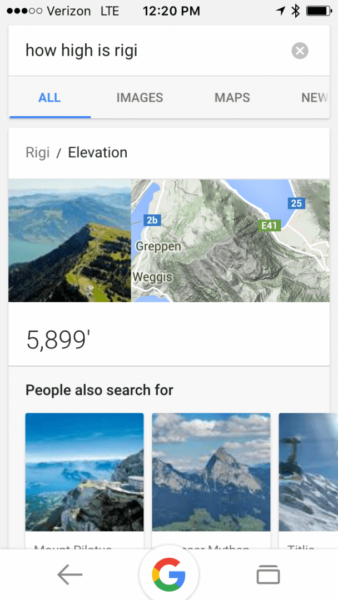

There were also a few interesting demos of context understanding. In one, he started with the query “how high is rigi.” However, because he was standing in San Jose at the time, this was heard as “how high is ricky,” and something like this screenshot came back:

He tried it again, and then got a result for “how high is reggie,” which was still not what he wanted. So to help the system along, he then tried the query “mountains in switzerland,” which produced a carousel result:

After that, he tried the “how high is rigi” query again and hit pay dirt:

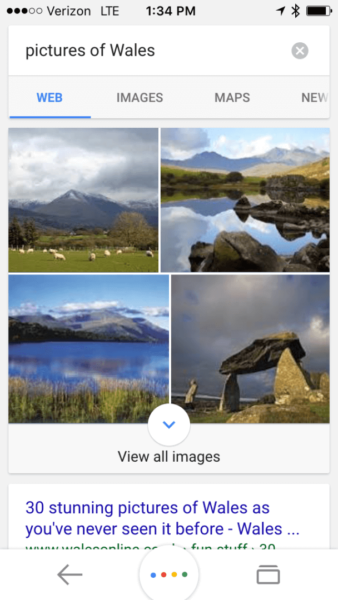

I promised myself not to put too many sequences in here, but I couldn’t resist including this one. It starts with the query “pictures of Wales.” I spoke this query into the Google app, but I got results related to the animal (whales) instead of the country (Wales), which was what I was looking for.

So, I clicked on the microphone button in the Google app and spelled it out: “w-a-l-e-s.” And Google got it right:

Remembering context throughout a conversation

I have one last sequence I’d like to show before I dig into my thoughts on the meaning of all this. This is a sequence related to a famous building, and it is a modified version of one that I’ve demoed many times. The sequence of queries is as follows:

- “where is coit tower”

- “i want to see pictures”

- “how tall is it”

- “who built it”

- “when”

- “what are the opening hours”

- “show me restaurants around there”

- “how about italian”

- “actually, i prefer french”

- “call the second one”

Almost unbelievably, by the end of this sequence, Google had maintained the complete context of the conversation:

What does all of this mean?

Google has clearly made great strides toward being a more complete personal assistant and in understanding natural language. It still has a very long way to go, though. We don’t have the “Star Trek” computer yet, and it’s definitely more than a decade away. Behzadi believes it’s less than 20 years away, and he may be right.

As I suggested in the title of this piece, over time this will spell the end of our dependence on the search box. Ultimately, the notion of searching is really about gaining access to information. In the long run (say 10-plus years from now), we’ll view that as a utility that must be integrated into everything we do.

Instead of going to a search box, all I’ll need to do is go to a device that has access to my personal assistant. That could be my smart watch, my TV, my phone, my tablet, my car or any other device that helps me manage the world around me.

Wherever I am and whatever I’m doing, I’ll want access to the information I need, even if it doesn’t fit my current context. Ideally, my personal assistant should consider my current context but be ready to switch to a different one when I guide it to (consider the “rigi” and “wales” examples I shared above).

We’ll also get used to hearing people speak to their devices, and some of the stigma we feel about that today will fade. You can already see that happening, as more and more people are developing the expectation of voice interaction with their devices.

I don’t see the keyboard going away entirely, though. For example, I’m not likely to ask my personal assistant to buy hemorrhoid medication using a vocal command while sitting in my office with others around.

I think keyboard entry will remain the better option in some situations for some time to come. But I also expect keyboard usage to decline at some point, probably within the next five years.

Of course, one of the big issues that people will raise about this is the lack of privacy. I agree that this is a critical issue that deserves a lot of attention.

On the flip side, people will gain a lot of leverage in managing their lives by using smart technology like tomorrow’s personal assistant. I hope that as all of this unfolds, the privacy issues, and the trustworthiness of those who hold all this information about us, are dealt with sensitively.

Google is not the only company investing in this technology. Apple (Siri) and Microsoft (Cortana) are making big investments in personal assistant technology as well. One sure thing is that this is coming toward us fast!

Videos

See the full keynote speech below, as well as the Q&A.

[Article on Search Engine Land.]

Some opinions expressed in this article may be those of a guest author and not necessarily Marketing Land. Staff authors are listed here.

(Some images used under license from Shutterstock.com.)

Marketing Land – Internet Marketing News, Strategies & Tips
