Split testing, or A/B testing, is no secret in the world of ecommerce. 75% of the top 500 internet retailers do it. Even startups and growth hackers do split testing.

However, despite being an open secret, split testing is still not something many businesses do or trust as a measurement tool. As many as 63% of marketers optimize their sites based purely on intuition and best practices.

Clearly, that is not enough.

If you don’t split test, you’re leaving behind conversions and growth that you won’t uncover by any other means. And if you rely on intuition and best practices alone, as in the case above, you won’t be able to question widely accepted assumptions.

In this article, we will walk you through the process of split testing and how your business can take advantage of it.

But before we get into the details, let’s answer a basic question: what is A/B testing?

What is A/B testing?

A/B testing is a method of comparing two variations of anything you want to test on your site and determining which of the two performs better in improving your conversion rate. The two variations are compared to one another using live traffic.

The data you collect from the test will tell you whether the changes you want to make on your site are worth keeping.

In an A/B test, you take a page on your website and create a second version of it. The original version, variant A, is shown to half of your traffic, and the modified version, variant B, is shown to the other half.
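How the 50/50 split happens depends on your testing tool. A common approach, assumed here rather than taken from any particular product, is to hash each visitor’s ID so that returning visitors always land in the same variant. The experiment name `homepage-headline` is hypothetical.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "homepage-headline") -> str:
    """Return "A" or "B" for a visitor, splitting traffic roughly 50/50.

    Hashing the experiment name together with the visitor ID means a returning
    visitor always sees the same variant, without storing any state.
    """
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # a number from 0 to 99
    return "A" if bucket < 50 else "B"


# Check the split over 10,000 simulated visitors.
share_a = sum(assign_variant(f"visitor-{i}") == "A" for i in range(10_000)) / 10_000
print(f"share of visitors in A: {share_a:.1%}")
```

Because the assignment is a pure function of the IDs, it can run on any server without a shared lookup table.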

Engagement and key performance metrics are collected and measured for each variation, and from there you can tell whether the change had a positive, negative, or no effect on your visitors’ behavior.
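As a minimal sketch of that comparison, the snippet below computes each variant’s conversion rate and the relative lift of B over A. The visitor and conversion counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class VariantResult:
    name: str
    visitors: int     # visitors who were shown this variant
    conversions: int  # visitors who completed the goal (e.g. a purchase)

    @property
    def conversion_rate(self) -> float:
        return self.conversions / self.visitors if self.visitors else 0.0


def relative_lift(control: VariantResult, variant: VariantResult) -> float:
    """Relative change in conversion rate of `variant` versus `control`.

    Positive means the change helped, negative means it hurt, 0 means no effect.
    """
    if control.conversion_rate == 0:
        raise ValueError("control conversion rate is zero; lift is undefined")
    return (variant.conversion_rate - control.conversion_rate) / control.conversion_rate


a = VariantResult("A", visitors=5000, conversions=150)  # hypothetical numbers
b = VariantResult("B", visitors=5000, conversions=180)
print(f"A: {a.conversion_rate:.2%}  B: {b.conversion_rate:.2%}  lift: {relative_lift(a, b):+.1%}")
```

A lift on its own does not tell you whether the difference is real or just noise; that is what the statistical significance check at the end of the test is for.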

Benefits of running an A/B test

Implementing a split test is relatively simple, and you can subject almost anything on your site to it, from the headline and search bar to the navigation and the funnel components in your checkout. Split testing also provides you with the following benefits:

Helps you make informed decisions. A/B testing allows you to make decisions driven by data, not by intuition or hunches. Instead of implementing random changes on your site and hoping for the best, split testing reduces your risk of failure. When done regularly, split testing can significantly improve your bottom line.

Makes the most out of your hard-earned traffic. Through regular split testing, you can optimize the experience your shoppers will have every time they visit your site. As your conversion rate increases, the value you get from your shoppers increases as well, saving you from spending more on traffic acquisition.
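The arithmetic behind this is simple: revenue is visitors × conversion rate × average order value, so raising the conversion rate earns more from the same traffic. The store below, with 20,000 monthly visitors and a $50 average order value, is entirely hypothetical.

```python
def monthly_revenue(visitors: int, conversion_rate: float, average_order_value: float) -> float:
    """Revenue = visitors x conversion rate x average order value."""
    return visitors * conversion_rate * average_order_value


# Hypothetical store: same traffic and order value, before and after a winning test.
before = monthly_revenue(20_000, 0.020, 50.0)  # 2.0% conversion rate
after = monthly_revenue(20_000, 0.025, 50.0)   # 2.5% conversion rate

# Visitors you would need at the old 2.0% rate to earn the new revenue.
visitors_needed = after / (0.020 * 50.0)

print(f"before: ${before:,.0f}  after: ${after:,.0f}")
print(f"visitors needed at the old rate: {visitors_needed:,.0f}")
```

In this example the half-point improvement is worth as much as 5,000 extra visitors a month that you would otherwise have to acquire.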

Prove or disprove assumptions. Split testing allows you to test widely accepted assumptions and find out whether they actually hold for your site.

Over time, split testing creates a healthy, data-driven environment. Instead of just following the opinions of those in the upper ranks, you have data and the power of statistics to determine the direction of your business.

Aside from conversion rates, A/B tests can be used to improve other metrics, such as bounce rates, click-through rates, and call-to-action clicks.

Selecting the best A/B testing tool

For successful split tests, you need a reliable and efficient A/B testing tool at your disposal.

Managed vs. self-service testing tools. The right choice depends on how confident you are in running split tests.

With a managed A/B testing tool, the vendor’s solutions team will create, run, and analyze the results of the test for you.

With a self-service A/B testing tool, on the other hand, you have the freedom to create, run, and analyze your tests yourself.

If you’re just starting out, go for a managed testing tool. As you become familiar with A/B testing and how the tools work, you can switch to a self-service option.

A dedicated resource. You need all the help you can get when running your split tests. It is, after all, a collaborative process: developing and implementing tests is time-consuming and rarely something one person can do alone.

Choose a testing tool that comes with a dedicated resource who can assist you, so you get the maximum ROI from your tests.

Support for mobile testing. There are many opportunities for improving the customer experience on mobile sites and mobile apps. With 50.3% of e-commerce traffic coming from mobile devices, mobile is a new and exciting area for split testing.

Mobile optimization may not be one of your priorities yet, but it is worth choosing an A/B testing tool with mobile testing capability.

Capacity for multi-variant testing. As you gain confidence conducting A/B tests, you will eventually want to test multiple elements on a page at once, and for that you will need a testing tool with multi-variant testing capability.

As you can see, there are many factors to consider when selecting the best solution for your company’s needs.

Below is a list of A/B testing tools you can choose from, grouped by the customer segment each most commonly serves:

- Small businesses: Tasty, Google Analytics, Visual Website Optimizer

- Mid-size businesses: Optimizely

- Enterprises: Adobe Target, Maxymiser, Monetate, Qubit, Sitespect

You can also refer to the above charts from TrustRadius to check the features each of these tools offers.

How to know what to improve on

To uncover the areas that have the biggest opportunities for improvements, you need to take a good look at your data and know where you are currently standing.

Run several analytics tools and study quantitative data. At a minimum, you need a basic analytics tool such as Google Analytics, supplemented by a conversion analytics tool such as KISSmetrics and a user-interaction or heat map tool such as Crazy Egg.

Install them and let them run for a few days or weeks to collect data. Once the results are in, pay attention to important metrics, such as the conversion rate at each stage of the funnel, average time on page, and exit rate. Identify the metrics tied to your most valuable conversions and start from there.
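To see where the funnel leaks, you can compute the share of visitors who continue from one stage to the next. The stage names and session counts below are hypothetical, and "drop-off" here means the share lost between two consecutive stages (a simplification, not the exit-rate metric your analytics tool reports). Treating the biggest drop-off as the first place to test is a heuristic, not a rule.

```python
# Hypothetical session counts for each funnel stage, in order.
funnel = [
    ("product page", 10_000),
    ("cart", 2_400),
    ("checkout", 1_100),
    ("order complete", 600),
]


def step_rates(stages):
    """Yield (from_stage, to_stage, continue_rate, drop_off) for each step."""
    for (name, count), (next_name, next_count) in zip(stages, stages[1:]):
        rate = next_count / count
        yield name, next_name, rate, 1 - rate


for src, dst, rate, drop in step_rates(funnel):
    print(f"{src} -> {dst}: {rate:.1%} continue, {drop:.1%} drop off")

# The step that loses the largest share of visitors is a candidate for testing.
worst = max(step_rates(funnel), key=lambda step: step[3])
print("biggest drop-off:", worst[0], "->", worst[1])
```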

Study your heat maps well. Are your visitors able to find the information they need?

Are they paying attention to the most important elements on your site?

Are some of your page elements distracting your visitors more than helping them?

These are just a few of the questions a heat map can help you answer.

Get qualitative insights. Qualitative insights help you understand your customers and why they do what they do on your site. They are a great way to spot trends and dig into issues that could be causing trouble.

Surveys, feedback forms, and usability tests are great sources of qualitative insights that complement the data from your analytics tools.

Example 1: Free shipping incentive increases orders by 90%.

Based on analytics data, NuFACE, an anti-aging skin care company, found out that although their visitors were interested in their products, shopping cart abandonment was still high.

They wanted to test whether a free shipping incentive would fix the problem.

The results showed that the free shipping incentive not only increased their orders by 90% but also lifted their average order value by 7.32%.

Example 2: Resolving anxiety with an authenticity badge increases conversions by up to 107%.

Express Watches, a UK retailer of Seiko watches, discovered from surveys that their customers cared more about the authenticity of the watches they were buying than about the prices.

To address this, Express Watches replaced its original ‘Never Beaten on Price’ badge with an authenticity badge that read ‘Seiko Authorized Dealer’.

This led to a 107% increase in their conversions.

Create hypotheses to fix the issue

This is one of the most critical steps of A/B testing, but also the shortest, since the majority of the legwork was done during data collection and analysis.

This is the time to look at your store through your customers’ eyes: what do they expect to find? Spend some time interacting with the page and brainstorm every idea for what could be done better.

For instance, if your call-to-action is not receiving as much attention as you had hoped, you could try fixing it with the following solutions:

- change the copy

- increase the copy’s font size

- make the button color red

- increase the size of the button

- remove surrounding elements that may be distracting

From all these brainstormed solutions, select one and state it clearly as a hypothesis.

The hypothesis is the purpose behind your A/B test and is often stated in this formula:

Remember: your hypothesis is an educated prediction, not a guaranteed outcome. The test may confirm it or disprove it, so be prepared for either result.

Once you have finalized your hypothesis, you are now ready for the next step – testing.

Split Testing vs. Multi-Variant Testing

We’ve been talking largely about split testing so far, but there is another type of testing, called multi-variant testing.

To recap, split testing is a method of comparing two variations of anything you want to test on your site and determining which of the two performs better in improving your conversion rate. The two variations are compared to one another using live traffic.

In the illustration below, the original black call-to-action button is compared to a variation of the call-to-action button in red.

Multi-variant testing follows the same core principles as split testing, but it tests more than one variable at a time. And instead of pitting one variant against another, multi-variant testing measures how much each variation contributes to the ultimate goal.

In the illustration below, you will see that there are four versions of headlines and images to be tested.

Pros and cons of split testing and multi-variant testing

Split testing is a popular and widely used testing method due to its simple concept and design.

Because only a small number of variables is tracked, split tests are easy to run, and the results are quick to arrive at and interpret.

However, the limitation lies in its name: it can only measure the impact of a single variant against the original version. If you want to test more than one element at a time, a multi-variant test is your best approach.

But before you dive into it, make sure you have enough traffic to complete a multi-variant test.

On the downside, multi-variant tests can become impractical. Because they are factorial, the number of combinations multiplies with every element and version you add, so each combination receives less traffic and the test can take much longer to finish.
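How much traffic is "enough" can be estimated before you start. The sketch below uses the standard normal-approximation sample-size formula for comparing two conversion rates; it is not necessarily what your testing tool uses, and the 5% baseline, 6% target, 5% significance level, and 80% power are assumptions for illustration. In a multi-variant test, roughly this many visitors are needed for each combination being compared.

```python
import math
from statistics import NormalDist


def visitors_per_variation(baseline_rate: float, expected_rate: float,
                           alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in EACH variation to detect a change from
    baseline_rate to expected_rate with a two-sided two-proportion test."""
    if baseline_rate == expected_rate:
        raise ValueError("rates must differ to compute a sample size")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (baseline_rate + expected_rate) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(baseline_rate * (1 - baseline_rate)
                                      + expected_rate * (1 - expected_rate))) ** 2
    return math.ceil(numerator / (expected_rate - baseline_rate) ** 2)


# Hypothetical: 5% baseline conversion rate, hoping to detect a lift to 6%.
n = visitors_per_variation(0.05, 0.06)
print(f"about {n:,} visitors per variation")
```

Smaller expected changes need disproportionately more traffic, because the required sample grows with the inverse square of the difference you want to detect.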
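The factorial growth is easy to see by enumerating every combination. The element names and versions below are hypothetical (two headlines and two images, giving four versions), and so is the per-version visitor count.

```python
from itertools import product


def all_versions(elements: dict) -> list:
    """Every combination of element versions in a full-factorial test."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*elements.values())]


# Hypothetical elements under test and their versions.
elements = {
    "headline": ["Original headline", "New headline"],
    "image": ["Lifestyle photo", "Product photo"],
}
versions = all_versions(elements)
for version in versions:
    print(version)
print(len(versions), "versions")  # 2 x 2 = 4

# Adding one more element with three versions multiplies the count again.
bigger = {**elements, "cta_color": ["black", "red", "green"]}
print(len(all_versions(bigger)), "versions")  # 2 x 2 x 3 = 12

visitors_per_version = 8_000  # hypothetical; depends on your rates and goals
total_visitors = visitors_per_version * len(all_versions(bigger))
print(f"visitors needed: {total_visitors:,}")
```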

Common uses

Split tests have many uses given their versatility. Use them on your homepage promo, navigational elements, and checkout funnel components to increase the number of successful checkouts or your average order value.

Multi-variant testing is best used when designing landing pages. What you learn about the impact of individual elements can be applied to future campaigns, even when the context is different. Additionally, if you want to learn about interaction effects, that is, how elements influence each other’s performance, a multi-variant test is highly recommended.
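In a 2 × 2 test, an interaction effect can be read as a "difference of differences": does the new headline help more (or less) when it is paired with the new image? The conversion rates below are hypothetical, and a real analysis would also check whether the interaction is statistically significant.

```python
# Hypothetical conversion rates from a 2 x 2 multi-variant test.
rates = {
    ("old headline", "old image"): 0.040,
    ("new headline", "old image"): 0.046,
    ("old headline", "new image"): 0.044,
    ("new headline", "new image"): 0.058,
}

# Effect of the new headline when shown with the old image...
headline_effect_old_image = (rates[("new headline", "old image")]
                             - rates[("old headline", "old image")])
# ...and when shown with the new image.
headline_effect_new_image = (rates[("new headline", "new image")]
                             - rates[("old headline", "new image")])

# Interaction: how much the headline's effect depends on the image it is paired with.
# Zero would mean the two elements act independently.
interaction = headline_effect_new_image - headline_effect_old_image

print(f"headline effect with old image: {headline_effect_old_image * 100:+.1f} pts")
print(f"headline effect with new image: {headline_effect_new_image * 100:+.1f} pts")
print(f"interaction: {interaction * 100:+.1f} percentage points")
```

A split test of the headline alone would have measured only one of these two effects.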

Analyzing A/B test results

The final stage of a split test is analyzing the results.

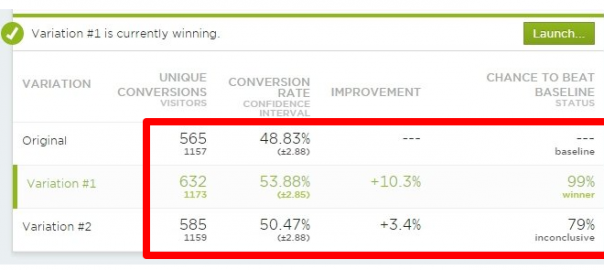

Thankfully, A/B testing tools today have built-in statistical tracking and analysis, freeing us from doing the lengthy computations ourselves. They show how each variation performed during the test, complete with confidence intervals. Below is a summary screen of a computation.
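To see roughly what a confidence interval on a conversion rate involves, here is a sketch using the Wilson score interval, one common method; your testing tool may compute its intervals differently. The counts are hypothetical.

```python
import math
from statistics import NormalDist


def wilson_interval(conversions: int, visitors: int, confidence: float = 0.95):
    """Wilson score interval for a conversion rate; returns (low, high)."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95%
    p = conversions / visitors
    denom = 1 + z**2 / visitors
    center = (p + z**2 / (2 * visitors)) / denom
    half_width = z / denom * math.sqrt(p * (1 - p) / visitors + z**2 / (4 * visitors**2))
    return center - half_width, center + half_width


for name, conv, vis in [("A", 150, 5000), ("B", 180, 5000)]:  # hypothetical counts
    low, high = wilson_interval(conv, vis)
    print(f"{name}: {conv / vis:.2%} (95% CI {low:.2%} to {high:.2%})")
```

Overlapping intervals are a hint that the test may need more data, though comparing the two variants directly is the more precise check.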

However, it is worth going beyond these values with post-test analysis, that is, analysis done outside the A/B testing tool you are using. A popular tool for post-test analysis is Google Analytics.

Popular A/B testing tools like Optimizely can easily be integrated with Google Analytics, and once integrated, the results can look something like this:

Reviewing your data in Google Analytics gives you more confidence in the results of your test.

But if you want to know whether your results are statistically significant, that is, whether the difference between the variations is unlikely to be due to random chance alone, you need to export the data to a spreadsheet such as Excel and calculate it there.
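The same calculation can be done in a few lines of code. The sketch below swaps in a two-proportion z-test in Python, a standard significance test for conversion rates; it is not necessarily the method any particular calculator uses, and the counts are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test for the difference between two conversion rates.

    Returns (z, p_value). A small p-value (commonly below 0.05) means a
    difference this large would be unlikely if both variants truly
    converted at the same rate.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # rate assuming no real difference
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("cannot test: pooled conversion rate is 0 or 1")
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return z, p_value


z, p = two_proportion_z_test(150, 5000, 180, 5000)  # hypothetical counts
print(f"z = {z:.2f}, p = {p:.3f}, significant at 5%: {p < 0.05}")
```

With these numbers, B’s 20% relative lift is not yet significant at the 5% level, which is exactly the kind of result that tempts people to stop a test too early.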

Thankfully, Visual Website Optimizer has built a downloadable A/B testing significance calculator.

Conclusion

We’ve just covered the essentials of A/B testing for your site.

Keep in mind these five main points:

Analyze your data. Quantitative and qualitative data will show you what you need to improve on your site. Look for pages with low conversion rates or high drop-off rates. You can also look at the areas of your site that receive the most traffic. These are a good starting point, since high traffic lets you collect data faster.

Set your goals. There is no limit to the goals you can pursue through A/B testing. Examples include decreasing shopping cart abandonment, increasing email signups, or something as simple as getting visitors to click a link.

Create your hypothesis. With your goals set up, you can start generating ideas for your variations and hypotheses. If you have a lot of hypotheses to test, rank them according to expected impact.
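Keeping hypotheses in a simple structured backlog makes ranking them straightforward. The fields and the 1 to 10 impact scale below are hypothetical; the example hypotheses echo ideas from earlier in this article.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    change: str            # what you will change
    expected_outcome: str  # what you predict will happen
    expected_impact: int   # your own 1-10 estimate (hypothetical scale)


backlog = [
    Hypothesis("Make the CTA button red", "More clicks on the CTA", 4),
    Hypothesis("Offer free shipping", "Lower cart abandonment", 9),
    Hypothesis("Add an authenticity badge", "Higher checkout conversion", 7),
]

# Highest expected impact first: these are the tests to run next.
ranked = sorted(backlog, key=lambda h: h.expected_impact, reverse=True)
for h in ranked:
    print(f"{h.expected_impact:>2}  {h.change}: {h.expected_outcome}")
```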

Create your variations. Use an A/B testing tool to create your variations, then QA them to make sure they display and work as intended.

Run the experiment and analyze results. Measure and compare how your visitors interact with variants A and B. Study the results generated by your chosen testing tool and cross-check them with Google Analytics. Don’t forget to check for statistical significance!

Now that you are armed with the knowledge and tips, are you ready to conduct your first A/B test?

Digital & Social Articles on Business 2 Community
