Understanding Cognitive Bias To Interpret Data, Understand Trends

by Rives Martin, Op-Ed Contributor, February 22, 2017

People are all prone to see patterns and causality, a trait that has presented evolutionary advantages that have benefited our ancestors for generations. The idea of “seeing causality” ranges from understanding what times of day are safe for an animal to go to the watering hole to a person “seeing” systematic patterns in multiple trials of a coin flip. The same occurs in advertising.

Suppose you are a gazelle. You and your herd go to the watering hole several times daily to quench your thirst. When you go doesn’t matter, so long as you get sufficient water. Recently, however, you have seen several lions stalking the watering hole around dusk. There are two possible scenarios.

Either a pattern exists and lions do actually have a higher tendency to hunt at dusk or no pattern exists and on a few random occasions you arrived at the watering hole at dusk and there happened to be lions. Pattern or no pattern, it is in your best interest as a gazelle to avoid the watering hole at dusk.

In this scenario, seeing patterns that are not there does not harm us, while missing patterns that exist could lead us into unnecessary danger. It is easy to see how we have evolved to “over-see” patterns and see trends in random variation. However, when our job is to accurately interpret data and understand trends, this once helpful tendency can lead to sub-optimal business decisions.

I have outlined some major cognitive biases that people often fall victim to when analyzing data and everyday situations, as well as active measures that marketers can take to guard against such pitfalls.

The illusion of understanding and validity, Scenario 1: Sales increased 16% over last week because I replaced 20 broken URLs.

Marketers have a tendency to overestimate how much they actually know and how much they can control. This leads to overconfidence, empty promises, and unfounded recommendations. Analysts often feel pressure to provide clients with all the answers. Often they are asked to answer questions that are much larger than the scope that they can see.

Digital advertising performance has many moving pieces, and it can be difficult to accept that you understand and have control over a very limited part of the bigger picture. Knowing this to be true, clients still have a “hunger for a clear message about the determinants of success and failure of their campaigns. They need stories that offer a sense of understanding, however illusory” (Daniel Kahneman).

In his book, Thinking, Fast and Slow, Kahneman outlines an image of the mind as a machine with two systems, working together to make sense of the world and to make decisions. He describes System 1 as fast, intuitive, automatic, and emotional. System 2 is slow, deliberate, effortful, and logical. While we may think that all of our decisions are a result of conscious thought, 90% of decisions stem from the subconscious and automatic processes of System 1.

System 1 automatically creates a coherent story from the information at hand — regardless of its source, quality, or completeness. System 2 must decide whether or not to believe that story. When we are in a hurry or tired, or a client is demanding answers, this initial narrative is tempting. Reluctance to engage your System 2 inevitably leaves you vulnerable to mistakes.

Looking at the above example, it is easy to see why the analyst associated his actions with the increase in sales — cause and effect. However, other explanations are possible:

A direct mail piece hit homes that the analyst was not aware of.

The client’s biggest competitor’s site was down temporarily.

Oprah featured the product on her show.

Some kid posted a viral video using the product on YouTube.

Nothing in particular caused it to be what it is — chance selected it from among its alternatives.

The most likely scenario is some combination of several scenarios, both those listed above and others. Our tendency to oversimplify results fosters a dangerous overconfidence in our ability to predict the future. This manifests itself in relying too heavily on forecasts based on a foundation of delusion.

How to avoid bias

Marketers are not helpless against the way our brains are wired. There are specific measures that marketers can take to combat natural biases. It begins with being aware that these biases exist and affect decision making. Instead of rushing to conclusions, slow down and engage your System 2. Be thoughtful, deliberate, and logical with how you approach your research. The best way to do this is to establish a structured system or checklist to guide how you evaluate potential data trends. Even if you feel confident in your gut reaction, go through your checklist; System 1 can craft convincing stories out of thin evidence.

It is important to frame the insights and recommendations when presenting them to a client. Do not underestimate the role that the other factors play in determining your results. It can also be helpful to create a list of questions you would not want the client to ask. This list will reveal your blind spots, helping you to better understand your limits and to provide useful information to the client. Finally, don’t rule out chance as a possible explanation for an apparent trend.
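To see how easily chance alone can produce a jump like the 16% in Scenario 1, here is a minimal Python sketch. It assumes a hypothetical site with 1,000 visitors a week and a fixed 4% conversion rate; those numbers, and the function names, are illustrative assumptions rather than figures from any real campaign. Nothing about the site changes from week to week, yet some weeks still look like big wins.

```python
import random

def simulate_weekly_sales(visitors_per_week, conversion_rate, n_weeks, seed=0):
    """Weekly sales totals when nothing changes except chance."""
    rng = random.Random(seed)
    return [
        # Each visitor independently buys with the same fixed probability.
        sum(rng.random() < conversion_rate for _ in range(visitors_per_week))
        for _ in range(n_weeks)
    ]

def share_of_jumps(weekly_sales, threshold=0.16):
    """Fraction of week-over-week changes that are increases of at least `threshold`."""
    jumps = 0
    pairs = 0
    for previous, current in zip(weekly_sales, weekly_sales[1:]):
        if previous == 0:
            continue  # a percentage change from zero is undefined
        pairs += 1
        if (current - previous) / previous >= threshold:
            jumps += 1
    return jumps / pairs if pairs else 0.0

# Hypothetical inputs: 1,000 visitors per week, 4% conversion, 500 weeks.
sales = simulate_weekly_sales(visitors_per_week=1000, conversion_rate=0.04, n_weeks=500)
chance_share = share_of_jumps(sales)
print(f"Share of weeks with a 16%+ jump from chance alone: {chance_share:.0%}")
```

If a meaningful share of simulated weeks clears the threshold with no cause at all, a single good week is weak evidence that any one action, such as replacing broken URLs, drove the result.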

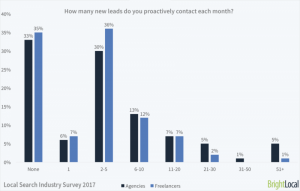

The law of small numbers (and big mistakes), Scenario 2: Our furniture campaign was really inefficient this week. Why haven’t you pulled back bids to bring spend in line with sales?

Small sample sizes are deceptive: they lead to misinformed decisions and sub-optimal performance. Just as we are prone to see causality in two independent but seemingly related events, we are also prone to believe stories created by incomplete data. Referencing our two-system view of the brain, Kahneman wrote, “System 1 runs ahead of the facts in constructing a rich image on the basis of scraps of evidence.” System 1 has no concern for data quality, source, or completeness. That is a System 2 task. Remember: because attention is a limited and valuable resource, our mind is wired for efficiency, and we are often lazy and looking for corners to cut. This leads to bias.

If you’re charting data and notice a downward trend, your first instinct may be to assume that it has some kind of downward pressure on it. Right? But often, a downward trend levels out as more data is collected.

This is known as regression to the mean, and it is often mistaken for a causal force when in reality it is a reliable statistical inevitability. “When our attention is called to an event, associative memory will look for its cause… Causal explanations will be evoked when regression is detected, but they will be wrong because the truth is that regression to the mean has an explanation but does not have a cause” (Kahneman).
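Regression to the mean is easier to believe once you watch it happen. The sketch below is a simulation under stated assumptions: 1,000 hypothetical campaigns that all share the same true weekly performance (100 units) and differ only by random noise. The function name and every number in it are illustrative. It picks the worst 10% of campaigns in week 1 and checks how that same group does in week 2, with no intervention at all.

```python
import random
from statistics import mean

def regression_to_mean_demo(n_campaigns=1000, true_mean=100.0, noise_sd=15.0, seed=1):
    """Return (week 1 average, week 2 average) for the worst week-1 performers."""
    rng = random.Random(seed)
    # Every campaign has the same true performance; only the noise differs.
    week1 = [rng.gauss(true_mean, noise_sd) for _ in range(n_campaigns)]
    week2 = [rng.gauss(true_mean, noise_sd) for _ in range(n_campaigns)]
    # Select the bottom 10% of campaigns by their week 1 result.
    cutoff = sorted(week1)[n_campaigns // 10]
    worst = [i for i, value in enumerate(week1) if value < cutoff]
    return mean(week1[i] for i in worst), mean(week2[i] for i in worst)

worst_week1, worst_week2 = regression_to_mean_demo()
print(f"Worst performers, week 1 average: {worst_week1:.1f}")
print(f"Same campaigns, week 2 average:   {worst_week2:.1f}")
```

The group that looked broken in week 1 drifts back toward the overall average in week 2 even though nobody touched it. Had bids been pulled back in between, the recovery would have been credited to that change.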

Advice

The key to avoiding big mistakes due to small sample size is to be aware of how much data you do not have. Approach your analysis with the rigor of the scientific method. Make an observation, ask a question, form a hypothesis, conduct your research, and draw a conclusion based on the data. Establish a cutoff beforehand to determine how much data you will need to be confident in your conclusion. Do a search for data that would disprove your hypothesis, if only briefly.
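One way to set that cutoff ahead of time is a standard sample-size estimate for comparing two conversion rates. The sketch below uses the common normal-approximation formula; it is one reasonable approach, not the only one. The 4% baseline rate, the 16% relative lift, and the 5% significance and 80% power defaults are all assumptions chosen for illustration.

```python
from math import ceil
from statistics import NormalDist

def visitors_needed_per_group(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Approximate visitors needed in each group to detect the difference
    between two conversion rates (two-sided test, normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_power = NormalDist().inv_cdf(power)          # critical value for power
    variance = baseline_rate * (1 - baseline_rate) + expected_rate * (1 - expected_rate)
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)

# Hypothetical question: how much traffic is needed to trust a 16% relative
# lift on a 4% baseline conversion rate (0.04 -> 0.0464)?
needed = visitors_needed_per_group(baseline_rate=0.04, expected_rate=0.04 * 1.16)
print(f"Visitors needed per group: {needed:,}")
```

Under these assumptions the answer runs well into the thousands of visitors per group, which is why one week of data from a single campaign rarely justifies a bid change on its own.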

In Summary

While it may be difficult or uncomfortable to accept, random events do exist, and our tendency to deny randomness carries significant consequences. Work to acknowledge and address the biases you have against randomness, and identify instances where randomness may be playing a role in your decision making. Slow down and engage your System 2 – don’t blindly take off with the first story that your System 1 constructs. Do not make decisions based on incomplete data or small sample sizes. Do not try to over-explain unknown movements in data – random events by definition do not lend themselves to explanations. Random things really do happen.

MediaPost.com: Search Marketing Daily
