An analysis of how CDP users plan to use the platforms finds that requirements differ greatly.

Use cases are the bacon of technology management: they make everything better, from strategic planning to product deployment. They’re especially important for Customer Data Platform projects, where requirements are often poorly understood. To make things easier, the CDP Institute offers a free online use case builder that leads users through the use case development process.

We recently examined anonymized data from more than 70 use cases created in the builder and published the results. Here are some conclusions we hope you find useful.

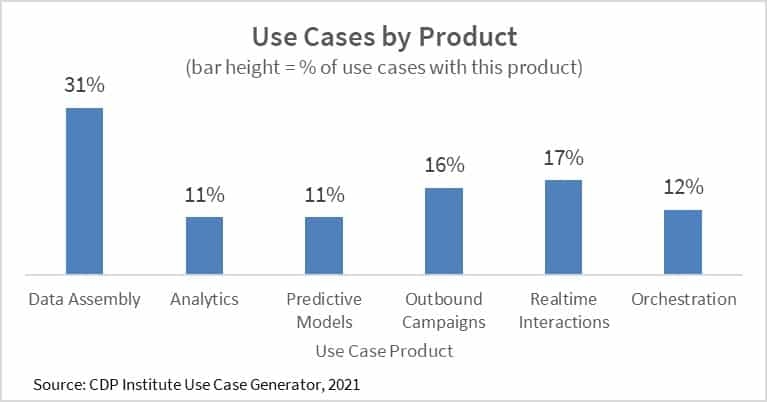

- CDPs are both platforms and applications. We ask users to classify their use case based on its ultimate product. The options form a sequence (or, if you prefer, a maturity model) that starts with data assembly, moves to analytics and predictive models, and ends with outbound campaigns, real-time interactions, and cross-channel orchestration. Each relies on the preceding products: you can’t do orchestration without campaigns, can’t do campaigns without analysis, and can’t do anything without assembling the data first.

Before looking at the data, we would have guessed that the more basic levels would be more common, simply because more users would be at earlier stages of development. We would have been wrong: products were clustered at either end of the sequence, with data assembly accounting for 31% and two of the most advanced, campaigns and interactions, accounting for 33%.

On reflection, we realized that analytics and predictive models are rarely goals in themselves: users want their CDP to provide measurable value, which usually means driving an application such as a campaign or real-time interaction. This happens either when the CDP itself provides that application or when the CDP is a platform that feeds its data to an external application. People who specified a data assembly use case probably already had suitable applications in place and simply wanted their CDP to support them. People who specified campaigns, interactions, or orchestration as goals wanted those applications built into their CDP. A close look at the use cases listed under analytics and predictive models supports this view: many of them sent their results to external sales or customer service applications, which few CDPs can provide.

This variety of use case products helps to explain the continuing confusion over what’s really needed in a CDP. There is no one answer: some buyers only want a platform CDP that assembles data; others want their CDP to include analytic or customer-facing applications. Which category you fall into becomes clear when you define your use cases.

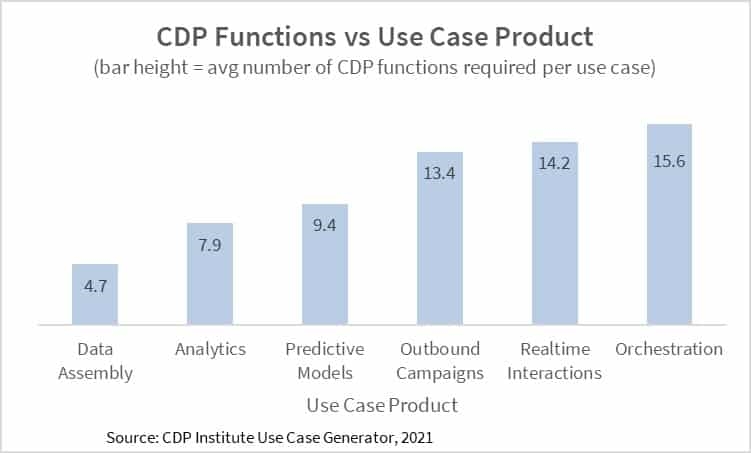

- Many use cases have simple requirements. CDPs often have dozens or even hundreds of data sources. Yet one quarter of the use cases required three or fewer sources and two or fewer destinations. Averages were 5.2 sources and 4.3 destinations. There were similarly low figures for the number of departments, Key Performance Indicators, and CDP functions. More advanced use cases don’t necessarily involve more data or departments, but they do require more functions since they depend on the previous tasks in the sequence.

The lesson is that it’s fairly simple to deploy initial CDP use cases and start earning value, especially if you start with the less demanding use cases like data assembly and analytics. That’s the good news.

The less good news is that expanding beyond the initial, simple set of use cases requires continual incremental work to add new data sources, new users, new measures, and new functions. That, in turn, means that CDP projects can earn the greatest returns as quickly as possible by first deploying use cases that require the same resources, and then moving on to cases that overlap with those resources as much as possible. Adding use cases for a new department is an especially big leap because you’ll probably need to add new sources, users, functions, metrics, and destinations all at once.

This incremental deployment is the classic “crawl, walk, run” approach beloved by consultants everywhere. It’s a good approach but, unfortunately, it can be misinterpreted to mean that it’s safe to start your project by focusing on just a small group of initial users. The fallacy should be clear: those initial use cases may need only a few resources, but later use cases will require many more. You have to identify those long-term needs at the start of the project so you can buy a system that will meet them and build a realistic project plan. That requires collecting use cases from future users at the start, even though those cases won’t be deployed any time soon.

The full report includes many more insights and enough charts to wallpaper a small shower. Enjoy.

The post What the CDP Institute learned from dozens of use cases appeared first on MarTech.
