As web technologies continue to grow and evolve, interest in technical SEO is growing along with it. Columnist Patrick Stox looks ahead to see what advancements are on the horizon and how these may impact technical SEO.

It seems as though technical SEO is experiencing a resurgence in popularity. In 2016, Mike King believed that we were on the cusp of a technical SEO renaissance due to the rapid advancement of web technologies, and in 2017, interest continued to boom. Spirits are high going into 2018, with a renewed passion for learning and a growing recognition of how important technical SEO is.

I love the new energy and focus, but I also realize that people have limits on their time and effort, and I wonder how far people are willing to go down the rabbit hole. The web is more complex than ever and seems to be scaling and fracturing exponentially. At the rate we’re going, I’m not sure it’s possible to keep up with everything, and I believe we will start seeing more specialization within technical SEO.

More data

Everyone is creating (and able to access) more data than ever before. With this expansion, we have to make sense of more data and expand our skills to process it all. We’re getting more data about customers, websites, the web itself, and even how people use the web.

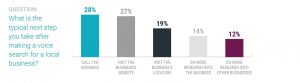

One of the most exciting prospects for me is using clickstream data to map customer journeys. No longer do we have to guess where people came from and what actions they took based on some idealized model; we can see all the different paths people took and their messy journeys through the web, our site, and even competitor websites. We’re seeing a lot more SEO tool companies start to use clickstream data, and I’m curious to see what they will use it for.
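To make that concrete, here is a minimal Python sketch of turning raw clickstream events into journey paths. It makes several assumptions. The event format is hypothetical: a user ID, a timestamp in seconds and the domain visited. A session ends after 30 minutes of inactivity. Real clickstream providers use their own schemas and session rules.

```python
from collections import Counter, defaultdict
from typing import Iterable, NamedTuple


class Event(NamedTuple):
    """One hypothetical clickstream record."""
    user_id: str
    timestamp: int  # seconds since the epoch
    domain: str     # site visited, e.g. "google.com"


def journeys(events: Iterable[Event], session_gap: int = 1800) -> Counter:
    """Count each distinct sequence of domains visited within a session.

    A new session starts when a user is inactive for more than `session_gap`
    seconds (30 minutes by default, an assumption). Consecutive hits on the
    same domain are collapsed into a single step.
    """
    by_user = defaultdict(list)
    for event in events:
        by_user[event.user_id].append(event)

    paths: Counter = Counter()
    for user_events in by_user.values():
        user_events.sort(key=lambda e: e.timestamp)
        path, last_ts = [], None
        for event in user_events:
            # Close the current session after a long enough gap.
            if last_ts is not None and event.timestamp - last_ts > session_gap:
                paths[tuple(path)] += 1
                path = []
            if not path or path[-1] != event.domain:
                path.append(event.domain)
            last_ts = event.timestamp
        if path:
            paths[tuple(path)] += 1
    return paths


events = [
    Event("u1", 0, "google.com"),
    Event("u1", 40, "competitor.com"),
    Event("u1", 95, "oursite.com"),
    Event("u2", 0, "google.com"),
    Event("u2", 30, "oursite.com"),
    Event("u2", 5000, "oursite.com"),  # a later, separate session
]
for path, count in journeys(events).most_common():
    print(" -> ".join(path), count)
```

Sorting the result with `most_common()` puts the most frequent paths first, including the ones that pass through competitor sites.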

Google is ramping up their efforts on data as well, including sourcing more data than ever before from users. Google is gathering more data with things like Google My Business Q&A that will likely be used for voice search if it’s not already. Google Webmaster Trend Analyst Gary Illyes stated at Pubcon Vegas in 2017 that there will be more focus on structured data and more applications for the data this year.
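For anyone who hasn’t worked with structured data yet, here is roughly what it looks like in practice. A schema.org object is serialized as JSON-LD and embedded in the page. The sketch below builds one for a local business in Python. The business details are made up, and `local_business_jsonld` is a hypothetical helper, not part of any library.

```python
import json


def local_business_jsonld(name, street, city, region, postal_code, telephone):
    """Return a schema.org LocalBusiness object as a JSON-LD <script> tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": name,
        "telephone": telephone,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "addressRegion": region,
            "postalCode": postal_code,
        },
    }
    # Escape "</" so a value containing "</script>" cannot close the tag early;
    # "<\/" is still valid JSON and decodes back to "</".
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + body + "\n</script>"


print(local_business_jsonld(
    "Example Bakery", "123 Main St", "Springfield", "IL", "62701", "+1-555-0100"
))
```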

Eventually, Google will be able to understand our websites well enough that they won’t need the structured data. We’ve already seen Google provide more and more Knowledge Graph information and rich results, showing other people’s data, such as weather, song lyrics and answers, directly on their own pages. That trend is expanding rapidly, and eventually, as Barry Adams put it, they will likely treat websites as data sources.

I don’t know if I love the prospect of this or hate it. On one hand, I hate that they can scrape and use our data; on the other hand, I think it would be amazing to see a whole new kind of search where facts, opinions, POVs and more are aggregated and broken down into a summary of a topic. Having everything provided for me instead of reading through a bunch of different websites (and likely dealing with a bunch of different ads and pop-ups) sounds pretty good to me as a user. If Google doesn’t go this route, that leaves room for a competitor to do it and genuinely differentiate itself in the search space.

Mobile, speed, security

Google’s mobile-first index is rolling out and will be for quite a while. This is going to require a shift in thinking, from desktop to mobile, for both SEOs and tool providers. Even Google now mostly talks about “taps” instead of “clicks” in its presentations, which I’ve found to be an interesting shift.

The mobile-first index is going to create havoc for technical SEOs. Many sites will have issues with pagination, canonicalization, hreflang, content parity, internal linking, structured data and more. One positive change that I like is that content hidden for UX reasons will be given full weight in the mobile-first index, which means we have a lot more options for content design and layout.
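Content parity problems like these are easy to check mechanically. The sketch below uses only Python’s standard-library `html.parser`. It compares a desktop page with its mobile counterpart on four signals: canonical tags, hreflang annotations, JSON-LD blocks and links. It assumes you have already fetched both versions of the same URL, for example with a mobile user agent. Fetching, JavaScript rendering and visible-text comparison are left out.

```python
from html.parser import HTMLParser


class SEOSignals(HTMLParser):
    """Collect a few SEO-relevant signals from one HTML document."""

    def __init__(self):
        super().__init__()
        self.canonical = None
        self.hreflang = set()   # (language, href) pairs from rel="alternate" links
        self.jsonld_blocks = 0  # number of <script type="application/ld+json">
        self.links = set()      # href values of <a> tags

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        rel = (a.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel:
            self.canonical = a.get("href")
        elif tag == "link" and "alternate" in rel and a.get("hreflang"):
            self.hreflang.add((a["hreflang"].lower(), a.get("href") or ""))
        elif tag == "script" and (a.get("type") or "").lower() == "application/ld+json":
            self.jsonld_blocks += 1
        elif tag == "a" and a.get("href"):
            self.links.add(a["href"])


def extract(page):
    parser = SEOSignals()
    parser.feed(page)
    parser.close()
    return parser


def parity_report(desktop_html, mobile_html):
    """List signals present on the desktop version but missing or different on mobile."""
    d, m = extract(desktop_html), extract(mobile_html)
    issues = []
    if d.canonical != m.canonical:
        issues.append(f"canonical: desktop={d.canonical!r}, mobile={m.canonical!r}")
    if d.hreflang - m.hreflang:
        issues.append(f"hreflang missing on mobile: {sorted(d.hreflang - m.hreflang)}")
    if m.jsonld_blocks < d.jsonld_blocks:
        issues.append(f"JSON-LD blocks: desktop={d.jsonld_blocks}, mobile={m.jsonld_blocks}")
    if d.links - m.links:
        issues.append(f"links missing on mobile: {sorted(d.links - m.links)}")
    return issues
```

An empty list means the two versions agree on these signals. The check does not cover hidden content, pagination or anything rendered by JavaScript.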

Google has also been saying it will look into making speed a more prominent metric for the mobile-first index. Right now, it’s basically on/off: you’re only hurt by speed if you’re really slow. In the future, they may give speed more weight or scale its effect according to how fast a page actually is.

Security is top of mind for a lot of people right now. Last year saw some of the biggest data breaches in history, such as the Equifax hack. Google has been pushing HTTPS everywhere for years. As a good first step, Chrome has started marking HTTP pages as “Not Secure,” mostly pages with forms; eventually, the plan is to show this on all HTTP pages, with a more noticeable red warning label.
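If you still have HTTP pages, one way to prioritize the migration is to triage them by what Chrome is likely to warn about first. The sketch below is a rough, hypothetical heuristic and not Chrome’s actual logic. It ranks password fields over HTTP as the highest risk and any other user input as medium. Plain HTTP pages are ranked low, since they are expected to be flagged eventually.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class InputFinder(HTMLParser):
    """Record the user-facing form fields on a page."""

    def __init__(self):
        super().__init__()
        self.fields = []

    def handle_starttag(self, tag, attrs):
        if tag == "input":
            kind = (dict(attrs).get("type") or "text").lower()  # "text" is the HTML default
            if kind != "hidden":  # hidden inputs are not typed into by users
                self.fields.append(kind)
        elif tag in ("textarea", "select"):
            self.fields.append(tag)


def not_secure_risk(url, page):
    """Rough, hypothetical triage of how urgently an HTTP page needs HTTPS."""
    if urlsplit(url).scheme.lower() == "https":
        return "ok"
    finder = InputFinder()
    finder.feed(page)
    finder.close()
    if "password" in finder.fields:
        return "high"    # password fields over HTTP were among the first pages flagged
    if finder.fields:
        return "medium"  # any other data entry over HTTP
    return "low"         # plain HTTP page, expected to be flagged eventually
```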

The EU also has new privacy rules taking effect in May 2018, known collectively as GDPR (General Data Protection Regulation). The push for security and privacy may take away a lot of the data currently available to us and make maintaining the data we have more complex, as there may be different rules in different markets.

The expanding and fractured web

I don’t even know where to start with this. The web is growing, and everything is changing so fast. It seems like every week there’s a new JavaScript (JS) framework. They’re not just a fad — this is what the websites of the future will be built with. All of the search engines have seen this and have made great strides in crawling JS. (Yes, even Bing.)

We have all sorts of new technologies popping up, like AMP, PWAs and GraphQL. The web is more fractured than ever, and many of these new technologies are highly technical and seem to change constantly. (I’m looking at you, AMP!) We may even see more VR (virtual reality) and AR (augmented reality) websites as these devices become more popular.

Rise of tools

I expect to see a lot of changes in tools and in different systems this year. I’ve recently seen a few systems that note in an HTTP response header when they are the ones firing a redirect. That helps with troubleshooting when redirects can happen at multiple levels and there is a lot of routing. This is one trend I wish would become a standard. I think we’re also going to see more SEO work happening at the CDN level. I don’t think many SEOs are offloading redirects and processing them at the edge yet, but that’s generally the best place for them.
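Here is a minimal sketch that combines both ideas, assuming a hypothetical redirect map that lives at the edge. It collapses redirect chains into a single hop, and it adds a header naming the layer that fired the redirect. The header name `X-Redirect-By` and the `cdn-edge` label are illustrative choices, not a standard.

```python
from typing import Dict, Optional, Tuple

# Hypothetical redirect map, as it might be deployed to a CDN edge.
REDIRECTS: Dict[str, Tuple[str, int]] = {
    "/old-page": ("/interim-page", 301),
    "/interim-page": ("/new-page", 301),
    "/promo": ("/sale", 302),
}


def edge_redirect(
    path: str, layer: str = "cdn-edge", max_hops: int = 10
) -> Optional[Tuple[int, Dict[str, str]]]:
    """Return (status, headers) for a redirecting path, or None to pass through.

    Chains are followed to the final target so the client sees one hop. The
    result is a 301 only if every hop in the chain was permanent.
    """
    if path not in REDIRECTS:
        return None
    permanent, seen = True, set()
    while path in REDIRECTS:
        if path in seen or len(seen) >= max_hops:
            raise ValueError(f"redirect loop or overly long chain at {path!r}")
        seen.add(path)
        path, status = REDIRECTS[path]
        permanent = permanent and status == 301
    # X-Redirect-By is an illustrative header name, not a standard one.
    return (301 if permanent else 302), {"Location": path, "X-Redirect-By": layer}
```

Under these assumptions, a request for `/old-page` would get a single 301 to `/new-page` carrying `X-Redirect-By: cdn-edge`, instead of passing through `/interim-page` first.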

I’m really excited about all the advancements in JavaScript as well, and about seeing what everyone will do with Service Workers and especially things like Cloudflare Workers, which allow you to process JS at the edge. I’ve seen an uptick in posts about injecting changes with JS through tools like Google Tag Manager, but those changes are slow to apply and happen only after the page loads. Making the same change before the page is delivered to the user, which is possible at the edge, will be a much better solution.
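The sketch below illustrates the kind of change an edge worker could make before the HTML reaches the browser: it replaces whatever canonical tag a page has with the one you want. It is written in Python only to keep this document’s examples in one language. It also uses regular expressions for brevity. An actual worker would run in the platform’s own runtime and should use a proper HTML parser or rewriter.

```python
import re
from html import escape

# Regex-based for brevity; a production worker should use a real HTML parser/rewriter.
CANONICAL_RE = re.compile(r"""<link\b[^>]*\brel=["']?canonical\b[^>]*>\s*""", re.IGNORECASE)
HEAD_RE = re.compile(r"<head\b[^>]*>", re.IGNORECASE)


def set_canonical(page: str, canonical_url: str) -> str:
    """Replace any existing canonical tag with one pointing at canonical_url."""
    if HEAD_RE.search(page) is None:
        return page  # no <head> to put the tag in; leave the response untouched
    page = CANONICAL_RE.sub("", page)
    head = HEAD_RE.search(page)
    tag = f'<link rel="canonical" href="{escape(canonical_url, quote=True)}">'
    # Insert the new tag right after the opening <head> tag.
    return page[: head.end()] + tag + page[head.end():]
```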

I’m also excited to see what SEO tools are going to come up with. There’s more data available than ever before, and I’m seeing an increased interest in machine learning. I would like to see more tools build in things like workflow and processes, or even understanding, rather than just being data dumps, as they mostly are now.

The class of tool I’m most excited about, and expect to really come into its own this year, is one I don’t even know what to call. These tools are basically systems that sit between your server and CDN (or could also act as a CDN) and allow you to make changes to your website before it is delivered. They can be used for testing or just to scale fixes across one or multiple platforms. The most interesting part for me will be how they differentiate themselves: what kinds of logic, rules and suggestions they offer, and whether they will detect errors on their own.
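Testing is a good example of logic these systems have to get right. In SEO split tests, it’s common to split pages rather than visitors, because a search engine sees only one version of each URL. One minimal way to do that is to hash each URL path, so the same page always lands in the same group. The `bucket` helper and the test name below are hypothetical.

```python
import hashlib


def bucket(url_path: str, test_name: str, groups=("control", "variant")) -> str:
    """Deterministically assign a page (not a visitor) to a test group.

    The assignment depends only on the test name and the path, so Googlebot and
    every user always get the same version of a given URL.
    """
    key = f"{test_name}:{url_path}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Use the first 8 bytes of the hash as an integer and map it onto a group.
    return groups[int.from_bytes(digest[:8], "big") % len(groups)]
```

The edge layer would then apply the change (a new title template, say) only to pages where `bucket(path, "title-test") == "variant"` and compare organic traffic between the two groups. Including the test name in the hash means each new test reshuffles pages independently of earlier ones.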

The tools I’m talking about are Distilled ODN, Updatable from Ayima, RankSense and RankScience. They give you full control of the DOM (Document Object Model) before the website is served. It’s like having a prerender where you can change anything you want about your website. These systems have the potential to solve a lot of major issues, but even though they are fairly new, I wonder about their longevity, since the previously mentioned Cloudflare Workers could make these changes as well.

Google will help

I talked about smarter tools with more understanding, but Google has tons of data as well. Their goal is to serve the best page for the user, regardless of who optimized the best. As they see the same problems on websites over and over, I fully expect them to start ignoring or correcting more technical SEO problems on their end. We’ve seen this time and time again in how they handle duplicate content, 301/302 redirects, parameters and so much more. For now, we still do everything we know we should do, but I wonder how many of the things we fix now won’t need to be fixed in the future.
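Parameter handling is a good example of something you can still take care of on your own side today. Here is a minimal sketch of collapsing parameter variants of a URL into one form, the kind of normalization you might use when generating canonical tags or sitemaps. It assumes a hypothetical, site-specific list of parameters that never change page content. It does not show how Google handles parameters internally.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical list of parameters that never change page content on this site.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "utm_term",
                  "utm_content", "gclid", "sessionid"}


def normalize_url(url: str) -> str:
    """Collapse parameter variants of a URL into one canonical form."""
    parts = urlsplit(url)
    # Drop ignored parameters and sort the rest so their order doesn't matter.
    query = sorted(
        (k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
        if k.lower() not in IGNORED_PARAMS
    )
    return urlunsplit((
        parts.scheme.lower(),
        parts.netloc.lower(),
        parts.path or "/",
        urlencode(query),
        "",  # fragments are never sent to the server
    ))
```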

Google is also giving us more data than ever before. In the beta for Google Search Console, we have a lot of new tools for technical SEO, including the Index Coverage and AMP Status reports. These are great for showing the types of problems on the website, but the interesting thing to me is that Google has identified and categorized all these different problems. If they know the problems, they could correct for them — or maybe, as an intermediate step, make suggestions in GSC for webmasters.

Conclusion

Technical SEO has a brilliant future ahead of it. I see so many new people learning and adapting to challenges, and the energy and excitement in the space is incredible. I’m looking forward to a great 2018, seeing what advancements it will bring and what new things I can learn.

[Article on Search Engine Land.]

Opinions expressed in this article are those of the guest author and not necessarily those of Marketing Land.

