Prioritization is a critical skill to master when building out a testing program. It’s about making smart choices and applying discipline to the decision-making process.

In my experience helping companies build their test programs from scratch, as well as optimizing more mature programs, I’ve seen the benefits of adopting a rigorous prioritization scheme time and time again. Besides helping turn a high volume of ideas into an actionable plan, prioritization makes the entire company smarter by forcing the testing team to think deeply about the factors that make an optimization practice successful.

Based on my experience working with Optimizely customers, here are three key steps to follow to bring prioritization into your optimization strategy.

Step 1: Build A Hypothesis Library

Before you can prioritize which experiment to run next, you need hypotheses—predictions you create prior to running an experiment. Brainstorm sessions (and this article) are great ways to generate a lot of hypotheses.

A note on great brainstorms: they are, by nature, free-wheeling, blue-sky, gesticulation- and whiteboard-intensive activities. They’re multi-disciplinary. They’re imaginative. When you leave a brainstorm, you should feel like you have more hypothesis ideas than you can possibly run this entire year! The more ideas the better. (A full blog post on how to lead a great test idea brainstorm coming soon.)

Once you have ideas, you want to see them all in one place to start prioritizing. Call this your repository, backlog, library—it’s the place where experiment ideas live.

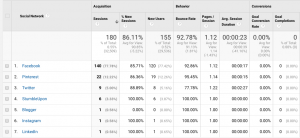

Tools I have seen my customers use for their backlogs are:

- Trello

  - Pros: Very simple, shareable, visual way to manage tests at different stages of development.

  - Cons: I’m less familiar with Trello, but from what I’ve seen, it seems naturally less robust and might work better for ‘per-test’, as opposed to ‘program-level’ documentation.

- JIRA

  - Pros: Robust, configurable system that is directly connected to the development team. Great for managing test submissions, has some charting built in.

  - Cons: Not very user-friendly, can add layers of friction for non-developers; might also leave you ‘fighting the queue’ if your development team is taking on tests as tickets competing with other work.

- Asana

  - Pros: Great for task management and sets of sub-tasks, social assignment features.

  - Cons: Might best serve as a social task management tool on top of your core roadmap (just like I’ve found Basecamp & Trello), not intended for sophisticated formulas or charting.

- Documents (gDoc, Word, etc)

  - Pros: Ultra simple, flexible. Quick to make.

  - Cons: No dynamic functionality, not great for task management; good for individual test scoping.

- Spreadsheet (Google, Microsoft)

  - Pros: Extremely flexible, dynamic functionality, charting built in; the de facto choice for most professionals.

  - Cons: Might require more thought and effort to set up, will require maintenance and controls for sharing; desktop-based versions not easily shareable.

- Basecamp

  - Pros: Simple, social. Boards are well suited for a high number of items. I love the ease of use.

  - Cons: Like Trello, Basecamp may be good for individual tests, but lacks the depth of charting or calculation functionality for more sophisticated schemes.

- …or a great project management tool out there that I may not have heard about. What does your team use?

First person to respond in the comments with evidence of a custom application for managing tests wins an Optimizely shirt!

Once you have experiment ideas in your backlog, the question becomes: how do you prioritize which ones to tackle and which to table? We need some criteria.

Step 2: Set Prioritization Criteria

This is the real meat of the prioritization process. Here, you plant a flag in the ground and say, “These are the factors that we think will make this test a valuable use of time, better than those other tests that we’re letting sit patiently on the sidelines.”

The fundamental idea behind prioritization is to choose criteria that serve as a proxy for a test’s return on investment (ROI).

Return on Investment is, at the most basic level, a ratio between the cost of doing something (the Investment) and the expected revenue/conversions/leads generated/downloads/video-views/pages viewed/articles shared (the Return) of that something.

Estimate ROI for tests by thinking through these questions:

- Estimate the effort (cost) of the test. Once you’ve gotten past the basic steps of learning how your testing tool works and what your team is capable of, you can get a basic sense of the effort involved to do something. Consider these criteria:

  - Number of hours to design and develop tests. You can be quite rigorous in assessing the relative cost of different team members’ time.

  - Volume of tests your production team can (should) manage. Given bandwidth, how do we design our tests to run as many tests as possible? Higher volume of tests generally leads to greater overall success.

  - Balance between casting a wide net of simple, low-hanging-fruit tests and more complicated tests that may have more fundamental effects on site experience. Both are important. Optimally you will run a sliding scale of experiments from a high volume of easier tests to a lower volume of complicated tests.

- Estimate the impact (value) the test will provide. This is much more difficult to determine, particularly for teams newer to testing. Consider these criteria:

  - Does this test employ a well-known U/X optimization concept, like removing distraction in a checkout flow? That’s a good sign!

  - Does this test address a KPI? Prioritize tests that are designed to have a direct impact on your bottom-line metrics.

  - Does this test take place on one of your critical flows? While comprehensive experience optimization will take on every aspect of the customer experience, not every page or app view is created equal; tests on your most highly trafficked pages and key conversion flows will inherently be more impactful.

  - Case study from an online retailer who increased RPV by 38 percent by testing the checkout funnel.

  - Does this test explore messaging strategy? Experimenting with copy and messaging is high value because you can apply it to other areas of your marketing.

  - Does the test change more than one element on a page? Or is it tightly focused on one element, like a button or headline? Tests on single elements will naturally be less impactful and may require more traffic. This doesn’t mean these tests shouldn’t be done, just weighed properly.

  - How dramatically do our variations differ from the original? If we’re testing button colors against a control of blue, are we testing more shades of blue or trying out neon yellow? The degree of difference will often determine the degree of effect you can see.
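To make the effort and impact estimates above concrete, here is a minimal sketch of how a backlog could be scored and ranked. It rests on assumptions of my own rather than a standard formula: the `Experiment` class, the 1-to-5 impact scale, and the "impact per hour" score are all hypothetical stand-ins for whatever criteria your team settles on, and the sample backlog is invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Experiment:
    name: str
    effort_hours: float  # estimated hours to design and develop (assumed input)
    impact: int          # subjective impact rating, 1 (low) to 5 (high) (assumed scale)


def roi_score(exp: Experiment) -> float:
    """Rough ROI proxy: estimated impact per hour of effort."""
    if exp.effort_hours <= 0:
        raise ValueError("effort_hours must be positive")
    return exp.impact / exp.effort_hours


def rank(backlog: list[Experiment]) -> list[Experiment]:
    """Return experiments sorted from highest to lowest ROI proxy."""
    return sorted(backlog, key=roi_score, reverse=True)


# Hypothetical backlog entries, invented for this example.
backlog = [
    Experiment("Simplify checkout form", effort_hours=20, impact=5),
    Experiment("Swap homepage hero image", effort_hours=2, impact=2),
    Experiment("Rewrite pricing page headline", effort_hours=4, impact=3),
]

for exp in rank(backlog):
    print(f"{exp.name}: {roi_score(exp):.2f}")
```

With these made-up numbers, the quick image swap ranks first and the larger checkout test ranks last, even though the checkout test has the highest impact rating. A simple ratio like this favors low-effort tests, so treat the score as a starting point for discussion rather than a final answer.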

As some of the awesome testing pros in our community have discussed, there are more complicated approaches to define test prioritization criteria.

Keep in mind… As you define criteria, know that there will be some things you won’t ever know for certain (this is experimentation, after all). Many things won’t become clear until your team starts to build a collective test memory—another great result of using a central test repository tool! Your results should be recorded and stored for learning! Try it out and optimize your approach as you would your site, using data and iteration.

If you’re a beginner or new to testing, I recommend prioritizing low-effort tests over high-value ones. Simpler tests, such as swapping an image or changing a call to action (CTA), will help you learn the process and better gauge the perceived value of tests further down the line.

And finally, there will always be other considerations, such as:

- Timeliness. Certain tests need to support a media campaign or a website redesign. Be sensitive to the rest of the company’s needs for your sites and apps.

- What your boss tells you to do. All HIPPO jokes aside, bosses are supposed to be a conduit for your company’s overarching strategy. If your boss says you need to place a relative focus on mobile web tests, and you hadn’t already considered doing so, it’s probably a good idea to prioritize a few mobile tests higher in the queue. (That being said, once you’ve created and socialized a great prioritization scheme, you will certainly find it easier to secure buy-in from management—who wouldn’t love to see their team coming to the table with smart, proven approaches to their work!)

Step 3: Schedule, Commit, & Execute

Once you’ve ranked your tests by some approximation of their ROI, all that’s left is to schedule, build, and execute. You’ll need to get buy-in, create documentation and measurement plans for individual experiments, and use Gantt charts or other great tools to translate your prioritization into an actual project schedule.

Make sure you stay true to the criteria you set beforehand. Reference your logic when analyzing and reporting on results. By building a strong prioritization framework into your testing strategy, you’ll begin to see greater impact from the tests you choose to run. You’ll be smarter about how you iterate. And you’ll be able to say “no” to those tests that just aren’t worth your time.

Interested in learning some prioritization criteria that you can incorporate into your own roadmap? Join me on Thursday for an in-depth webinar, Testing for Success: Top Tips for Unlocking Revenue.
