With real-time algorithm updates becoming the norm in place of manual releases, what issues might arise? Columnist Patrick Stox examines some potential effects of faster algorithm updates.

Every update of Panda and Penguin in recent years has brought joy to some SEOs and sorrow to others. As the algorithms become real-time, the job of an SEO will become harder, and I wonder if Google has really thought through the consequences of these updates.

So, what potential issues may arise as a result of faster algorithm updates?

Troubleshooting algorithmic penalties

Algorithmic penalties are a lot more difficult to troubleshoot than manual actions.

With a manual action, Google informs you of the penalty via the Google Search Console, giving webmasters the ability to address the issues that are negatively impacting their sites. With an algorithmic penalty, webmasters may not even be aware that a problem exists.

The easiest way to determine if your website has suffered from an algorithmic penalty is to match a drop in your traffic with the dates of known algorithm updates.

In the screenshot below, you can clearly see the two hits for Penguin back in May and October of 2013 with Penguin 2.0 and 2.1.

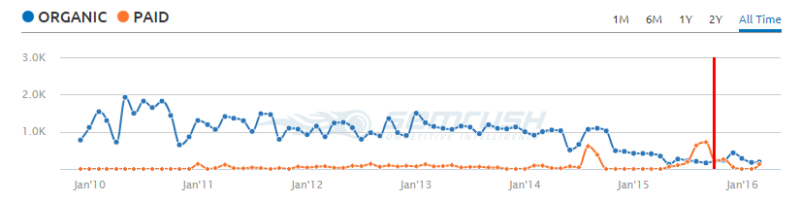
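The date-matching approach described above can be sketched in a few lines of Python. This is a minimal illustration, not a diagnostic tool: the function names, the 30 percent drop threshold, the matching window, and the sample traffic figures are all assumptions made for the example, and the exact update dates are the commonly reported rollout dates, which you should verify before relying on them.

```python
from datetime import date, timedelta

# Commonly reported rollout dates (assumption: verify against a
# maintained update history before relying on them).
KNOWN_UPDATES = {
    "Penguin 2.0": date(2013, 5, 22),
    "Penguin 2.1": date(2013, 10, 4),
    "Penguin 3.0": date(2014, 10, 17),
}


def find_drops(weekly_sessions, threshold=0.30):
    """Return (week_start, fractional_drop) for every week whose sessions
    fell by at least `threshold` compared with the previous week."""
    drops = []
    for (_, prev), (week, cur) in zip(weekly_sessions, weekly_sessions[1:]):
        if prev > 0 and (prev - cur) / prev >= threshold:
            drops.append((week, (prev - cur) / prev))
    return drops


def match_updates(drops, updates=KNOWN_UPDATES, window_days=14):
    """Pair each drop with any update that launched during that week
    or up to `window_days` before the week started."""
    matches = []
    for week, drop in drops:
        for name, launched in updates.items():
            gap = week - launched
            if timedelta(days=-6) <= gap <= timedelta(days=window_days):
                matches.append((week, name, round(drop, 2)))
    return matches


# Hypothetical weekly organic sessions, keyed by the Monday of each week.
weekly_sessions = [
    (date(2013, 5, 13), 1000),
    (date(2013, 5, 20), 980),
    (date(2013, 5, 27), 600),
    (date(2013, 6, 3), 590),
]

matches = match_updates(find_drops(weekly_sessions))
for week, name, drop in matches:
    print(f"Week of {week}: sessions fell {drop:.0%}, close to {name}")
```

A match here is only a hint. As noted below, redesigns and other site changes can produce the same pattern, so a drop that lines up with an update still needs to be checked against your own change log.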

Without analytics history, you can look for traffic drops using tools that estimate traffic (such as SEMrush), although those drops may also stem from website redesigns or other changes. The site below has had its traffic depressed for over a year because of Penguin 3.0, which hit in October of 2014.

For possible link penalties, you can use tools like Ahrefs, where you can see sudden increases in links or things like over-optimized anchor text.

In the screenshot below, the website went from only a couple of dozen linking domains to more than 2,000 in a very short period of time — a clear indicator that the site was likely to be penalized.
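A sudden jump like this is easy to flag programmatically if you have a history of linking-domain counts. The sketch below assumes you have exported monthly counts from a backlink tool into a simple list. The function name, the thresholds, and the sample numbers are hypothetical and chosen only to mirror the pattern described above.

```python
def flag_link_spikes(monthly_domains, max_growth=3.0, min_new=100):
    """Flag months where the linking-domain count grew to at least
    `max_growth` times the previous month's count and by at least
    `min_new` domains. Input is a chronological list of
    (month_label, linking_domain_count) pairs."""
    flagged = []
    for (_, prev), (label, cur) in zip(monthly_domains, monthly_domains[1:]):
        new_domains = cur - prev
        if new_domains >= min_new and cur >= max_growth * max(prev, 1):
            flagged.append((label, prev, cur))
    return flagged


# Hypothetical history: a couple of dozen domains, then more than 2,000.
history = [
    ("2014-01", 22),
    ("2014-02", 25),
    ("2014-03", 2100),
    ("2014-04", 2150),
]

spikes = flag_link_spikes(history)
for label, prev, cur in spikes:
    print(f"{label}: linking domains went from {prev} to {cur}")
```

The two thresholds work together: the ratio check catches explosive growth on small sites, while the minimum count keeps a site that goes from two linking domains to six from being flagged.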

Another easy way to determine if there is an algorithmic penalty is to see if a site ranks high in Maps but poorly in the organic results for different phrases. I’ve seen this many times, and sometimes it goes undiagnosed by companies for long periods of time.

Unfortunately, without having the dates when major updates occurred, SEOs will need to look at a lot more data — and it will be a lot more difficult to diagnose algorithmic penalties. With many companies already struggling to diagnose algorithmic penalties, things are about to get a lot tougher.

Misdiagnosis and confusion

One of the biggest problems with the real-time algorithm updates is the fact that Google’s crawlers don’t crawl pages at the same frequency. After a website change or an influx of backlinks, for example, it could take weeks or months for the site to be crawled and a penalty applied.

So even if you’re keeping a detailed timeline of website changes or actions, these may not match up with when a penalty occurs. Server issues or website changes that you are not aware of could also lead to penalties being misdiagnosed.

Some SEO companies will charge to look into or “remove” penalties that don’t actually exist. Many of the disavow files that these companies submit will likely do more harm than good.

Google could also roll out any number of other algorithmic changes that could affect ranking, and SEOs and business owners will automatically think they have been penalized (because in their minds, any negative change is a penalty). Google Search Console really needs to inform website owners of algorithmic penalties, but I see very little chance of that happening, particularly because it would be giving away more information about what the search engines are looking for in the way of negative factors.

Negative SEO

Are you prepared for the next business model of unscrupulous SEO companies? There will be big money in spamming companies with bad links, then showing companies these links and charging to remove them.

The best/worst part is that this model is sustainable forever. Just spam more links and continue charging to remove them. Most small business owners will think it’s a rival company or perhaps their old SEO company out to get them. Who would suspect the company trying to help them combat this evil, right?

Black hat SEO

There’s going to be a lot more black hat testing to see exactly what you can get away with. Sites will be penalized faster, and a lot of the churn-and-burn strategy may go away, but then there will be new dangers.

Everything will be tested repeatedly to see exactly what you can get away with, how long you can get away with it, and exactly how much you will have to do to recover. With faster updates, this kind of testing is finally possible.

Will there be any positives?

On a lighter note, I think the change to real-time updates is good for the Google search results, and maybe Googler Gary Illyes will finally get a break from being asked when the next update will happen.

SEOs will soon be able to stop worrying about when the next update will happen and focus their energies on more productive endeavors. Sites will be able to recover faster if something bad happens. Sites will be penalized faster for bad practices, and the search results will be better and cleaner than ever. The black hat tests will likely have positive effects on the SEO community, giving us a greater understanding of the algorithms.

[Article on Search Engine Land.]

Some opinions expressed in this article may be those of a guest author and not necessarily Marketing Land.


