Most businesses want to get noticed on the web, but they don’t know the best way to accomplish that. Will amazing content do the trick? What about great design or performance and page load times? Will the right keyword density on your pages make a difference? Or should you focus on inbound links?

Companies struggle with these questions every day, and they have been struggling for the past two decades. Getting noticed online has become an industry in and of itself, with consultants, service providers, brokers, and intermediaries trying to make sense of how search and discovery work. As most businesses have learned, it’s an incredibly complex practice: Google uses more than 200 signals to determine the relevance of a site to a specific search, for example.

If the typical search and discovery protocols seem dated and arcane, it’s because a lot of those protocols are dated and arcane. In this age of deep learning and artificial neural networks, for example, why do publishers still have to worry about whether adding multiple H1 tags to their webpages will hurt the discoverability of their sites?

The most important question, however, may be this: Why can a change to Google’s indexing algorithms make or break a business?

Let’s look at Expedia, for example. At the end of 2019, the company had underwhelming quarterly earnings — and shares dropped by more than 20%. Expedia blamed these declines on changes to Google’s algorithm, which they said put their hotel listings lower in search rankings.

Although a majority of searches start with Google, the internet search giant is like a black box. There is little transparency into how Google ranks pages, how it prioritizes results, and, above all, how its own results often supersede those of other search providers. This has been the underlying theme of the European Union’s antitrust cases against Google as well as an impending antitrust investigation being pursued by the U.S. Department of Justice and attorneys general from various states.

Although the algorithms governing search results won’t become public knowledge anytime soon, there are some actions companies can take to prepare for emerging trends in search:

1. Natural Language Processing

NLP is a form of AI that helps machines understand human language and communication. With recent advancements in NLP, internet search protocols can begin to understand the content of webpages rather than just treating them as strings and keywords. Companies should assume that new NLP techniques will cause search engines to try to understand content similarly to how an end user would. Therefore, you should be tailoring your content for users rather than bots.

2. Structured Data

Search engines are increasingly trying to figure out entities in web content (or “things, not strings,” as the saying goes). For example, if the word “Apple” on a webpage is tagged as an “organization,” the search engine would associate it with properties such as location, revenue, management team, etc. If the word “apple” is tagged as a “fruit,” however, it would be associated with attributes like color, nutrition information, etc.

This structure is typically defined using the vocabulary maintained by the community initiative Schema.org and expressed in formats such as JSON-LD or Microdata. Businesses should mark up the entities on their pages with Schema.org types so that search engines can identify them more easily.
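To make the idea concrete, here is a minimal Python sketch of how a page might generate JSON-LD markup for an entity. The helper name `build_json_ld` and the company details ("Example Co", `https://www.example.com`) are made up for illustration; only the `@context` and `@type` keys and the `application/ld+json` script type come from the JSON-LD and Schema.org conventions themselves.

```python
import json


def build_json_ld(schema_type: str, properties: dict) -> str:
    """Return a <script> tag that embeds a Schema.org entity as JSON-LD.

    `build_json_ld` is a hypothetical helper for this sketch, not part of
    any library.
    """
    entity = {
        "@context": "https://schema.org",  # vocabulary the keys come from
        "@type": schema_type,              # e.g. "Organization"
        **properties,
    }
    payload = json.dumps(entity, indent=2)
    # Escape "</" so a stray "</script>" inside a value cannot close the tag early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Assumed example values, for illustration only.
organization_markup = build_json_ld(
    "Organization",
    {"name": "Example Co", "url": "https://www.example.com"},
)
print(organization_markup)
```

The resulting `<script>` block would typically be placed in the page's HTML, where a crawler that supports JSON-LD can read "Example Co" as an organization rather than as a plain string. Which properties a given search engine actually uses varies, so treat the property list as a starting point rather than a guarantee.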

3. Voice and Smart Devices

The methods for search optimization have to change because search itself changes constantly. In the future, not all searches will be based on keyword queries typed into browsers. We’re already seeing content search and discovery moving to social media, voice assistants, chatbots, smart speakers, smart cars, AR and VR environments, and so on. Comscore predicts that half of all online searches will be made via voice in 2020, and Gartner predicts that 30% of searches will come through devices that lack screens.

For content to be discovered under these new conditions, it’s less critical that companies produce every possible website link for specific keywords. Instead, these companies should find the answer that is most relevant to users in a given context and then allow those users to further refine their searches by providing more qualifying information.

To achieve this goal, deep expertise is required in the domain each query belongs to. However, it’s impractical to assume that one company (or one search engine) can build that level of expertise in every domain.

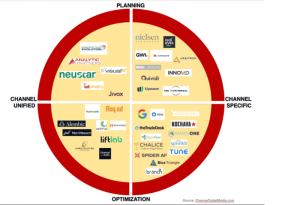

The solution? To be discovered, businesses need to align more closely with content discovery networks, vertical search engines, and other domain authorities (think Alexa skills specific to your domain) rather than relying on outdated keyword-based search.

